Content Warning: This insight contains antisemitic, racist, and hateful imagery.

Introduction

Extremists exploit social media platforms to spread hate against minority groups based on protected attributes such as gender, religion, and ethnicity. Platforms and researchers have been actively developing AI tools to detect and remove such hate speech. However, extremists employ various forms of implicit hate speech (IHS) to evade AI detection systems.

IHS spreads hateful messages through subtle expressions and complex contextual semantic relationships rather than explicit abusive words, which poses a challenge for automatic detection algorithms. Common forms of IHS include dog whistles, coded language, humorous hate speech, and implicit dehumanisation. Moreover, the forms and expressions of IHS evolve rapidly alongside societal controversies (e.g., regional wars). Identifying and tracking such changes is crucial for platforms trying to counter IHS.

In this Insight, we report and analyse “Substitution” as a new form of IHS. Recently, we observed extremists using Substitution to propagate hateful rhetoric against a target group (e.g., Jews) while explicitly naming a different label group (e.g., Chinese). We show that Substitution not only spreads hate effectively but also amplifies engagement and hinders detection.

Antisemitism by Substitution: The Chinese Control American…

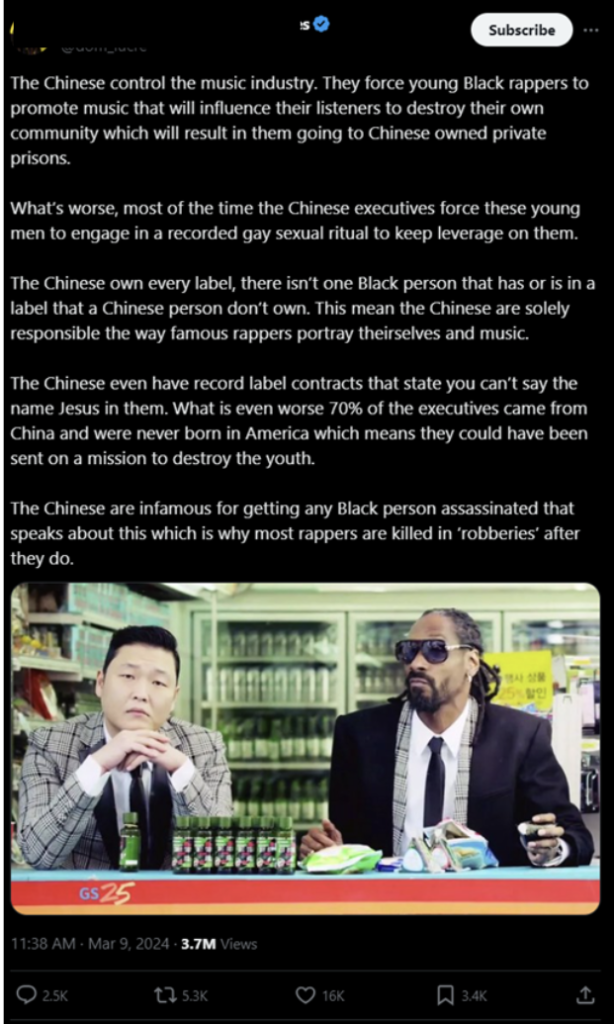

In a recent post on X (Fig. 1), an extremist influencer claims “The Chinese control the (American) music industry.” He elaborates by attributing other common antisemitic conspiracies to the Chinese, including “destroying their communities,” “incarcerating them in Chinese-owned prisons,” “forcing young Black rappers to engage in recorded homosexual acts,” and “destroying young Black rappers who mention the name Jesus”. The post includes a photo of the famous South Korean singer PSY alongside Snoop Dogg. Millions of views, combined with thousands of likes and reposts, demonstrate the strong engagement that Substitution attracts.

Fig. 1: Extremist Substitution post citing hateful conspiracies about Jews (target group) and the music industry while referring to Chinese (label group).

Substitution using prominent hateful stereotypes, conspiracies, and symbols

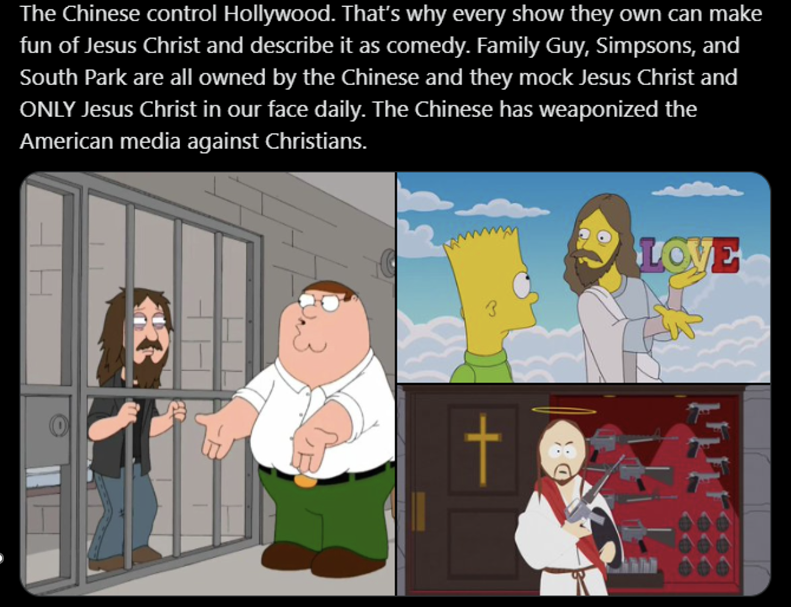

To ensure that the antisemitic message is still clearly conveyed to the target audience, Substitution cites the most recognisable hateful stereotypes and conspiracies, for example, those inspired by the “Zionist occupation government” conspiracy theory. Accordingly, the audience responds by mentioning antisemitic conspiracies like “The Chinese control Hollywood” and “The Chinese have weaponised the American media against Christians” (Fig. 2). Others echo the racist conspiracies: “I was always suspicious of the Chinese stranglehold on the music industry, Hollywood, MSM, politicians, and banks.”

Fig. 2: A Substitution post claiming that the Chinese control Hollywood.

Extremists also use Substitution to invoke further antisemitic conspiracies, such as “Those pesky ‘Chinese’… They founded the Federal Reserve. They destroyed Weimar Germany. They funded both WW1 & WW2. They even took over most of US politics and industry! Why do the ‘Chinese’ have so much power?” This statement alludes to false claims that Jews control the media, banks, and government.

Substitution also spreads through popular antisemitic symbols and memes. One user responds to the post with the echo symbol, “(((the Chinese)))”, to send an antisemitic signal. The triple parentheses are placed around Jewish names or topics to identify, mock, and harass Jews. The symbolism is clearly recognisable within the anti-Jewish extremist community.

The audience recognises the antisemitic Substitution

By using prominent antisemitic stereotypes, conspiracies, and symbols, extremists ensure that their target audience still recognises the hateful message even though it names a different minority as the label group. The replies make it evident that the audience recognises the Substitution. Users either point it out directly (“Exchange Chinese for Jewish?”) or use near-homophones (words with similar pronunciations but different spellings), such as “And by Chinese you mean the Joos.” This demonstrates that Substitution is easily recognisable for the broader target audience, which is a crucial foundation for the propagation of IHS.

Deliberate ambiguity exacerbates engagement with Substitution

Substitution becomes more harmful through its deliberate ambiguity, which further drives user engagement. The ambiguity arises from pairing the hateful stereotypes about one minority (e.g., Jews) with the label of another minority (e.g., Chinese). The original post in Fig. 1 skilfully extends that ambiguity by using a picture of the Korean singer PSY instead of a Chinese person.

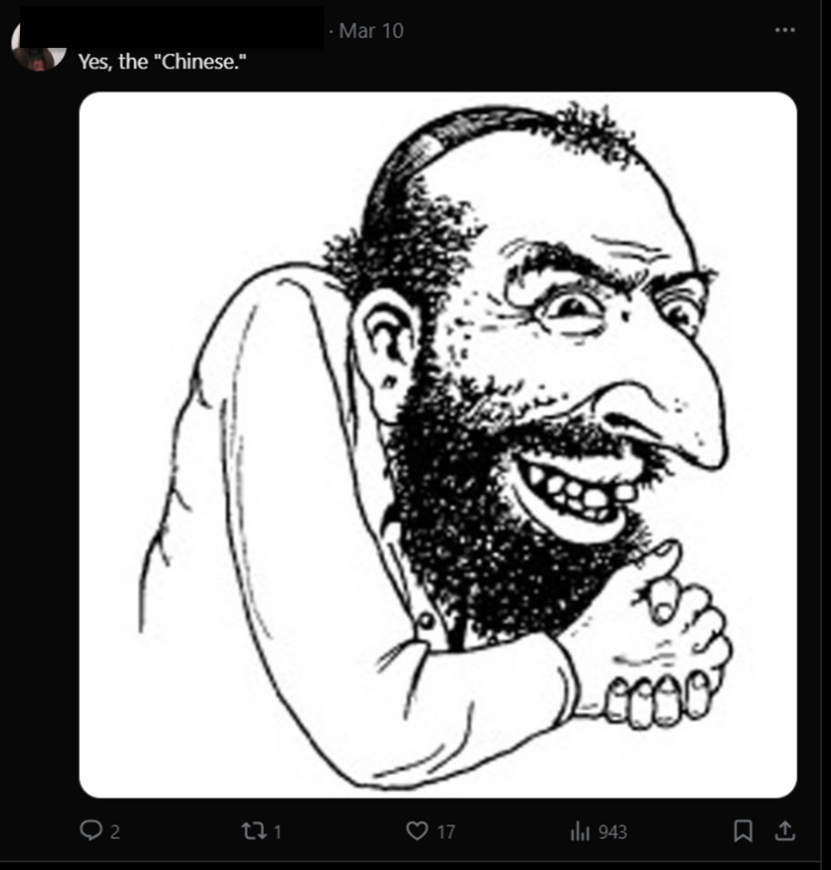

The deliberate ambiguity thereby attracts different forms of engagement. On the one hand, those who wish to emphasise the ambiguity humorously are drawn in. One extremist points towards the intent of Substitution: “Lol. I don’t think he’s talking about Chinese people. I think he’s saying that you can criticize the Chinese on this platform, but there’s another group you can’t criticize.” Others satirically comment with a meme of a person showing stereotypical antisemitic features, accompanied by the text “Yes, the Chinese” (Fig. 3).

Fig. 3: A meme depicting the antisemitic stereotypical appearance of Jews clarifying Substitution’s deliberate ambiguity.

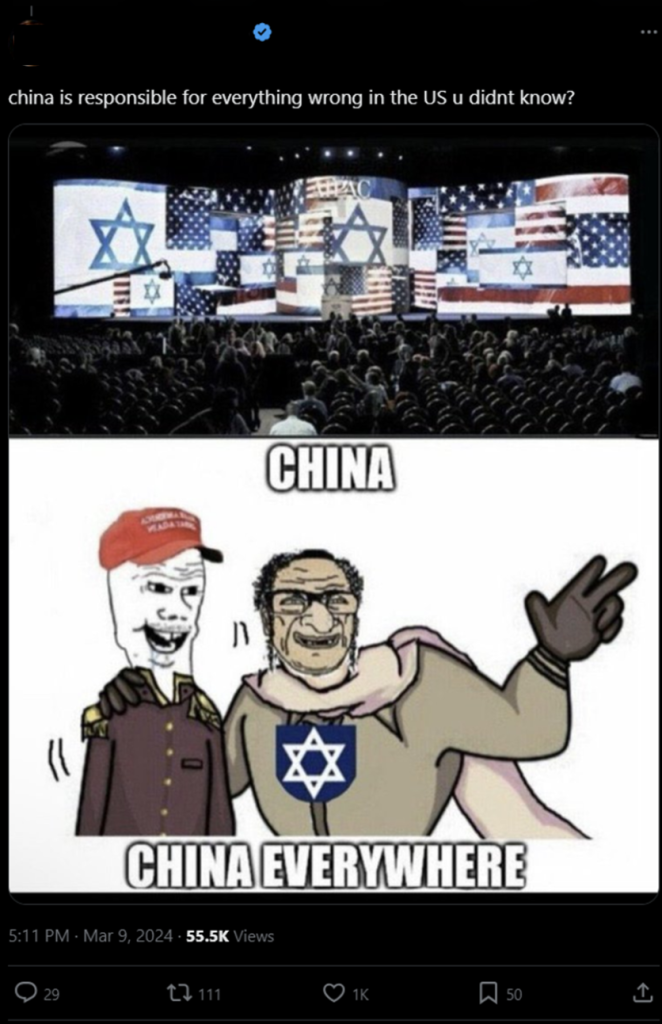

A particularly satirical extremist even suggests that it is the Jews who are substituting themselves with the Chinese to distract the US from their alleged influence (Fig. 4). The meme implies that Jews aim to conceal their manipulation of US politics by diverting attention to a supposed Chinese threat.

Fig. 4: A satirical hateful meme response that extends the conspiracy theory promoted in the original Substitution post.

On the other hand, the deliberate ambiguity triggers those seeking to clarify or correct the Substitution. Some fail to recognise the malicious intent and offer corrections such as “Your attached photo is of snoop with a Korean, not Chinese btw.” These clarifications in turn provoke more discussion about the Substitution, for example, “He is not really talking about the Chinese. He is talking about the great ally of US.”

Even though not all comments share the antisemitic resentments, they still help to increase the exposure of the post. Platforms’ recommender algorithms detect and promote posts with high user engagement. The deliberately ambiguous nature of Substitution provokes extra user engagement, which platform recommenders then inadvertently amplify.

Substitution as a means to avoid detection

Substitution emerges against the backdrop of an increasing crackdown on the alarming rise of antisemitism. In the US, some states have expanded punitive measures to address the escalating antisemitic incidents. Substitution allows extremists, especially those with millions of followers who are potentially subject to more regulatory scrutiny, to spread IHS without fear of prosecution. Whether the Chinese serve as a label group because detection algorithms are less sensitive to Sinophobic threats, or because they mismatch the hateful stereotypes of the target group, remains to be seen.

Hate Speech Policy and AI Detection

Our observation of Substitution as a new form of IHS has several implications for online platforms. The first step is to make platforms, regulators, researchers, and activists in this space aware of this new threat. IHS has a notorious history of normalising discrimination and inciting mass violence. Only by recognising the threat can we properly work towards addressing it.

To guide automated AI algorithms in detecting Substitution, we offer several starting points. First, we note that the cases of Substitution we observed still use the same commonly recognisable stereotypes, conspiracies, and symbols. Current hate speech detection algorithms extract features from text (or images) that are related to pre-determined target groups. Given the ambiguity of target groups in Substitution, we propose that detection algorithms be debiased against target groups by reducing the emphasis on the inclusion or exclusion of particular terms. Instead, attention should be redirected towards the broader context of the conversation, removing spurious target-related features and ideally making the models generalisable to targets that are unseen in the training stage. Second, detection algorithms ought to consider the interdependence and discrepancies between the images and the text in posts.
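One simple way to illustrate reducing the emphasis on particular target terms is to mask group labels before any features are extracted, so that a downstream classifier has to rely on the surrounding stereotype or conspiracy rather than on the label. The following is only a minimal sketch under our own assumptions, not a description of any deployed system: the term list `GROUP_TERMS`, the placeholder `[GROUP]`, and the function `mask_group_terms` are hypothetical names introduced for illustration.

```python
import re

# Hypothetical and deliberately tiny list of group labels (an assumption for
# illustration); a real system would need a curated, regularly updated lexicon.
GROUP_TERMS = [
    "jews", "jewish", "chinese", "korean", "koreans",
    "muslim", "muslims", "christian", "christians",
]

# Longest terms first so longer labels are preferred over shorter ones.
_GROUP_PATTERN = re.compile(
    r"\b(?:" + "|".join(
        sorted((re.escape(t) for t in GROUP_TERMS), key=len, reverse=True)
    ) + r")\b",
    flags=re.IGNORECASE,
)


def mask_group_terms(text: str, placeholder: str = "[GROUP]") -> str:
    """Replace every listed group label with a neutral placeholder."""
    return _GROUP_PATTERN.sub(placeholder, text)


# Example using a reply quoted earlier in this Insight.
print(mask_group_terms("The Chinese control Hollywood"))
```

After masking, posts that pair the same conspiracy with different labels yield the same input, so a classifier trained on masked text cannot separate them by the label alone. Masking by itself does not capture the broader conversational context or image-text discrepancies discussed above; it only removes one source of spurious target-related features.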

Third, we urge platforms to include Substitution in their policies and to educate users to report such deliberately ambiguous content instead of engaging with it. Currently, moderation practices and policies like Community Notes fail to include Substitution. The enforcement of such policies needs to be sensitive towards all potential target groups alike (e.g., Jews, Chinese). The timely updating of hate speech policies to aid human moderation is crucial for controlling the spread of Substitution, particularly given that platforms’ own recommender algorithms may inadvertently promote it.

Hetiao (Slim) Xie is an MPhil student at The University of Queensland. His primary research focuses on applying and optimizing machine learning and NLP to counter online implicit hate speech.

Marten Risius is a Senior Lecturer (Assistant Professor) at The University of Queensland. His work focuses on the digital society and online engagement with a focus on Trust & Safety.

Morteza Namvar is a Senior Lecturer (Assistant Professor) at The University of Queensland, specializing in applying NLP and machine learning in the Information Systems domain.

Saeed Akhlaghpour is an Associate Professor at The University of Queensland. He investigates data privacy, cybersecurity, and digital health.