Note: This Insight is a preliminary exploration and forms part of a broader research paper currently in development. The full paper will further analyse how AI technologies enable enhanced messaging, increased emotional engagement, and refined targeting strategies within jihadist online influence campaigns.

Introduction

The rapid democratisation of generative AI technology in recent years has not only transformed private industries and creative domains but has also profoundly affected how extremist organisations conduct influence operations online. This shift marks a pivotal moment in digital warfare. AI tools that deliver tremendous benefits to private industry are also becoming instruments of manipulation in the hands of extremist groups. Building on previous GNET Insights that have shed light on the adaptation of AI technology for harmful purposes, this piece extends the exploration to the use of AI by jihadists.

Previous analyses reveal how some online communities, notably on 4chan, misuse Large Language Models (LLMs) for harmful purposes. These groups create digital personas that perpetuate violent stereotypes about the African American community, as well as ‘smut bots’ capable of generating explicit, harmful narratives. The manipulation of LLMs to create content that normalises extremist narratives raises serious ethical concerns about the unchecked use of AI in digital spaces. Other investigations have shown how many users in these communities employ open-source models stripped of safety features, or circumvent DALL-E safeguards, to create large quantities of antisemitic content.

While discussions of the Islamic State’s use of AI tools to create visually compelling propaganda have begun to surface, research on how AI enhances the operational effectiveness of terrorist groups more broadly remains limited. This dearth of in-depth analysis extends to how various jihadist factions leverage AI technology to execute more sophisticated information operations. This Insight aims to help bridge this knowledge gap by examining two distinct instances in which violent jihadist actors have strategically deployed AI: one by Hamas and the other by supporters of al-Qaeda and the Islamic State. Each case study sheds light on a different facet of AI application, focusing specifically on AI-crafted content that simplifies misleading narratives and broadens the appeal and online reach of violent extremist ideologies.

Case Study 1: Hamas and the ‘Israeli Diaper Force’

The ongoing conflict between Israel and Palestine marks a historic moment: it is the first war in which generative AI is being used to challenge the authenticity of legitimate media and inundate digital platforms with fake content. This application of AI has opened a new frontier in the dissemination of propaganda, enabling false narratives to be created and spread rapidly at an unprecedented scale.

In early December 2023, during a televised public briefing, Abu Obeida, spokesperson for the Al-Qassam Brigades (the military wing of Hamas, which is designated a terrorist organisation in several countries), claimed that the Israel Defense Forces (IDF) is the only military in the world whose soldiers wear diapers, “specifically Pampers” (Fig. 1). This provocative statement, aimed at undermining the dignity of the Israeli military, can be traced to earlier comments by the late Iranian commander Qassem Soleimani. In a 2017 interview, Soleimani insinuated that the American military supplies its personnel with diapers so that they can “urinate in them when they’re scared”. This rhetoric, steeped in derision, serves not only to belittle the opposing forces but also to propagate a narrative of cowardice.

Fig. 1: Abu Obeida claims IDF soldiers wear diapers

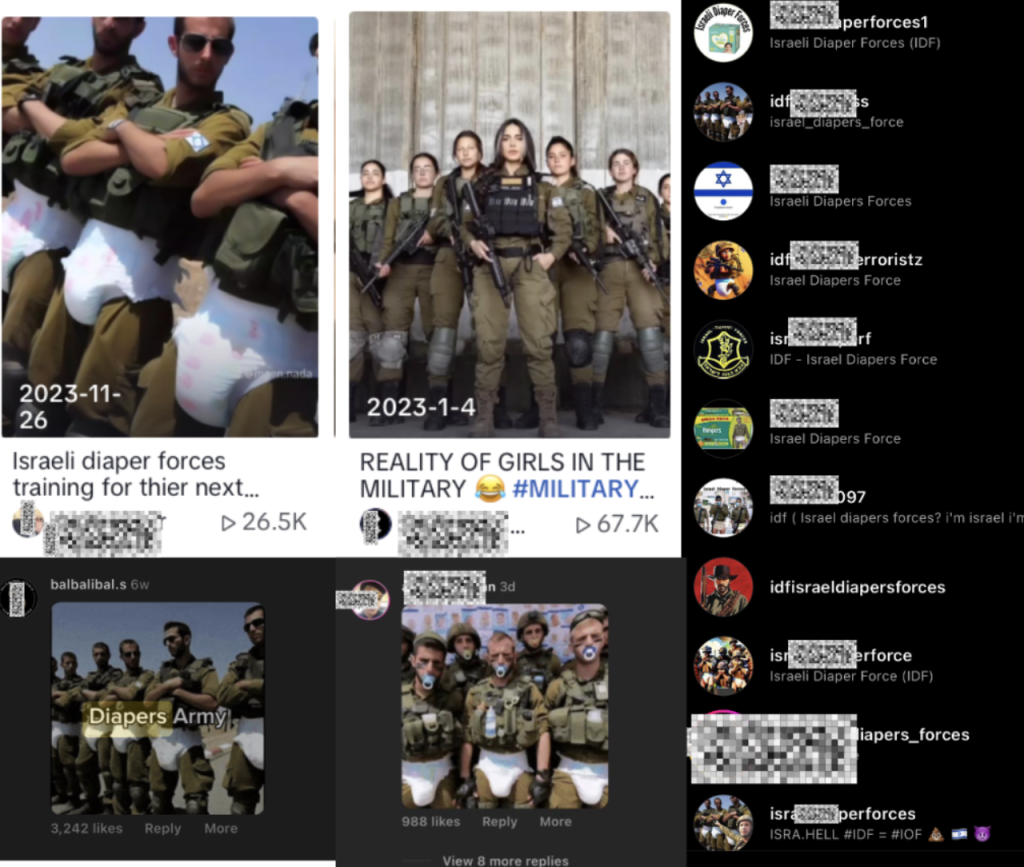

While Soleimani’s comments had limited reach, the Hamas remarks found a broad audience on social media. They were propelled by a flood of TikTok videos, YouTube Shorts, and Twitter posts containing AI-generated images and dramatised audio deepfakes of Israeli commanders suggesting that the emasculating claim was true (Figs. 2-4). Many of these posts also carried the hashtag #IsraeliDiaperForce, which users frequently posted in the comment sections of major news outlets’ Instagram posts.

Fig. 2: Example TikTok video using generative AI images of IDF soldiers wearing diapers

Fig. 3: Voice clone of an Israeli commander suggesting he wears diapers

Fig. 4: TikTok videos showing the variety of dedicated Instagram accounts and popular comments on news posts containing the ‘Israeli Diaper Force’ hashtag

Although the content was clearly AI-generated, its captivating and visually appealing nature may have played a significant role in spreading the unfounded assertion that IDF soldiers wear diapers, along with the broader insinuation of cowardice. This scenario highlights the emergence of the ‘Synthetic Narrative Amplification Phenomenon’ (SNAP), an evolved form of the previously coined ‘liar’s dividend’. The liar’s dividend posits that lies become more believable as synthetic media such as deepfakes make the information environment more uncertain. In that uncertainty, individuals gravitate towards sources that align with their existing biases rather than critically evaluating the evidence at hand. SNAP diverges from the liar’s dividend by showing how even overtly artificial images can distil complex narratives into easily digestible, AI-generated memes. This simplification, a cornerstone of SNAP, transforms false narratives into appealing synthetic content that resonates widely and enhances virality.

The ‘Israeli Diaper Force’ narrative, amplified by Hamas through AI-generated content, exemplifies SNAP. This case, emerging from the Israel-Palestine conflict, underscores a pivotal shift in digital warfare: the strategic use of generative AI to craft and disseminate propaganda that reaches a wider audience even though it is recognisably synthetic. Even before generative AI can produce content indistinguishable from reality, SNAP is already enabling malicious actors to shape public opinion significantly with this nascent technology.

Case Study 2: AQ/IS Digital Influence Campaigns Through Audio Deepfake Nasheeds

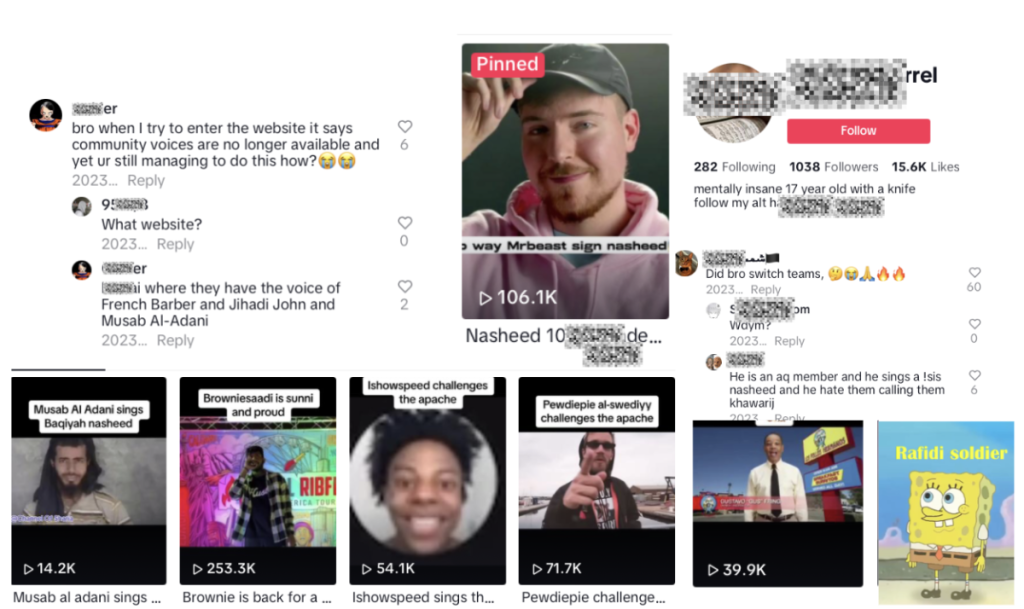

Starting in early 2023, online platforms saw a significant increase in highly convincing voice clones, or audio deepfakes, of celebrities and politicians, ranging from the absurd to the surreal. One popular example is a series of viral TikTok videos in which Joe Biden and Donald Trump battle each other in video games while talking about life. These instances were largely considered harmless and comical, a modern extension of political cartoons. However, other actors quickly adopted the trend, including affiliates and sympathisers of groups such as al-Qaeda and the Islamic State. Over the past several months, a large amount of content has been posted to TikTok in which well-known cartoon characters, such as SpongeBob SquarePants and Rick Sanchez, and popular YouTubers, such as PewDiePie and MrBeast, can be heard singing battle nasheeds. These are Islamic vocal pieces traditionally used to motivate warriors in combat (Figs. 5-7). Extremist groups have adapted these nasheeds, which are performed without instrumental accompaniment, to rally supporters and glorify their causes on digital platforms. Some of these videos gained hundreds of thousands of views, highlighting the potent impact of combining popular culture and advanced technology to spread extremist messages.

Fig. 5: Peter Griffin singing ‘For the Sake of Allah’ and committing acts of violence

Fig. 6: Rick Sanchez singing ‘Baqiyah’, a reference to the Islamic State

The strategic deployment of audio deepfake nasheeds featuring animated characters and internet personalities marks a sophisticated evolution in the tactics extremists use to broaden the reach of their content. These videos embed extremist narratives within content that mimics the tone and style of popular entertainment. In doing so, they evade the scrutiny usually applied to such messages, making the ideology more accessible and attractive to a wider audience. This approach is especially cunning because it exploits viewers’ trust in and fondness for these familiar figures. It subtly guides them to engage with, and possibly follow, the creators of this content out of interest in the characters, without necessarily recognising the extremist undertones.

Fig. 7: Several viral TikTok videos of popular AQ/IS voice clone nasheeds

The choice of characters in much of this content suggests that its creators are targeting younger, more impressionable audiences who might naturally gravitate towards cartoon characters and famous YouTubers. The content’s seemingly harmless appearance, combined with its engaging and often humorous execution, makes younger viewers more likely to follow the content creators and, in turn, to be exposed to further extremist content. The inherent virality of these AI deepfakes facilitates their rapid spread across social media platforms. The widespread availability of voice cloning technology has given violent groups the ability to wrap their messages in a layer of cultural relevance and engagement that was previously beyond their reach.

Navigating the Nascent Threat of AI in Propaganda: Challenges and Safeguards

The use of generative AI by extremist groups to craft and disseminate propaganda presents a nuanced challenge for trust and safety teams across social media and AI companies. In the context of these specific incidents, simply applying labels or watermarks to AI-generated content is unlikely to significantly reduce the effectiveness of such influence campaigns. The case studies in this Insight demonstrate that, even without watermarks, the content jihadists produced using AI technologies was readily identifiable as inauthentic. This suggests that while watermarks may be useful in other situations to signal manipulated content, they fall short against campaigns in which authenticity is not the primary concern. Because these actors focus on streamlining their narratives and enhancing their appeal, the presence or absence of watermarks is largely irrelevant to their objectives.

Addressing the challenges posed by the use of generative AI to spread extremist propaganda demands a comprehensive set of actions from AI companies, social media companies, and governments. This requires moving beyond simple deterrents like watermarks and embracing more sophisticated strategies to mitigate these threats effectively.

For Social Media Companies:

The advent of generative AI technologies has introduced a significant challenge, unleashing a deluge of content that violates platform policies in sophisticated and unprecedented ways. To navigate this landscape effectively, social media companies must significantly bolster their open-source intelligence (OSINT) capabilities. They can do so either by cultivating in-house expertise or by partnering with specialised firms dedicated to the nuanced analysis of extremist online trends and behaviours. Such experts are instrumental in identifying vulnerabilities within content moderation systems. As generative AI tools become increasingly central to users’ attempts to circumvent content moderation policies and generate harmful content, strengthening these teams becomes essential. Their insights are critical not only for refining AI moderation models but also for strengthening existing policy frameworks.

For AI Companies:

AI companies must vigilantly monitor how malicious actors might circumvent safeguards or leverage AI tools to fuel influence campaigns. Many are already investing in monitoring their platforms and taking proactive measures, such as suspending accounts that engage in malicious activity, restricting specific prompts that lead to the generation of harmful content, and warning users about potential misuse. However, they can do much more. One option is to coordinate with social media companies to organise cross-industry red-teaming exercises involving AI companies, social media platforms, and even intelligence officials. These exercises would simulate real-world exploits in a controlled environment, allowing participants to discuss vulnerabilities and defences and to refine countermeasures. Such exercises already exist within the cybersecurity industry, and there is little reason they cannot be applied to informational threats. By engaging in these collaborative efforts, which pool expertise across sectors, stakeholders can develop a comprehensive understanding of potential AI threats and establish robust, collective defences.

For Governments:

The role of governmental bodies in this ecosystem is to foster an informed public through targeted educational initiatives. These efforts should not focus solely on distinguishing real from fake content. They should be broadened to include an understanding of how technologies can be manipulated to alter perceptions and spread misinformation. Several governments that have faced an onslaught of influence operations, such as those of Estonia and Taiwan, have invested heavily in this effort. It is crucial that defence departments invest heavily in similar efforts, helping citizens understand online dynamics and meme culture and thereby advancing their digital literacy. By prioritising education that goes beyond the binary of real versus fake and delves into the mechanics of technological manipulation and its impact on information dissemination, societies can become more resilient to digital influence operations.

Daniel Siegel is a Master’s student at Columbia University’s School of International and Public Affairs. Having worked as a counterterrorism researcher at UNC Chapel Hill’s Program on Digital Propaganda, Daniel honed his expertise in analysing visual extremist content. His research examines the strategies of both extremist non-state and state actors, exploring their exploitation of cyberspace and emerging technologies.