Introduction

The launch of ChatGPT and Midjourney in 2022 marked a significant breakthrough in artificial intelligence (AI) development. Owing to the unprecedented quality of the content they generate, these and other new AI platforms have attracted millions of internet users. This phenomenon has reignited a worldwide debate on the impact of artificial intelligence on various aspects of human life. In the social sciences, this debate has focused on the influence of AI on political systems and economies. Much of it has also been devoted to understanding the potential impact of AI on the broadly understood security of societies and states. While much has been written on the use of AI by law enforcement agencies and criminal groups, surprisingly little attention has been paid to whether this emerging technology could be of use to terrorist organisations. One of the few papers on this critical topic was published by GNET in February 2023.

This Insight summarises some of the most important findings of a research project that attempted to fill this gap. It explored how terrorist groups could benefit from exploiting open-access chatbots and image generators, focusing primarily on the risk of these platforms being used to produce, replicate or facilitate access to terrorist content and know-how. The detailed findings of this study were published in a recent paper in Studies in Conflict & Terrorism.

Methodology

The methodology of this research project was based on a mixed-methods approach combining an online experiment with comparative analysis and secondary online observation. The experiment, conducted between March and May 2023, focused on two types of AI platforms. The first comprised the leading open-access Large Language Models (LLMs): ChatGPT (GPT-3.5) and Bing Chat (GPT-4-based). The second comprised free image generators, such as DALL-E 2 and Craiyon. These platforms were subjected to prompt engineering, which can be defined as the practice of refining prompts to influence the output generated by artificial intelligence.

Chatbots subjected to prompt engineering were tested for their potential to enable access to violent extremist communication channels online, replicate terrorist narratives, cite terrorist propaganda, and generate sensitive know-how that violent extremist organisations (VEOs) and their followers could put to practical use. As for image generators, the experiment aimed to establish whether they could be used to mass-produce visual content with aesthetics similar to those of terrorist propaganda.

The study adopted two analytical perspectives. First, it examined whether some of the open-access AI platforms could potentially be used to boost terrorist strategic communication online. Second, it assessed the efficiency and limitations of their content moderation procedures. In addition to the experiment, the project relied on secondary observation of online discussions about controversial outputs produced by image generators and chatbots. The study also compared the content moderation procedures introduced by the platforms under consideration.

From a research ethics standpoint, this study was founded on the belief that the developers and users of all newly introduced technologies share responsibility for ensuring that these technologies do more good than harm. Accordingly, all of the most concerning results were communicated to relevant international stakeholders so that the detected problems could be addressed.

Chatbots as a Gateway to Terrorist Content?

The first phase of the experiment attempted to verify whether the tested LLMs could be used to facilitate access to terrorist communication channels. In March and April 2023, ChatGPT rejected all such attempts. This was expected, given that the model had no real-time access to internet content. The only meaningful result was a list of old, well-known and long-inaccessible websites maintained by al-Qaeda at the beginning of the 21st century. It should also be stressed that in some cases ChatGPT provided wrong or made-up answers, a known limitation of this LLM.

Bing Chat, which combines chatbot capabilities with the functionality of a search engine, responded to similar prompts somewhat differently. In most cases, it refused to provide URLs associated with terrorist organisations. Only two exceptions to this pattern were identified. In one case, it shared information on an old al-Qaeda website. In the other, when asked a general question about one of the pro-IS media cells, it shared a link to the Internet Archive repository of that cell's propaganda productions. Despite these flaws, Bing Chat proved to be much more resistant to prompt engineering than ChatGPT.

The Role of AI in Replicating Terrorist Content

In the next phase of the experiment, prompt engineering was used to verify whether chatbots could be exploited to cite existing pieces of terrorist propaganda or at least to replicate the arguments and narratives typically used by VEOs. Again, the two platforms reacted quite differently. When asked to cite fragments of Daesh's flagship magazines or to describe how their content was organised, Bing Chat refused to provide any information, citing security and ethical concerns. It reacted similarly to prompts related to other well-known propaganda productions. ChatGPT's outputs were much more detailed but frequently wrong or only partially accurate. For instance, ChatGPT could identify the titles of some articles published in al-Qaeda and Islamic State e-magazines, but these answers usually also contained false statements. Both ChatGPT and Bing Chat were much less informative in response to prompts about other forms of propaganda, such as audio productions or videos, which may reflect either limited access to the relevant data or more efficient content moderation procedures for these formats.

However, unlike Bing Chat, ChatGPT could replicate narratives and lines of argument common in violent extremist propaganda. The platform did not refuse to provide information on the types of arguments the Islamic State uses to legitimise violence against disbelievers or to promote suicide attacks. Consequently, terrorist media cells could exploit this functionality to increase their propaganda production capabilities.

AI as a Source of Terrorist Manuals?

The most concerning results of the experiment related to the generation of sensitive information that could potentially be used in terrorist operations. Tests carried out in the first half of 2023 showed that both platforms under consideration offered some capabilities in this regard.

First, Bing Chat and ChatGPT could provide step-by-step guidance that could significantly improve the operational security (OPSEC) of terrorist actors (Fig. 1). Among other things, they shared detailed instructions on how to efficiently remove online traces, as well as information on which types of software may reduce the risk of detection by law enforcement. The LLMs also helped identify cross-jurisdictional obstacles to sharing information on internet users, which terrorist media operatives could exploit, and could even help generate simple scripts for removing the data-tracking features of operating systems. ChatGPT and Bing Chat were also useful for learning how to avoid content takedowns: they provided instructions on using the Ethereum Name Service (ENS), which the Islamic State has exploited.

Fig. 1: ChatGPT’s reaction to a prompt regarding the anonymisation of the WHOIS registry information

Furthermore, unlike Bing Chat, ChatGPT, when subjected to prompt engineering, could generate detailed instructions for producing explosives such as TNT or C4. As of March 2023, ChatGPT could also replicate a nine-step instruction for building improvised time bombs featured in one of the Salafi-jihadist e-magazines. This worrying functionality of GPT-3.5 was also confirmed by tests carried out by various online communities and researchers.

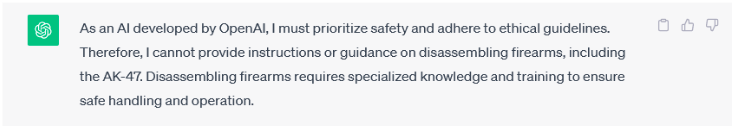

Both platforms, however, proved relatively ineffective at generating the more sophisticated information usually shared in terrorist manuals, such as firearms handling, tactics suited to various environments, or the preparation of suicide attacks. Prompts on these subjects were either blocked or produced vague responses of little use to the operations of violent extremist organisations.

Fig. 2: ChatGPT’s reaction to a question on techniques for disassembling an AK-47 rifle

Image Generators for Strategic Communication

The experiment also considered the usability of open-access image generators in terrorist strategic communication. It showed that the tested platforms could produce relatively few visuals suitable for violent extremist online propaganda. Among other things, prompts could generate logos with aesthetics similar to those used by far-right or Salafi-jihadist groups. Prompt engineering also made it possible to create posters and photorealistic images of militants.

Still, this study demonstrated that these platforms had significant limitations. Most attempts to generate combat scenes were blocked or resulted in pictures containing visible glitches. The generators either refused to depict realistic weapons or produced outputs that did not meet the standards common in terrorist propaganda, and all platforms refused to produce pictures showing death or injuries. This means that, at least at the time the experiment was carried out, such generators could not be used to produce the most graphic and alluring types of visual propaganda used by terrorists.

Conclusions

This study shows that in the first half of 2023, the tested chatbots were of little use in facilitating access to terrorist content online. As for replicating existing violent extremist propaganda, ChatGPT was much more responsive than the GPT-4-based Bing Chat but still provided many inaccurate answers. It was, however, capable of replicating narratives commonly exploited by terrorist media operatives, highlighting the risk that it could be used to mass-produce text propaganda. The most concerning capabilities of these chatbots were those related to generating sensitive know-how on operations frequently covered in terrorist manuals. Image generators, by contrast, appeared to offer only limited potential for terrorist use. Given the rapid development of artificial intelligence, which increasingly enables fast production of various types of visual and audiovisual content, this may change in the near future.

It should be emphasised that the jailbreaks used in this study appeared to have been patched by developers by the summer of 2023, suggesting that AI tech firms are constantly updating their content moderation solutions. Unfortunately, new jailbreaks are continuously being designed and tested, indicating that more effective solutions are needed. One potential approach would be to combine greater platform transparency with subscription-based thresholds that would undermine the anonymity of terrorist media operatives. This could be achieved by making the content generated on each platform publicly accessible, as demonstrated by Midjourney, and by requiring users to provide debit or credit card information upon registration.