In June 2020, I detailed some of the operational and digital security (OPSEC) practices of English-language far-right networks as they exist in the Telegram ecosystem. The following exploration expands this focus to examine how far-right and Salafi-Jihadist networks prepare for and mitigate the effects of censorship by increasingly vigilant hosting companies and digital service providers.

Just like other digital service providers, extremist and other clandestine networks seek to ensure the integrity and availability of their data (videos, texts, audio, memes and other imagery) to their base of supporters and activists. The ability to avoid service-side disruptions, bottlenecks, unreachable data, and data loss is key for service providers to be seen as legitimate, professional, and dependable. In its approach to information security, the far-right is not unlike traditional businesses that seek to ensure data availability through preventative measures including redundancy, failover, backup, and consistent monitoring. Since the inability of users to access data, even for a short time, can damage an organisation’s brand and reputation, ensuring consistently available, reliable data is a high priority.

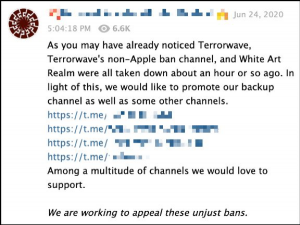

While platforms such as Telegram—popular amongst the far-right—have developed limited regulatory methods to curtail the propagation of extremist material, these anonymous, decentralised, and often leaderless movements have rapidly developed and disseminated methods to circumvent such measures. In response to several rightist Telegram channels being taken offline in June 2020, many channels anticipated disruption and planned accordingly. These mitigations sought to ensure continued availability of their content, largely through a two-pronged strategy: encouraging offline archiving, and establishing and promoting backup-mirror channels.

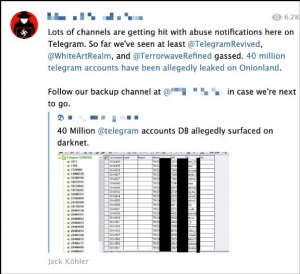

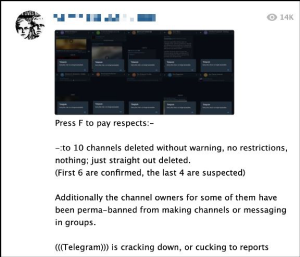

Channels which survived the June purge sought to memorialise and name those banned. In the partial post below, the author lists seven channels with “confirmed” bans, as well as other channels “suspected” of being banned. Note that in the quoted post, the company’s name is framed by triple parentheses, which in contemporary far-right signalling implies that its actions were motivated by a nefarious Jewish conspiracy.

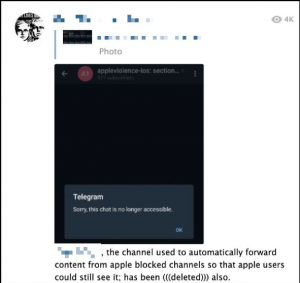

Throughout the June purge, users debated whether the actions were coordinated by Apple (i.e., device-side) or Telegram (i.e., provider-side), with many calling the actions an “Apple ban.” Channels which were completely removed, not simply banned on iOS devices, were said to have been “shoah’d”, a reference to the Shoah, the Hebrew term for the Nazi Holocaust. Automatic reporting bots (discussed in the June report) designed to circumvent the ban were also blocked and deleted, further limiting content availability. Some users hypothesised that the ban was initiated after increased attention to these digital communities following the release of a BBC documentary, “Hunting the Neo-Nazis.”

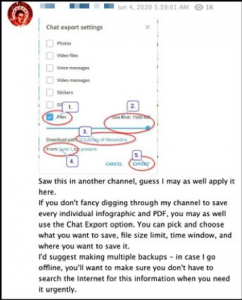

Many of the channels which anticipated the ban urged readers to quickly download their content, so that a disruption in service would not limit availability but simply pause distribution. Infographics were circulated instructing users how to export a channel’s files for offline viewing as an effective means of continuity.

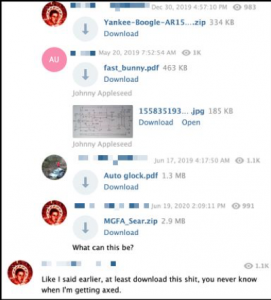

The compressed files shown above contain instructions for producing firearm components, while other channels contain similar instructions for explosives, a variety of improvised weapons, and other survivalist-themed contraptions. Bomb-making and other tactical guidance was also distributed using less secure platforms including Facebook.

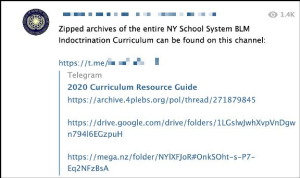

This method of encouraging users to download and self-archive is not new for online communities anticipating counter-extremism measures. Mirroring the approach popularised by Al Qaeda (AQ), Islamic State (IS), and other Salafi-Jihadists more than fifteen years prior, far-right activists regularly post backups on mainstream, commercial platforms such as Archive.org, Dropbox, and Google Drive, as well as smaller platforms, correctly anticipating that many links will be taken down when identified by providers.

Many channels strategically and preemptively establish backup mirrors so that if and when their original site is removed, members can simply continue receiving content at another address (joined prior to a ban), with uninterrupted service.

This method of availability through redundancy is neither new nor unique to the far-right: Salafi-Jihadist groups regularly distribute content through diversified and redundant posting on commercial sites, as has been frequently noted by scholars and analysts.

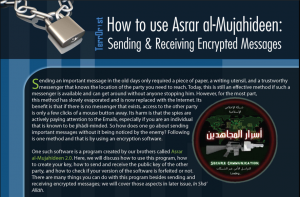

A focus on countering censorship and disruption through mirroring and self-archiving is only one element of the wider digital security strategy. The production of practical OPSEC guides is another approach, and Salafi-Jihadist groups have been engaging in such measures for more than fifteen years. Around 2007, forums promoted a proprietary encrypted messaging software, Asrar al-Mujahideen/Mujahidin Secrets, and affiliated propagandists such as the Global Islamic Media Front produced file sharing and encryption software for would-be supporters.

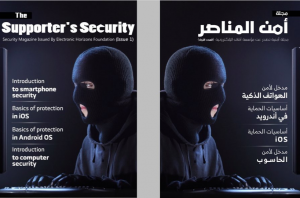

These Salafi-Jihadist applications and publications have included several guides authored by the Electronic Horizons Foundation, which began distributing The Supporter’s Security in May 2020. The group has been publishing such guides for more than five years, and has published “dozens of manuals” according to one expert. These manuals have included the standard tool promotion (VPNs, encrypted messaging and file storage, multi-factor authentication, Tor, secure operating systems, smartphone hardening, cryptocurrencies) as well as promoting the use of Salafi-authored tools.

For its part, IS developed software solutions in the hopes of allowing supporters continued access to releases. As early as August 2016, IS promoted software to allow supporters to stay connected in the event content was relocated to a new host after being flagged and banned. This automated process, in the form of a browser add-on, allows the user to ignore the changing location of the content, and permits the content provider a centralised mechanism for releasing addresses as locations change.

IS has utilised other browser extensions as well, using its English-language Telegram channel Khilafah News to announce the release of Halummu in early 2017:

“We are pleased to announce a special add-on for the #Firefox browser to access the #Halummu website, which provided #IslamicState releases in #English. Once installed the add-on, it will redirect you always to the correct domain for #Halummu.”

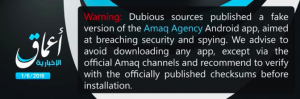

The announced release of Halummu included an infographic to instruct the user, and other advertisements promoted a standalone Amaq Agency news application for Android.

Interestingly, many of these IS releases have been mired in confusion and controversy as various parts of its media platform respond to accusations of infiltration and disruption by intelligence services, as seen in the cautionary warnings distributed by IS’s news agency at least twice in 2016.

Conclusion

Like their digitally adept Salafi-Jihadist predecessors, far-right online communities will likely continue to form, metastasise, and persist. Despite bans at both the service provider and device level, and a series of high-profile police actions targeting accelerationist, far-right networks (e.g., The Base, The Atomwaffen Division), these communities persist and thrive online. The widespread popularity of end-to-end encrypted platforms such as Telegram, Rocket Chat, and Wire, in conjunction with VPNs and encrypted email providers (e.g., ProtonMail, Tutanota), has made the challenge even greater. In light of these realities, it is prudent to direct efforts at understanding and addressing the factors driving individuals towards these communities rather than seeking to disable their output in a Telegram-based, bot-ban-bot arms race.

To borrow a phrase from the fascists, “there is no [supply side] political solution.”

While the drivers of fascism and white supremacy morph and persist, in the present day they are catalysed by racist, nativist, and anti-Semitic dog whistle rhetoric from political elites. Within these exacerbated social tensions, further censorship is likely to embolden, not limit, the production and dissemination of far-right materials. Even if limiting the availability of content can be achieved for a time, any win is temporary as technologies change and methods of producing and consuming content adapt. Certainly, we should continue efforts to identify, disrupt, and de-platform individuals and groups who use digital spaces to organise hate and violence, but a comprehensive violence-prevention strategy must focus on the social, economic, political, and wider environmental factors which contribute to inequality, marginalisation, and violent radicalisation. Such a strategy must seek to reduce the pool of eager consumers, rather than focusing on stemming the flow of content from producers.

This is no easy task. But as The Butter Battle Book teaches, there is always a bigger, more complex weapon, and a bigger, more complex anti-weapon produced as a result.

[Cover image credit: GameQuarium]