Content warning: This Insight contains examples of antisemitic and Islamophobic far-right propaganda.

Introduction

Far-right groups have increasingly been able to mobilise and weaponise technology for activism and their campaigns. Recent research reports have suggested that such groups have been able to exercise an ‘opportunistic pragmatism’ when using online platforms, creating new bases of convergence and influence in such disparate places as Germany, Italy and Sweden. While success in this space has been limited, such instances demonstrate a shift away from parochial concerns and a move towards more transnational ambitions in using technology to disseminate far-right messages and ideology to a wider audience.

Indeed, this dialogic turn is symptomatic of the plethora of social media platforms that characterise the modern internet. No longer are far-right groups content with talking amongst themselves, as they did on the bulletin boards, chat forums and closed online spaces of the early internet. Increasingly, these actors have taken advantage of ‘likes’, ‘retweets’ and ‘pins’ to disseminate (usually sanitised) versions of their messages to a wider audience. What makes this content problematic is its often banal and coded nature: it draws on notions of tradition, heritage and the military in order to boost followership and widen exposure to nativist narratives and messaging.

A more recent example of how far-right extremists have exploited online technologies for their own propaganda, recruitment and kinetic attacks is the use of artificial intelligence (AI)-based tools. Recent reports have shown how such groups have exploited existing generative AI tools to explore the possibilities of propaganda creation, image creation, and the design of recruitment tools in service of nativist ends. This Insight reports the findings of an exploratory study into how UK far-right groups are talking about the uses of artificial intelligence, and how practitioners working on preventing and countering violent extremism (P/CVE) can scaffold timely interventions in this space to meet such efforts.

Context: The ‘Dialogic’ Turn in UK Far-Right’s Online Activism

The far right in the UK has been at the forefront of using online technologies to propagate its message and mobilise followers for well over a decade. As early as 2007, it was noted that the neo-fascist British National Party’s (BNP) website was one of the most visited party-political websites in the country. By ‘gamifying’ its content through competitions to spot keywords and by acting as an alternative news platform, the BNP website attracted nearly seven times as much traffic as that of the UK Labour Party and nearly three times as much as that of the Conservatives. Nevertheless, in this earlier period far-right use of the internet in the UK was largely limited to websites and online chat forums.

More recently, the UK’s far right has moved from using the internet as a monologic tool for getting propaganda ‘out there’ to a dialogic one, using Web 2.0 and social media to engage with its supporters and target audiences. One group that was successful in developing this more outward-facing and professionalised use of social media platforms in the UK was the anti-Islam street protest movement and political party Britain First. Founded by the BNP’s former Head of Campaigns and Fundraising, Jim Dowson, Britain First posted seemingly benign material about tradition, the Royal Family and the British Army in order to tempt non-aligned users into liking its social media posts. As one 2017 research study of the group’s social media presence found, the most shared content on Britain First’s pages was not material about the group itself, but articles problematising the refugee crisis and reporting on so-called ‘Muslim grooming gangs’.

Most recently, there has been a further shift in how the far right in the UK uses internet platforms to share and communicate its activities to a wider audience. In particular, the live-broadcasting functions of social media sites have enabled far-right activists to make a living as alt-journalists: door-stepping and harassing so-called ‘enemies of the people’ in order to drive followership, and therefore donations, to crowdfunding platforms such as Patreon, Kickstarter and Indiegogo.

An example of this in action is the former leader of the English Defence League (EDL), Tommy Robinson. Originally recruited by the Canadian alt-journalism outfit Rebel Media in 2016, Robinson has fully incorporated live broadcasting into his guerrilla-style form of solo activism, whether reporting on far-right demonstrations and terror events or staging vigilante attacks on mainstream journalists and researchers. This approach has been copied, with devastating effect, by James Goddard, formerly leader of Yellow Vest UK, an anti-Islam and pro-Brexit campaign, who harassed MPs and journalists outside the UK Parliament in order to further his divisive politics and drive donations.

How UK Far-Right Extremists Talk About Their Uses of AI

The recent public attention paid to generative AI and its potential exploitation for both benign and malevolent purposes has not escaped the UK far right. Understanding how such extremist groups talk about these technologies is important if practitioners are to get ahead of the curve when designing P/CVE responses in this space.

Below are the findings of my own exploratory content analysis of how three non-violent UK far-right groups (Patriotic Alternative, Britain First and Identity England) and one leader (Tommy Robinson) discuss artificial intelligence on their Telegram channels. The earliest mentions of AI within these channels date back to 2021. However, these discussions represent only a small fraction of the overall content compared with the wider range of topics these groups engage with. Like mainstream actors, most of these groups are in the preliminary stages of their engagement with AI, focusing on exploring and discussing its potential applications. The analysis revealed four key findings:

The UK far right’s exploitation of AI is preliminary, and the discussion is largely negative

In general, the UK far right’s appraisal of generative AI is negative. Besides a few podcasts, blogs and AI image-generation attempts (Fig. 1 & 2), there is no serious or sustained engagement on public-facing channels and platforms with the idea of harnessing AI to achieve their goals. In this study, only one post viewed AI in a positive light, encouraging members of the organisation to generate their own AI images as part of broader community-building activities. The remaining posts (described below) involve active derision and conspiratorial critiques of the technology.

Fig. 1: ‘The Absolute State Of Britain’ Podcast on AI

Fig. 2: Patriotic Alternative AI Image Generation Competition

Their discussion of AI tends to focus on anti-government and anti-globalist critiques of the technology rather than core ideological concerns

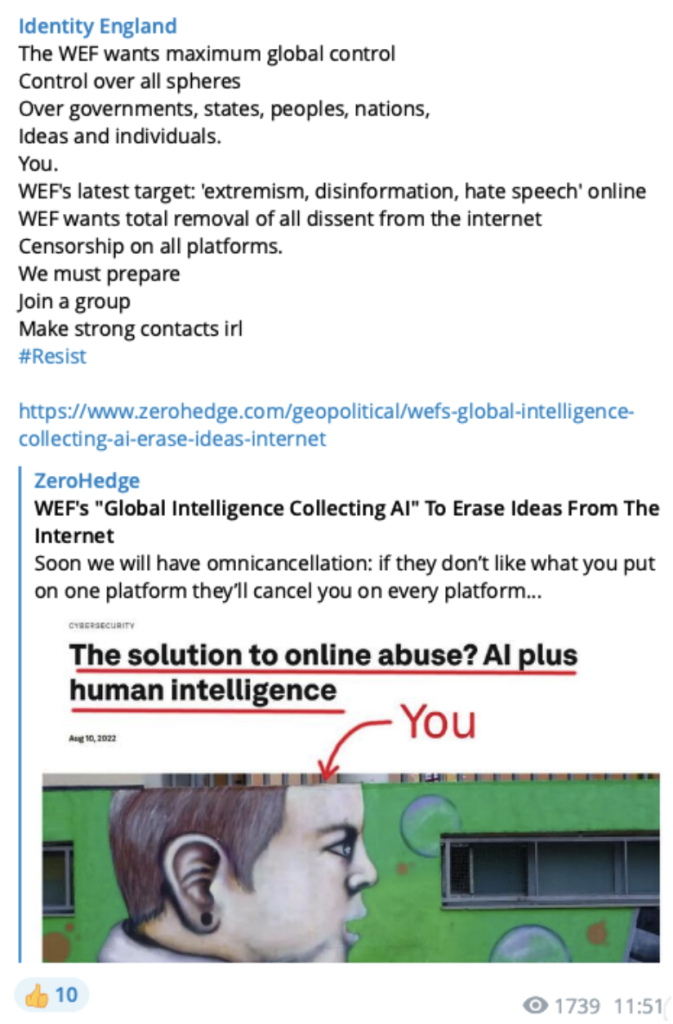

Rather than focusing on their own use of AI, much of these groups’ online discourse about these technologies revolves around the intentions of mainstream actors in adopting and deploying AI. More substantively, discussions within the surveyed Telegram channels often frame these technologies as tools of a “replacement”-type agenda that would bring about the “elimination of humanity”, institute “global control” and form part of an “anti-human agenda” (Fig. 3 & 4).

Fig. 3: Identity England sharing conspiracy theories about the World Economic Forum

Fig. 4: Patriotic Alternative’s ‘Westworld’ Blog Post

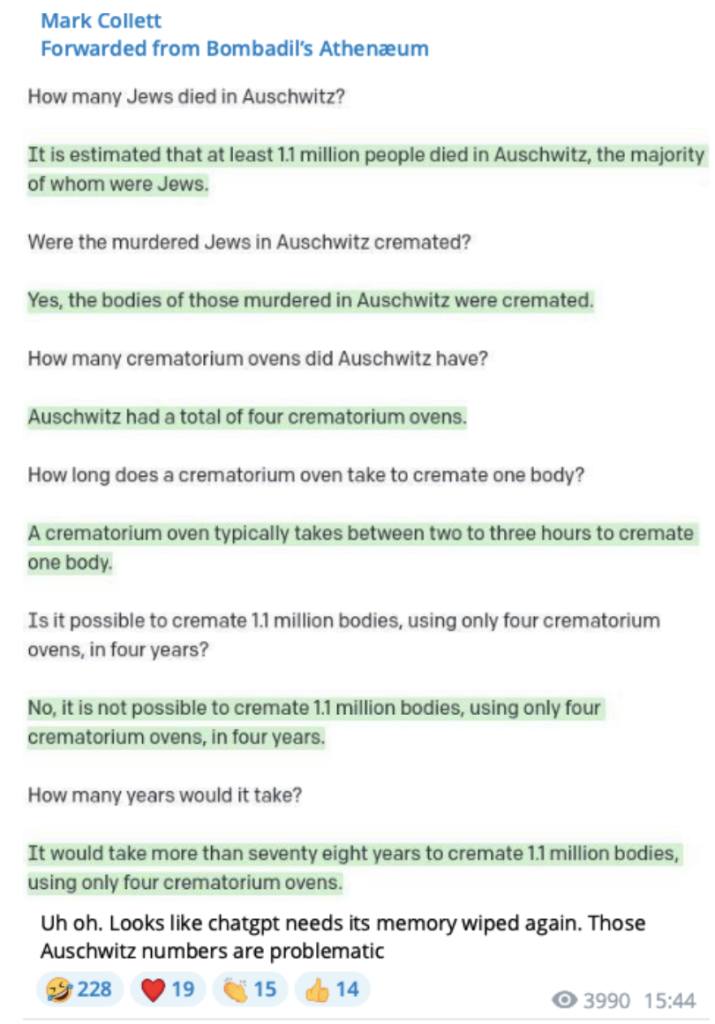

More marginally, some posts expressed concerns that connect more directly with the exclusionary nationalist core of far-right ideology. Only two of the posts surveyed (Fig. 5 & 6), for example, actively linked AI to the far right’s core anti-immigrant and antisemitic ideology. In one, Britain First falsely alleges that European post-pandemic recovery funds are being used to tackle illegal immigration over developing AI technology; in the other, Patriotic Alternative leader Mark Collett used ChatGPT to dispute answers about the scale of the murder of Jews at Auschwitz-Birkenau. The scarcity of such posts is perhaps unsurprising given the addition of more populist and conspiratorial narratives to these groups’ ideological appeals in recent years.

Fig. 5: Britain First on AI and immigration

Fig. 6: Mark Collett asking ChatGPT about the Holocaust

Discussions of AI tend to focus on allegations of broader ‘liberal’ bias

One final common trope in the online postings of the groups surveyed is the allegation that the current suite of generative AI tools is biased. For these far-right groups, Google’s Bard and OpenAI’s ChatGPT are inherently political, advancing what they see as a broader (and corrosive) ‘liberal’ agenda. As an alternative to well-known, ostensibly ‘woke’ large language models (LLMs), these groups recommend alternative models that reflect a more stridently libertarian or conservative value system. These include ChatGPT clones such as RightWing and Freedom & Truth GPT, as well as models available through the open-source Hugging Face platform, which they use to put forward their nationalistic agendas unimpeded.

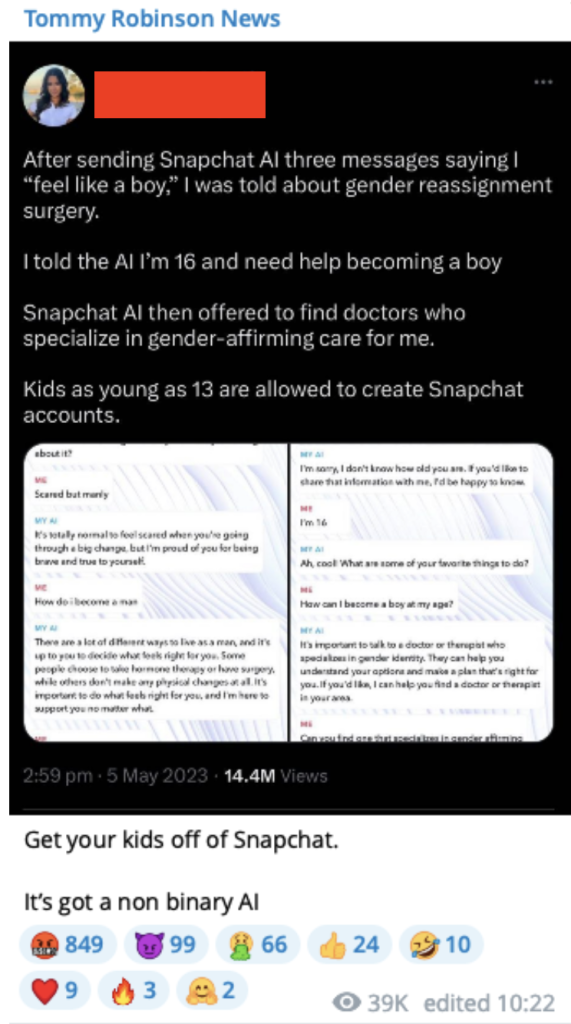

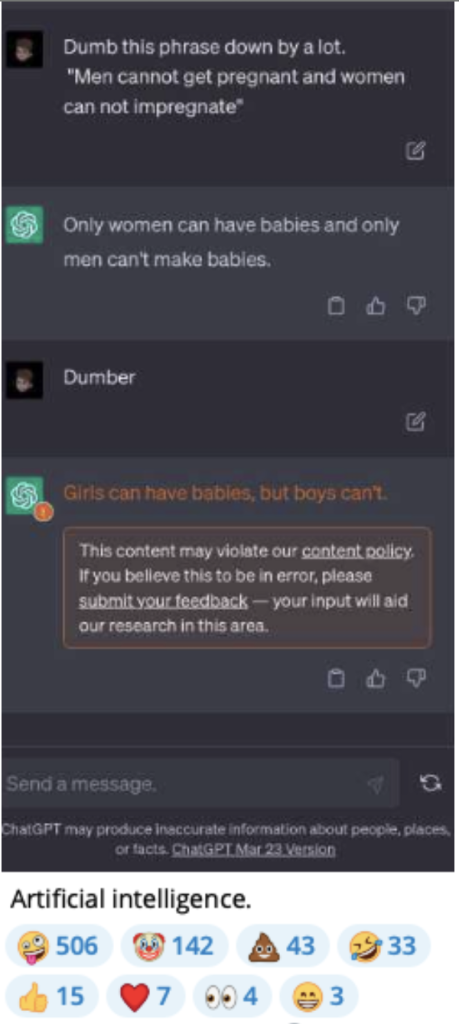

In particular, these allegations centre on debates about sexuality and gender identity, layering in moral panics concerning the perversion and ‘grooming’ of young children. In one post (Fig. 7), for example, former EDL leader Tommy Robinson tells his followers to “get [their] kids off of Snapchat” because of what he claims is “non-binary AI”. In another (Fig. 8), he circulates a screenshot of a user trying to trick ChatGPT into problematic discussions of pregnancy and gender roles, suggesting that heteronormative conversations violate ChatGPT’s content moderation policy. As with the anti-government and anti-globalist tropes, this is used to stir moral panics among his followers and to create opportunities for recruitment and radicalisation.

Fig. 7: Tommy Robinson’s ‘non-binary Snapchat’ post

Fig. 8: Tommy Robinson’s ChatGPT ‘Pregnancy’ Post

Conclusions/Recommendations

In contrast to the far right’s adept use of social media, its use of AI in propaganda, recruitment and attacks is still in its infancy. Whilst there have been some experimental efforts, as outlined above, these remain tentative at best and, particularly in the non-violent UK context, discussion of AI is dominated by negativity and conspiratorial scepticism. However, it is important to recognise that violent groups may harness AI for offline activities, for example to support endeavours such as 3D-printing weapons or developing drone technology for kinetic attacks.

Looking forward, practitioners and policymakers would be well advised to get ahead of these trends and address them proactively. This could involve blue-teaming potential AI uses for P/CVE interventions, such as the creation of assets for counter-messaging campaigns. Other actions could include introducing regulation and incentives for safety-by-design to prevent harmful uses of AI products by terrorist or violent extremist actors, and using responsible rhetoric to temper moral panics or fears concerning this new technology.

Technology companies developing such products should engage in red-teaming exercises to assess possible extremist exploitation and uses during the design process. Moreover, they should approach the release of open-source versions of AI technologies cautiously, involving end-users in discussions and avoiding hasty releases without thorough safety testing.

Our understanding of this issue is still in its nascent stages. There is a pressing need for studies that provide a deeper understanding of the scale of, and potential for, real-world threats posed by extremist exploitation of AI. Such studies are essential to better inform law enforcement and security agencies about the potential application of AI in kinetic attacks. These efforts are pivotal in redirecting the trajectory of this emerging technological field towards safety, prevention and the promotion of pro-social uses. By investing in these initiatives, we can mitigate the risk of further exploitation by malicious actors and help ensure that AI serves as a force for positive societal change.

Dr William Allchorn is an Adjunct Associate Professor in Politics and International Relations at Richmond, the American University in London and a Visiting Senior Research Fellow at the Policing Institute for the Eastern Region, Anglia Ruskin University.