Introduction

Israel’s war on Hamas has been making headlines worldwide since it began on 7 October 2023. However, individuals around the world consume news about the war not only via traditional media and news outlets, but often by browsing social media platforms.

Our monitoring of online far-right extremist channels has shown that these extremists use meticulous strategies to spread anti-Israel and antisemitic propaganda on mainstream social media platforms. Their goal is to normalise far-right ideologies and narratives, ultimately aiming to radicalise individuals across the ideological spectrum.

Far-right activists often exploit online discussions of current events to further their own agendas, with a primary aim of Red Pilling: radicalising various groups by inserting disguised far-right ideology into mainstream discourse. For instance, following the 2020 US presidential election, when supporters of candidate Donald Trump felt disenfranchised, far-right extremists capitalised on this and sought to radicalise them. Similarly, throughout the COVID-19 pandemic, they exploited the anti-vaccination movement to radicalise users and spread antisemitic conspiracy theories.

This Insight presents findings from the research we conducted at the Antisemitism & Global Far-Right Desk at the International Institute for Counter-Terrorism (ICT), titled “From Memes to Mainstream – How Far-right Extremists Weaponize AI to Spread Antisemitism and Radicalization”.

We present four tactics used by these extremists: (1) Narrowcasting, (2) Crafting Engagement, (3) Fake Accounts, and (4) Exploiting AI for Meme Creation.

Borderline content is a central facet of the strategies explored in this Insight. The strategies and discussions implicitly specify that the material being created and disseminated should not directly violate platform policies but still serve as a subversive means to deliver extremist beliefs in a palatable manner to wider audiences.

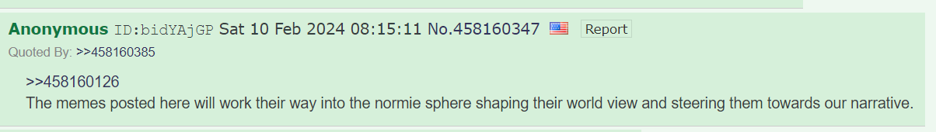

Fig. 1: User on 4chan explaining the logic of radicalisation through meme warfare and borderline content

The tactics explored below are deeply interconnected; they are neither mutually exclusive nor sequential.

The posts shown below often contain more than one of the tactics explored.

Tactic #1: Narrowcasting

Extremists sometimes employ a targeted approach on social networking platforms termed narrowcasting. The process involves tailoring content and messaging to specific segments of the population, attuned to their values, preferences, demographic attributes, or memberships. For example, one user on a neo-Nazi board strategised ways to target Black online spaces with relevant and palatable antisemitic conspiracies and rhetoric.

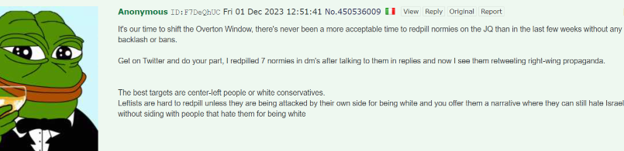

In a different case, a user claimed that “there’s never been a more acceptable time . . . than in the last few weeks” (referring to the Israel–Hamas war) to “redpill normies on the JQ [Jewish Question]”. The user encouraged others to target and red pill centre-left and white conservative users on X. This strategic exploitation of current political tensions to shift the Overton window demonstrates a deliberate effort to normalise antisemitism.

Fig. 2: User on 4chan calling on others to go on X and attempt to radicalise users

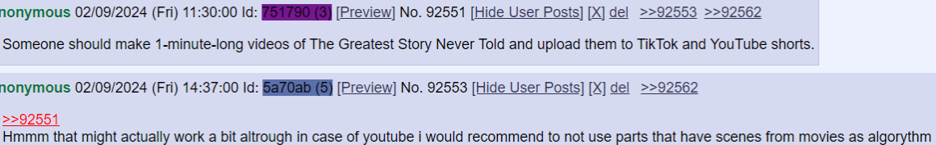

On one extremist imageboard, we observed a discussion about exploiting the divisive sentiments towards Israel amongst young demographics (Gen-Z) to plant antisemitic beliefs subversively. Users shared and discussed AI-generated antisemitic talking points and strategised dissemination tactics. One strategy discussed to reach the desired Gen-Z audience was creating one-minute clips cut from neo-Nazi propaganda films and sharing them on short video platforms like TikTok and YouTube Shorts.

Fig. 3: Users on a far-right board discussing distribution of clips from a neo-Nazi documentary to TikTok

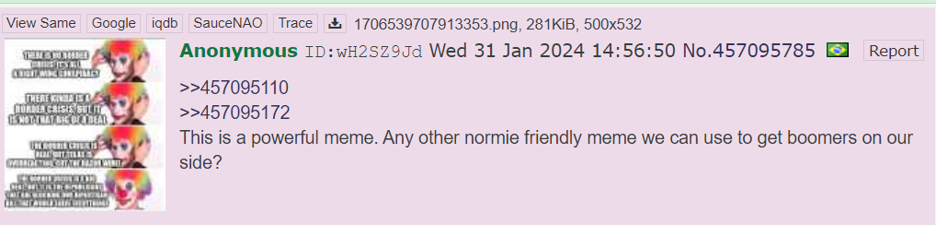

Some users explicitly strategise how to target age demographics, like the post below discussing effective memes for radicalising baby boomers, the generation of individuals born between World War II and the 1960s.

Fig. 4: User on 4chan discussing antisemitic memes made to radicalise “boomers”

Tactic #2: Crafting Engagement

Users are strategising ways to make their Red Pills more legitimate. One tactic is to drive and manufacture artificial engagement on their content.

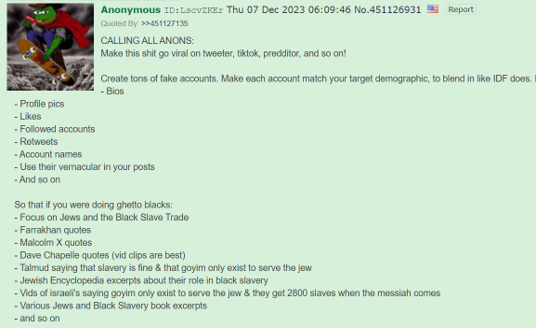

In a December 2023 post on 4chan, a user says that “the time is definitely ripe for the picking, and it’s a once-in-a-lifetime timing [sic].” The user explicitly outlines a strategy for creating fake accounts on platforms like X and TikTok to share red pills about Jewish people.

The user explains dissemination strategies to get high engagement, such as replying to highly followed accounts and using other fake accounts to boost the content. The user also emphasises tailoring each account to fit in with the intended audience and using content that appeals to the group, which would most effectively convince them of the veracity of the antisemitic messaging.

Fig. 5: User on 4chan outlining strategy to disseminate antisemitic content using fake accounts

Users encourage one another to boost their content using fake accounts on platforms like X and TikTok. This is explicitly done to spread red pills and boost engagement on content originating from far-right sources.

Fig. 6: Post on 4chan encouraging users to create accounts on TikTok and X to boost engagement on each other’s posts

Tactic #3: Fake Accounts

Users explicitly encourage creating fake accounts for the purposes of narrowcasting and crafting seemingly authentic engagement.

In the following post, a user shares a strategy for building convincing fake accounts on X, TikTok, and Reddit. The strategy instructs users on narrowcasting tactics with a tailored account and content strategy for the demographic being targeted. The post contains many antisemitic talking points to specifically target the Black community.

Fig. 7: User on 4chan outlining the strategy to disseminate antisemitic content among people of colour

Tactic #4: Exploiting AI for Meme Creation

Content creation is central to meme warfare. Far-right extremists have enthusiastically explored the potential for AI to streamline this process. Mainstream AI tools are consistently being exploited to create offensive and harmful content, and AI has become increasingly central to far-right influence campaigns.

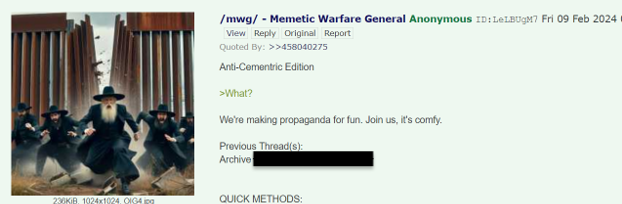

There is a frequently posted tutorial that explains how to use AI to generate images for the purpose of “memetic warfare”, claiming “if we can direct our autism and creativity towards our common causes, victory is assured” and encouraging users to share their generated images “beyond the site.” This acts as an explicit call to action to spread AI-generated images containing hateful imagery, which users perceive as an effective and persuasive means to radicalise others. This tutorial is a copy-paste that has appeared at least 500 times on 4chan boards as part of /mwg/ (Memetic Warfare General) threads, where users have been discussing and collaborating on AI-generated images, tactics, and strategies with specific antisemitic motivations.

Fig. 8: Tutorial on 4chan for generating antisemitic propaganda for the purpose of being spread. The tutorial encourages users to create images of antisemitic and anti-Black caricatures.

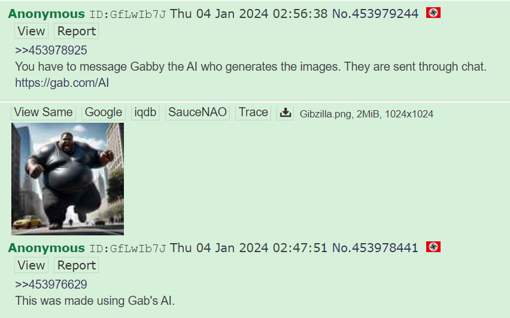

Far-right users are often frustrated with content restrictions on AI tools and seek “uncensored bots”. We have noted an increase in the availability of AI tools designed by and for the far-right communities on platforms such as Gab and 8chan.

Fig. 9: Users on 4chan discussing new image generation capabilities provided by Gab (4chan; 4 January 2023)

Users are also developing and using bespoke software for meme warfare. This is exemplified by tools like the “Meme Cannon”, which allows users to automate posting from X accounts at a rate of 1-2 tweets per minute.

Discussion on Legality, Moderation, and Safeguards

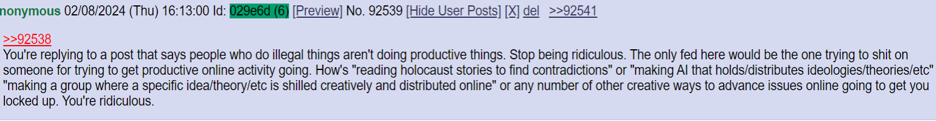

We observed many discussions by users concerned about the legality of certain meme warfare tactics. The goal of these discussions was often to understand what actions can be taken without legal repercussions.

Fig. 10: User on a far-right board arguing that the activities discussed in the thread are legal

Many users are also frustrated by moderation on social media platforms, where they often get banned for sharing extremist content. We observed many tutorials explaining how to bypass bans on social media platforms like X.

This need extends to generative AI (GAI) as well, with users explaining how to avoid account bans and even circumvent ID verification requirements. This is part of a larger trend we noted in our previous report, where far-right users strategise ways to bypass safeguards in mainstream AI tools in order to generate harmful content.

Fig. 11: Tutorial on 4chan with methods to bypass certain safeguards on Bing AI

Sometimes frustration with platform moderation leads users to shift their ideological dissemination to physical spaces, as seen in Fig. 12.

Fig. 12: Post on 4chan encouraging users to create hate speech in physical spaces with a photo of “DEATH TO ISRAEL” graffiti

This Insight reveals various strategies that are currently being used to effectively alter and muddy online discourse.

Individuals who consume borderline content are susceptible to processes of desensitisation and radicalisation. Repeated exposure to hateful content tends to desensitise individuals and may even lead to increased prejudice, particularly when presented in a seemingly acceptable and positive light. Non-Jewish individuals are also less likely to perceive harmful aspects of hateful antisemitic content. This amplifies the content’s effectiveness in normalising antisemitism. This desensitisation and radicalisation can lead individuals to seek out like-minded people, whether on online platforms or in the real world. Ultimately, the culmination of the online radicalisation process may result in violent acts of extremism in the real world.

Policymakers, institutions, and platforms must pay close attention to these strategies, as there is a clear intent to expand the proliferation of harmful content to mainstream audiences with the goal of radicalisation. Platforms need to enhance their moderation policies with informed and proactive measures. We express particular concern about mass layoffs that affect moderation, trust and safety teams, which are crucial in countering the continuously evolving and subversive tactics employed by extremists. Strengthening these teams is vital to effectively combat the spread of exploitative narratives.

Dr. Liram Koblentz-Stenzler is a senior researcher and head of the Antisemitism & Global Far-Right Extremism Desk at the International Institute for Counter-Terrorism (ICT) at Reichman University and a visiting fellow at Yale University.

Uri Klempner is a 2025 Schwarzman Scholar and Cyber Politics graduate student at Tel Aviv University. He currently leads media and strategy at Tech2Peace.