Abdullah Alrhmoun, Charlie Winter and János Kertész

Introduction

It’s well known that terrorists have been using the internet to plan operations, distribute propaganda, and coordinate sympathisers since its earliest days. As far back as the 1990s, the likes of Al Qaeda were using websites to get their message out and expand their following.

Over time, such groups were forced onto closed platforms, such as password-protected digital forums. As pressure from government security agencies increased, many subsequently migrated to social media platforms like Twitter and Facebook, and eventually to encrypted systems like WhatsApp and Telegram. Terrorists have mastered the ins and outs of digital communication and, in constant competition with law enforcement, seek to turn it to their own advantage.

While terrorist use of social media has been intensively researched in recent years, relatively little is known about how terrorist groups and their supporters have sought to harness the more advanced features of these platforms, such as bots, or other sophisticated, machine-based digital strategies (some closely associated with digital marketing techniques) to advance their objectives.

This Insight is a summary of our recent article for Terrorism and Political Violence, in which we used network science to shed light on one such example: how the Islamic State (IS) exploits bots on Telegram to coordinate sympathisers, curate their community, and amplify their propaganda.

Our findings indicate that IS employs bots as part of a deliberate and sophisticated strategy, in keeping with its reputation as a technologically forward-thinking group. Indeed, our conclusions suggest that the labour-saving power of bots has been instrumental in growing and managing its large online community of terrorist sympathisers.

Methodology

Telegram became a key forum for terrorist groups and their sympathiser networks following crackdowns on mainstream platforms like Twitter, especially from 2016 onwards. It should be noted that Telegram has taken meaningful steps to make the platform less hospitable to militants, engaging in strategic network disruption and other forms of moderation, occasionally to great effect. Furthermore, there is evidence that terrorist groups also use apps such as WhatsApp, Element and Hoop. Despite this, Telegram remains one of the more viable platforms for groups like IS, and was therefore the focus of this research.

By way of background, a bot is a piece of software that runs automated tasks on the internet, often with the intent of imitating human activity, such as messaging, on a large scale. On social media platforms like Telegram, bots usually manifest as automated accounts that publish or reshare content. On Telegram, anyone can create and manage a bot, and because bots automate repetitive work, they are ideal for labour-intensive, tedious tasks that need to be done at scale.

To explore how bots function in this context, we collected over 1.2 million Telegram posts from a sample of IS groups and channels and used several variants of community detection algorithms to map networks and sub-networks within the sample, as well as the bots within them. Community detection algorithms identify clusters of closely related users and groups based on shared subject matter and online behaviour. On Telegram, identifying the bot accounts on which to focus this analysis is relatively simple, because the platform requires bots to be identifiable as such in their account metadata.
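As a rough illustration of this kind of pipeline, the sketch below groups accounts into communities and then lists the bots in each. It is a minimal sketch under stated assumptions, not the study's code: the toy accounts and edges are hypothetical, the boolean bot flag stands in for the `is_bot` field that Telegram's Bot API exposes on `User` objects, edges are assumed to link accounts that interact (for example by posting in the same group), and the algorithm is a naive greedy modularity maximisation in the spirit of Clauset, Newman and Moore, chosen for brevity rather than taken from the article.

```python
from collections import defaultdict
from itertools import combinations


def greedy_modularity(edges):
    """Naive greedy modularity maximisation over an undirected edge list.

    Every node starts in its own community; the pair of communities whose merger
    most increases modularity is merged repeatedly until no merger helps.
    """
    edge_set = {tuple(sorted(e)) for e in edges if e[0] != e[1]}  # drop self-loops and duplicates
    m = len(edge_set)
    degree = defaultdict(int)
    for u, v in edge_set:
        degree[u] += 1
        degree[v] += 1
    communities = [frozenset([node]) for node in sorted(degree)]
    while True:
        best_gain, best_pair = 0.0, None
        for i, j in combinations(range(len(communities)), 2):
            a, b = communities[i], communities[j]
            links = sum(1 for u, v in edge_set if (u in a and v in b) or (u in b and v in a))
            if links == 0:
                continue  # merging unconnected communities never raises modularity
            d_a = sum(degree[n] for n in a)
            d_b = sum(degree[n] for n in b)
            # Modularity change from merging a and b: links/m - d_a*d_b/(2m^2)
            gain = links / m - d_a * d_b / (2 * m * m)
            if gain > best_gain:
                best_gain, best_pair = gain, (i, j)
        if best_pair is None:
            return communities
        i, j = best_pair
        merged = communities[i] | communities[j]
        communities = [c for k, c in enumerate(communities) if k not in (i, j)] + [merged]


# Hypothetical toy data: True marks accounts whose metadata flags them as bots.
accounts = {
    "news_bot": True, "alice": False, "bob": False,
    "links_bot": True, "carol": False, "dave": False,
}
edges = [
    ("news_bot", "alice"), ("news_bot", "bob"), ("alice", "bob"),
    ("links_bot", "carol"), ("links_bot", "dave"), ("carol", "dave"),
    ("bob", "carol"),  # a single bridge between the two clusters
]

for community in greedy_modularity(edges):
    bots = sorted(n for n in community if accounts[n])
    print(sorted(community), "bots:", bots)
```

The naive pair search recounts edges on every pass, so it only suits toy graphs; for a corpus of this size, an optimised implementation such as `greedy_modularity_communities` in networkx would be the practical choice.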

Findings

Analysis of the dataset suggests that bots play a systemic role in IS’s online communications ecosystem. Specifically, within the community examined, bots performed three distinct key functions. The first was to publish, promote and amplify IS content and propaganda, whether in the form of news updates, images, videos or articles. The second was to moderate discussions within Telegram channels and groups. The third was to administer groups, including admitting new members and blocking those who violated group policies.

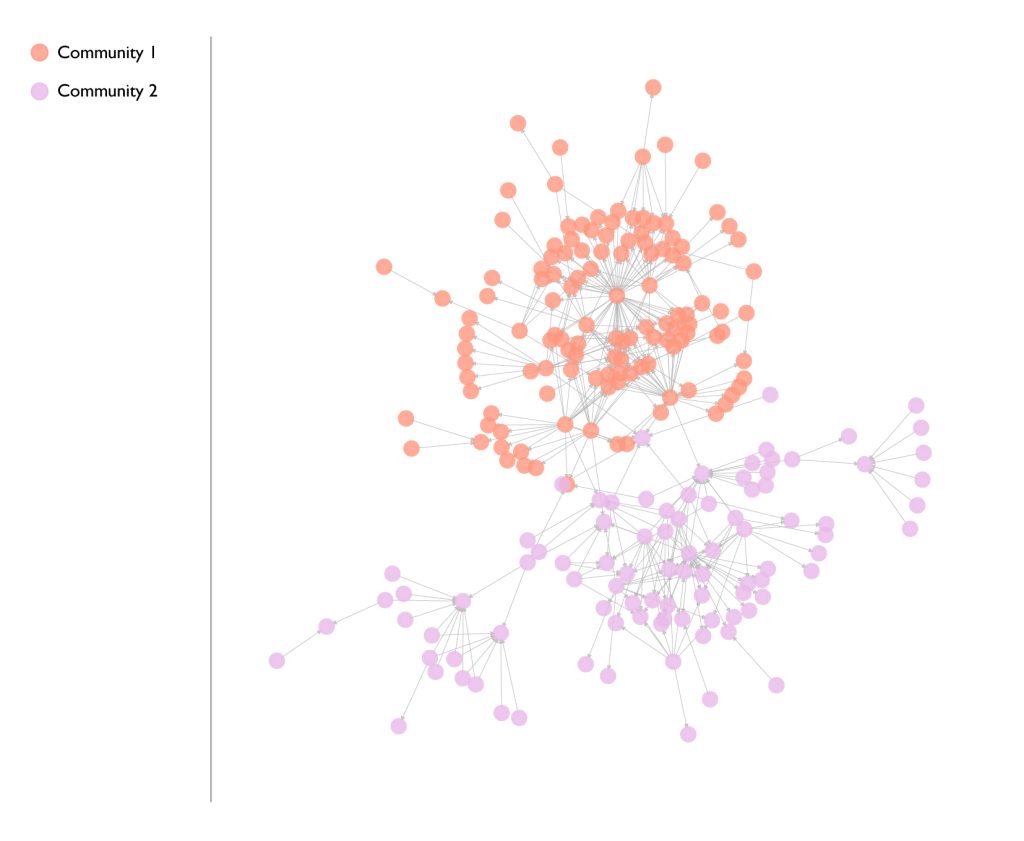

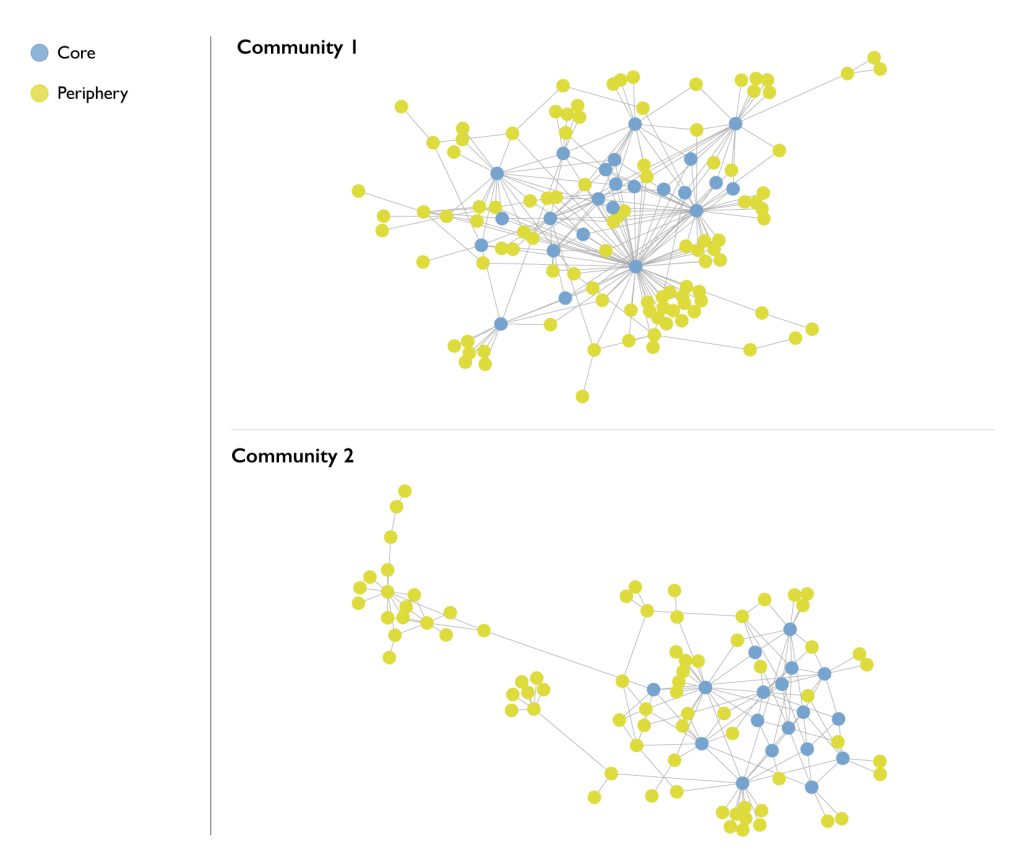

The diagrams below illustrate the placement of bots across IS’ networks as regulators of both users and content between sub-communities. They also illustrate the concentration of bots at the centre of the network – these are the ones that push content and propaganda from the network core to its periphery. Across the sample analysed, both sets of bots were found to play a significant role in content dissemination, with 106 bots posting some 39,211 messages and pieces of media content (images, videos etc) in more than six languages.

Figure 1: Visualisation of the two communities in the bots’ network.

Figure 2: Network visualisation that indicates the core and periphery nodes in both communities.
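The article does not say how the core and periphery in Figure 2 were delineated. One common way to draw such a split is k-core decomposition, sketched below under that assumption: a node's core number is the largest k for which it sits in a subgraph where every node has at least k neighbours, and nodes with the highest core number are treated here as the core. The toy graph and node names are hypothetical; networkx provides an optimised equivalent as `core_number`.

```python
from collections import defaultdict


def core_numbers(edges):
    """Return each node's k-core number via minimum-degree peeling.

    A node's core number is the largest k such that it belongs to a subgraph
    in which every node has at least k neighbours.
    """
    graph = defaultdict(set)
    for u, v in edges:
        if u != v:
            graph[u].add(v)
            graph[v].add(u)
    degree = {node: len(nbrs) for node, nbrs in graph.items()}
    remaining = set(graph)
    core, k = {}, 0
    while remaining:
        # Peel the node with the smallest remaining degree (name breaks ties).
        node = min(remaining, key=lambda n: (degree[n], n))
        k = max(k, degree[node])
        core[node] = k
        remaining.remove(node)
        for nbr in graph[node]:
            if nbr in remaining:
                degree[nbr] -= 1
    return core


# Hypothetical toy network: a densely linked hub of bots and one channel,
# plus peripheral users who each touch the hub once.
hub = ["hub_bot_1", "hub_bot_2", "hub_bot_3", "channel_a"]
edges = [(a, b) for i, a in enumerate(hub) for b in hub[i + 1:]]
edges += [("user_1", "hub_bot_1"), ("user_2", "hub_bot_2"), ("user_3", "channel_a")]

core = core_numbers(edges)
top_k = max(core.values())
core_nodes = sorted(n for n, c in core.items() if c == top_k)
periphery = sorted(n for n, c in core.items() if c < top_k)
print("core:", core_nodes)
print("periphery:", periphery)
```

Peeling by lowest remaining degree is simple and deterministic, but real core/periphery analyses often use richer models; treat this only as one plausible way to obtain a split like the one visualised.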

On average, the bots posted 176 messages each day (140 text, thirteen images, twelve PDFs, four video clips, and two audio files). For reasons that are not immediately clear, they were most active on August 2, 2021, when they collectively posted 1,431 messages. By contrast, on a not insignificant number of days they were almost completely inactive, posting just one message per day. In terms of the range of their activities, some bots were specialised while others were multifunctional. Most bots were associated with ‘general commentary’ channels and groups; of the eighty-six that operated in that context, there were twenty media amplification bots, twenty-four link-sharing bots, nineteen media activism bots, and seventeen news-posting bots.
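Figures such as a daily average, a peak day and a count of near-silent days come from straightforward aggregation of timestamped posts. The sketch below shows one way to compute them; the `(day, media_type)` record format and the choice to count zero-message days in the averages are assumptions, since the article does not state exactly how its averages were calculated.

```python
from collections import Counter
from datetime import date, timedelta


def daily_stats(messages, start, end):
    """Summarise per-day bot output over the inclusive window [start, end].

    `messages` is an iterable of (day, media_type) pairs, a hypothetical schema.
    Days without any messages count as zero, so they lower the averages.
    """
    in_window = [(day, kind) for day, kind in messages if start <= day <= end]
    n_days = (end - start).days + 1
    per_day = Counter(day for day, _ in in_window)
    by_type = Counter(kind for _, kind in in_window)
    # Earliest date wins ties because max() keeps the first maximum it sees.
    peak_day = max(sorted(per_day), key=per_day.__getitem__) if per_day else None
    quiet_days = sum(
        1 for i in range(n_days) if per_day[start + timedelta(days=i)] <= 1
    )
    return {
        "mean_per_day": len(in_window) / n_days,
        "mean_by_type": {kind: count / n_days for kind, count in by_type.items()},
        "peak_day": peak_day,
        "peak_count": per_day[peak_day] if peak_day is not None else 0,
        "quiet_days": quiet_days,  # days with at most one message
    }


# Hypothetical three-day sample.
msgs = [
    (date(2021, 8, 1), "text"), (date(2021, 8, 1), "text"),
    (date(2021, 8, 2), "text"), (date(2021, 8, 2), "text"),
    (date(2021, 8, 2), "text"), (date(2021, 8, 2), "image"),
]
stats = daily_stats(msgs, date(2021, 8, 1), date(2021, 8, 3))
print(stats)
```

Whether silent days are included changes the average considerably for bursty accounts like these, so any reported daily mean should state which convention it uses.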

For IS, then, bots are an important enabler of its digital media strategy, whether in terms of automating the administration of its sympathiser community or amplifying the reach of its propaganda and influence campaigns. Bots effectively stand in for human operatives online; they are able to connect sympathisers together based on common ideology, all while minimising the exposure of, and thus risks to, IS operatives themselves. Early investigations by the authors suggest that other violent extremist groups have adopted similar approaches.

Conclusion

Demonstrably, the network analysed here was not organised by a single person or controlling authority; rather, it formed incrementally, at least partly in a self-organised manner, through the activity of loosely connected groups of IS supporters. This mirrors the broader decentralised character of IS influence activities. This quality has been cultivated because it makes the ecosystem exceptionally difficult to locate and dismantle in a sustained and effective manner.

Because anyone on Telegram can create and manage a bot, users can deploy bots to do most of what human users can do: post content, receive messages, manage groups, provide services, and even accept payments. As with many digital capabilities, this makes bots a double-edged sword. They can automate tedious work and improve efficiency for legitimate users, but malign organisations can derive the same benefits. Both government security agencies and social media platforms should take note and explore what more can be done to limit the use of bots by IS and other terrorist groups.

It is important to keep in mind, and even to foreground, that this is not a problem for Telegram alone. Telegram is one of many platforms used by malign actors today, and in recent years it has proven to be one of the most forward-thinking in terms of strategic network disruption, something that should be commended. Moreover, we must bear in mind that bots and other advanced social media capabilities are used, in the vast majority of cases, for benign or entirely positive ends. For that reason, efforts to mitigate their malicious use must be more sophisticated than blunt disruption activities or calls for the functionality to be disabled wholesale. Instead, it is critical that interventions are scoped to the specific problem they aim to address, research-led and, above all, accurately targeted.

Abdullah Alrhmoun is a PhD candidate in network science at the Department of Network and Data Science at Central European University.

Charlie Winter is Director of Research at the threat intelligence platform ExTrac AI.

János Kertész is the head of the Department of Network and Data Science at Central European University.