As ISIS established its caliphate in 2014, its supporters flooded mainstream social media platforms to share propaganda, plan attacks, and recruit future members. Realising the substantial threat posed by these online jihadists, social media companies developed new tools to clamp down on ISIS supporters, largely pushing them out of the mainstream and onto alt-tech platforms or encrypted messaging apps like Rocket.Chat and Telegram. Unfortunately, in more recent years, ISIS supporters have adapted and used new tactics to seep back onto mainstream platforms.

The re-emergence of pro-ISIS accounts on mainstream platforms poses a significant security risk. Many of the tools that were developed to detect and remove pro-ISIS accounts in the past are no longer sufficient to counter their evolving evasion tactics. Thousands of pro-ISIS accounts have developed new ways to operate relatively freely on mainstream platforms while remaining undetected. Over the course of a nine-month research project, the author found that more than 50% of the pro-ISIS accounts on mainstream platforms that were identified at the beginning of the study remained online and active throughout.

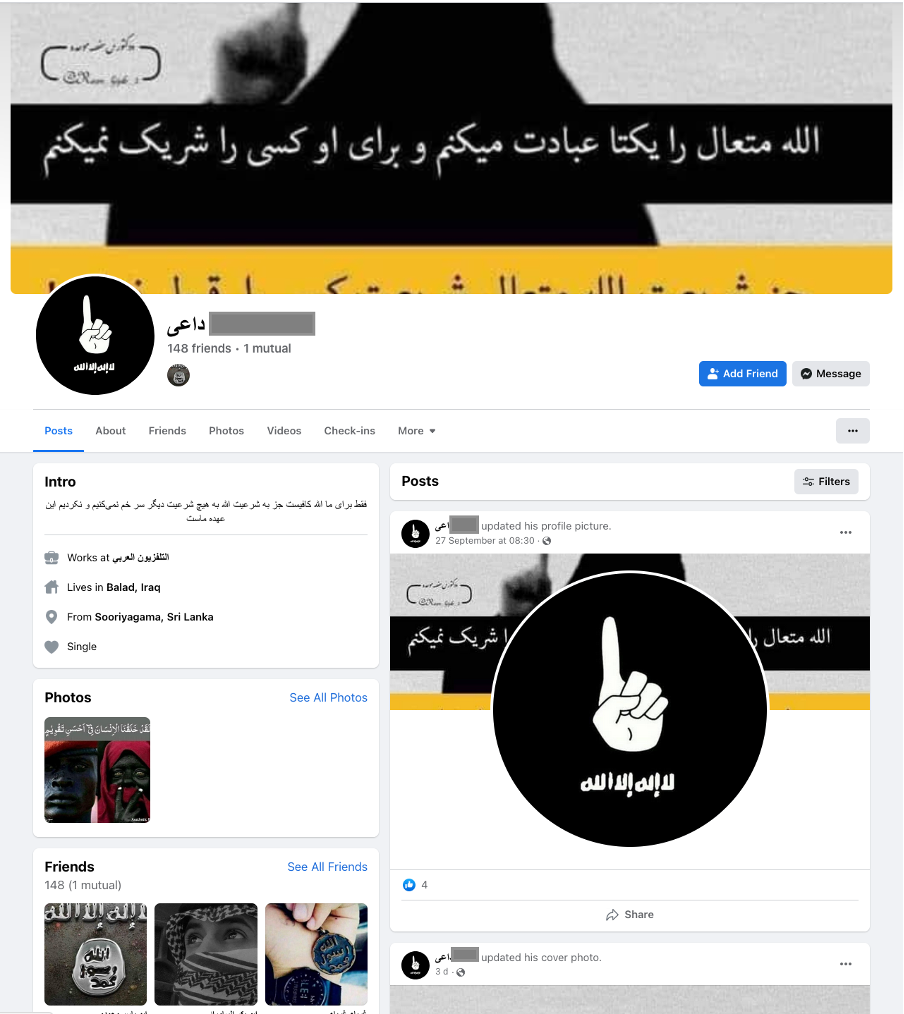

By re-engaging on mainstream platforms, pro-ISIS accounts have been able to communicate with a much broader audience than would be possible on alt-tech applications. The new ecosystem of pro-ISIS accounts is also more decentralised and post-organisational than the wave of online extremism seen during the caliphate years from 2014 to 2019. Nearly all of the users sharing pro-ISIS content on mainstream platforms today are individual accounts tied together in loose networks, rather than official channels representing the group. In this new online landscape, ISIS supporters are careful not to portray themselves as official ISIS outlets, as this would see them swiftly banned. Instead, ISIS supporters use recognisable markers of support or sympathy for the group which are harder to detect but are still effective for signalling their sentiments to other like-minded users. For example, these accounts may display a heavily obscured image of the ISIS flag on their profile, list their school or workplace as Dawlah Islamiya (Islamic State), or share quotes from well-known Jihadist leaders. These carefully selected qualitative markers quickly convey extremist sentiments to those with contextual understanding, but they also make it difficult for automated content moderation algorithms to reliably detect this content at scale.

To understand the new threat, this Insight highlights six of the most widely used evasion methods that online Jihadists have developed to seep back onto mainstream platforms, and discusses some of the countermeasures that can be used to limit them.

Image Obscuration

One of the most widely used methods to evade automated content detection on mainstream platforms is image obscuration – a relatively low-tech evasion tactic that involves cropping, filtering, or otherwise editing well-known terrorist content, such as the ISIS flag, before posting. In some cases, pro-ISIS accounts will ‘mark up’ or ‘scribble’ on extremist content using simple image editing software widely available on smartphones. This scribbling method involves pen marks, doodles, or digital paint tools which make it difficult for algorithms to identify and categorise the contents of the image or post. The random and user-generated nature of these scribblings makes it difficult to identify patterns and poses a challenge for most automated content detection systems. More recently, pro-ISIS accounts have experimented with image duplication which replicates all or part of the terrorist symbol many times to make the content appear as a new image, which is difficult to detect at scale.

Overlaying is also increasingly common; by overlaying terrorist content with emojis, watermarks, and banal images such as flowers or minarets, these accounts often circumvent detection algorithms. Similarly, pro-ISIS accounts overlay terrorist content with logos from news agencies, making it difficult for algorithms to categorise or disambiguate this content from legitimate sources. Pro-ISIS accounts have overlaid an ISIS video release with the official BBC News logo, making it challenging to determine whether the content in question is a credible news report about the group or a piece of terrorist propaganda. This challenge is complicated as legitimate news agencies often include short clips of ISIS video releases in their reporting for illustrative purposes. As such, if the parameters of automated content moderation tools are widened too much in detecting overlaying efforts, it increases the likelihood of false positives and the risk that legitimate news stories could be flagged and removed.

These image obscuration methods are only the latest moves in the ongoing contest between detection and evasion. By digitally editing and altering the appearance of these markers, ISIS supporters circumvent many of the technologies and knowledge-sharing tools designed to detect and remove them. As the tactics of online extremists continue to evolve, automated detection tools must also keep pace.

Inspirational Quotes & Scripture

Pro-ISIS accounts will also eschew well-known symbols and instead use inspirational quotes, infographics, or cherry-picked passages of scripture to signify their support for the group. The most common of these include stylised inspirational quotes taken from famous Jihadists like Abu Musab al-Zarqawi, Anwar al-Awlaki, and even Abdullah Azzam. ISIS supporters use out-of-context quotations taken from the writings of controversial historical imams and muhaddith (religious leaders and scholars of hadith), such as Ibn Taymiyyah, which provide some of the intellectual and theological underpinnings for Salafi-Jihadism.

Although Ibn Taymiyyah and scholars like him are quoted by Jihadist-Salafists, their writings cover a broad range of Fiqh (Islamic Jurisprudence), so it does not follow that everyone who quotes Ibn Taymiyyah subscribes to Jihadi-Salafism. In these cases, without background knowledge, context, or thoughtful assessment of the sentiments expressed, automated detection tools struggle to disambiguate extremist content from non-extremist content. Similar to the far-right, the inspirational quotes used by Jihadist-Salafist accounts may not directly discuss political violence, instead focussing on mental health, natural health cures, life advice, and cultural issues important to the movement, which makes these accounts even more difficult to detect without contextual background. Taken together though, these quotes and symbols act as shibboleths that allow ISIS supporters to find and connect with like-minded users, while still flying below the radar of automated content moderation.

Extremist Symbols

ISIS supporters on mainstream social media use well-known symbols, hand gestures, and even emojis to indicate affiliation and support for the group. The most common of these symbols is an image of a right-hand index finger pointed upwards to signify their twisted interpretation of tawhid (or the Oneness of God). While tawhid is the core belief of the broader religion, a tiny minority of Salafist-Jihadists have misinterpreted it and tried to hijack its meaning for their own political purposes. As Nathaniel Zelinsky writes in Foreign Affairs, the Salafist-Jihadist interpretation of tawhid is used to broadcast their black-and-white view of the world which places their creed and manhaj (methodology) as the only true path, stamping out religious pluralism, and predicting their future victory. The upward-pointed index finger seen on many pro-ISIS accounts is a digital manifestation of this twisted interpretation. As such, when pro-ISIS accounts display this simple hand gesture on social media, they are telegraphing their belief in and support for the Jihadist cause in a very public way, but one which is largely undetectable to automated content moderation tools that lack contextual understanding. The hijacking of symbols and hand gestures has similarly been practised by far-right extremists who have co-opted the ‘OK hand sign’ to signal their sentiments.

These symbols and hand gestures are often paired with other well-known markers, including quotes by famous Jihadist leaders, images of knights on horseback or Daesh coins minted during the caliphate years. By triangulating these different secondary indicators of extremist sentiment, automated content detection systems can better detect pro-ISIS accounts. Something as commonplace and benign as an upward-pointed index finger cannot be used by automated content detection systems to identify extremism, but when paired and layered with the other secondary indicators discussed above, it can be useful in detecting pro-ISIS accounts reliably.
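The layering of secondary indicators described above can be sketched as a simple scoring rule. This is a minimal illustration only: the indicator names, point weights, and thresholds below are hypothetical and are not drawn from any platform's actual system. It shows how a single weak signal, such as a raised index finger, never triggers action on its own, while several indicators together can route an account to human review.

```python
# Hypothetical indicator names and point weights, chosen only for illustration.
INDICATOR_WEIGHTS = {
    "raised_index_finger_image": 15,  # commonplace and usually benign on its own
    "jihadist_leader_quote": 35,
    "caliphate_coin_image": 30,
    "obscured_flag_image": 45,
}
REVIEW_THRESHOLD = 60        # assumed cut-off, out of 100
MIN_DISTINCT_INDICATORS = 2  # never act on a single indicator


def observed_indicators(indicators):
    """Keep only the distinct indicators that appear in the weight table."""
    return set(indicators).intersection(INDICATOR_WEIGHTS)


def score_account(indicators):
    """Sum the weights of the distinct indicators observed, capped at 100."""
    distinct = observed_indicators(indicators)
    return min(100, sum(INDICATOR_WEIGHTS[name] for name in distinct))


def needs_review(indicators):
    """Route to human review only when several indicators co-occur."""
    distinct = observed_indicators(indicators)
    return (
        len(distinct) >= MIN_DISTINCT_INDICATORS
        and score_account(distinct) >= REVIEW_THRESHOLD
    )


if __name__ == "__main__":
    print(needs_review(["raised_index_finger_image"]))
    print(needs_review([
        "raised_index_finger_image",
        "jihadist_leader_quote",
        "caliphate_coin_image",
    ]))
```

In this sketch the output is a referral to a human reviewer rather than an automatic removal, reflecting the article's point that contextual judgement is still needed.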

Network Tagging

Online extremists feed off each other, encouraging and amplifying the voices of their peers through mainstream platforms. To maximise the benefits of these networks, pro-ISIS accounts follow or connect with thousands of individual users who can then view, save, and share their extremist content. While official pages or channels spreading pro-ISIS content are removed, large networks of individual users can quickly amass thousands of connections. For instance, on the Meta-owned platform Facebook, many pro-ISIS accounts were found to have maxed out the 5000 ‘friend connections’ allowed by the company. The thousands of ‘friend connections’ by pro-ISIS accounts on this platform are large enough to match or even exceed the audiences of many official ISIS channels found on encrypted alt-tech platforms.

Pro-ISIS accounts on mainstream platforms also use the tagging function on posts to tag or link to nearly 100 similar accounts. This mass-tagging tactic helps to amplify extremist content and allows new audiences to find other pro-ISIS accounts. Prominent accounts act as hubs that recommend the profiles of other pro-ISIS accounts that should be followed. The ‘suggested friends’ function on many platforms further helps new users find pro-ISIS accounts with algorithmic precision. While these tagging and network methods help online extremists to spread extremist content on mainstream platforms, they can also be used to identify other ISIS supporter accounts. Mutual connections can serve as a secondary indicator that helps automated content moderation tools to identify clusters of accounts that are likely ISIS-affiliated and remove them.
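As a rough sketch of how mutual connections might work as a secondary indicator, the example below counts how many of an account's connections are already flagged. The function name, the account identifiers, and the two thresholds are all assumptions made for illustration. A real system would combine this signal with others before acting.

```python
def flag_by_mutual_connections(connections, known_flagged, min_links=3, min_share=0.5):
    """Return accounts whose ties to already-flagged accounts are numerous
    and make up a large share of their connections.

    connections: dict mapping an account id to the set of ids it is connected to.
    known_flagged: set of account ids already confirmed by reviewers.
    min_links, min_share: hypothetical thresholds, to be tuned on real data.
    """
    candidates = {}
    for account, neighbours in connections.items():
        if account in known_flagged or not neighbours:
            continue
        links = len(neighbours & known_flagged)
        share = links / len(neighbours)
        if links >= min_links and share >= min_share:
            candidates[account] = (links, share)
    return candidates


if __name__ == "__main__":
    known = {"seed_1", "seed_2", "seed_3"}
    graph = {
        "acct_a": {"seed_1", "seed_2", "seed_3", "acct_x"},  # three of four ties flagged
        "acct_b": {"seed_1", "acct_x", "acct_y", "acct_z"},  # a single flagged tie
        "seed_1": {"seed_2", "seed_3"},                      # already known, skipped
    }
    print(flag_by_mutual_connections(graph, known))
```

Requiring both a minimum count and a minimum share is a deliberate choice in this sketch: it avoids surfacing researchers, journalists, or very large accounts that happen to have a handful of flagged connections among thousands.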

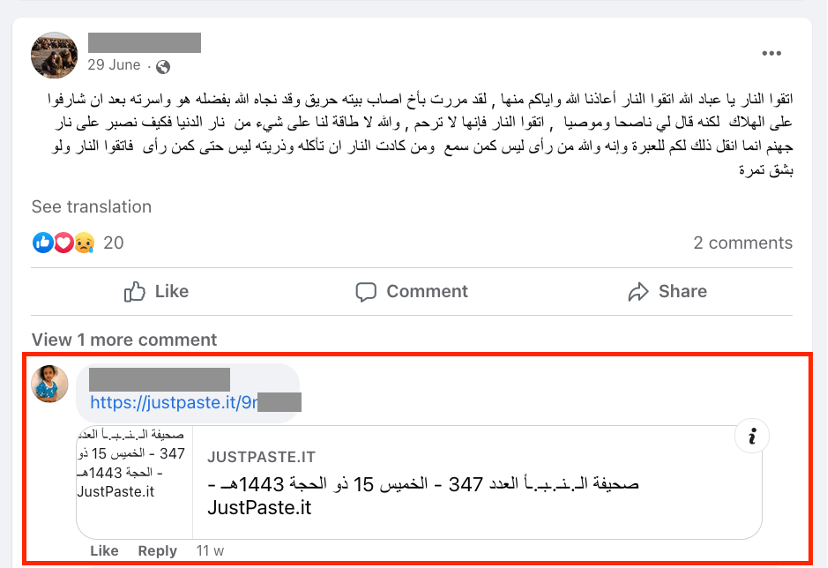

Outlinking

Outlinking is another important evasion tactic used by extremists on mainstream platforms today. While accounts may not be able to host official ISIS publications such as the al-Naba newspaper on mainstream platforms, they frequently share outlinks to external websites or alt-tech platforms where this content is uploaded directly. Many of the pro-ISIS accounts on mainstream platforms also frequently outlink to encrypted platforms such as Telegram or Rocket.Chat, where users chat, share large media files, and plan attacks. As such, the profiles of pro-ISIS accounts on mainstream social media serve as a gateway and landing page which directs users to more explicit extremist content. Up-to-date outlinks are often difficult for supporters to reliably find, and pro-ISIS accounts on mainstream platforms act as important conduits for newcomers to the movement who are deepening their engagement. Fortunately, greater use of URL and hash-sharing can significantly curb outlinking attempts.
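One way URL hash-sharing could work in principle is sketched below: each platform normalises an outlink, hashes it, and compares the digest against a shared list, so that the list can be exchanged without circulating the raw links themselves. The normalisation rules, function names, and the `example.org` address are assumptions for illustration, not a description of any existing hash-sharing scheme.

```python
import hashlib
from urllib.parse import urlsplit, urlunsplit


def normalise_url(url):
    """Reduce trivial variations so the same destination hashes identically.

    Assumed rules: lower-case scheme and host, drop the fragment,
    and strip trailing slashes from the path.
    """
    parts = urlsplit(url.strip())
    host = parts.hostname or ""  # urlsplit already lower-cases the hostname
    if parts.port:
        host = f"{host}:{parts.port}"
    path = parts.path.rstrip("/")
    return urlunsplit((parts.scheme.lower(), host, path, parts.query, ""))


def url_digest(url):
    """SHA-256 digest of the normalised URL, suitable for a shared list."""
    return hashlib.sha256(normalise_url(url).encode("utf-8")).hexdigest()


def is_listed(url, shared_digests):
    """Check an outlink against digests contributed by other platforms."""
    return url_digest(url) in shared_digests


if __name__ == "__main__":
    # Placeholder entry standing in for a digest received from a partner.
    shared = {url_digest("https://example.org/channel")}
    print(is_listed("HTTPS://Example.org/channel/#latest", shared))
    print(is_listed("https://example.org/other", shared))
```

Exact hashing of this kind only catches links that differ superficially. Link shorteners, redirects, and freshly registered domains would need additional handling, which is one reason outlinks require continual monitoring.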

Leet Speak and Broken Text Posting

Leet speak, a system of modified spellings and characters, and broken text posting are further widely used evasion tactics among online extremists. Pro-ISIS accounts using leet speak and broken text posting mix different languages, spacing, punctuation, and emojis to throw off automated content moderation systems. While social media firms have dealt with leet speak and broken text posting for years, the methods used are constantly changing and shifting as new workarounds and modified spellings are developed. Although the technology exists to detect and remove this type of text content, analysts, researchers, engineers, policymakers and NGOs must rely more on subject matter experts who can identify new trends in the space. These proactive findings can then inform the development of more up-to-date and responsive leet speak dictionaries which can be used to better train machine learning tools that search for this content at scale. While this approach would require greater human resources in the short term, it will pay significant dividends in reducing the amount of extremist content that makes it through.
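To make the idea of a leet speak dictionary concrete, the sketch below normalises a post before matching it against a watchlist. The character map and the single watchlist entry (the "Dawlah Islamiya" marker mentioned earlier) are illustrative assumptions. In practice, subject matter experts would maintain and regularly update both. The sketch only handles Latin-script text, whereas real posts mix languages and scripts.

```python
import re
import unicodedata

# Hypothetical substitution dictionary, to be extended as new variants appear.
LEET_MAP = str.maketrans({
    "4": "a", "@": "a", "3": "e", "1": "i", "!": "i",
    "0": "o", "5": "s", "$": "s", "7": "t",
})

# Watchlist entries are stored in already-normalised form.
WATCHLIST = ["dawlahislamiya"]


def normalise(text):
    """Fold look-alike characters, then drop everything except a-z.

    NFKC folds stylised Unicode forms (such as full-width letters) to plain
    ones; removing non-letters undoes spacing, punctuation, and emoji padding.
    """
    folded = unicodedata.normalize("NFKC", text).lower().translate(LEET_MAP)
    return re.sub(r"[^a-z]+", "", folded)


def find_terms(text):
    """Return the watchlist entries found in the normalised text."""
    cleaned = normalise(text)
    return [term for term in WATCHLIST if term in cleaned]


if __name__ == "__main__":
    print(find_terms("D4wl@h  1sl4m!y4"))
    print(find_terms("d.a.w.l.a.h i-s-l-a-m-i-y-a"))
    print(find_terms("hello world"))
```

Because this sketch removes all spacing, short watchlist terms could match across word boundaries in innocent text, so matches are best treated as one secondary indicator for review rather than grounds for removal.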

Countermeasures

While extremists will continue to evolve and adapt, many of the tools and strategies needed to counter them already exist. Artificial intelligence will remain critical to detecting extremist content and disinformation at scale, but greater use of human experts can improve the accuracy and responsiveness of these systems. Researchers who proactively identify and track trends in the online extremist landscape can help better train automated content moderation systems on secondary indicators of extremism and keep them up-to-date on evasion tactics.

Monitoring the evasion tactics of Jihadist-Salafist extremists remains a significantly more difficult challenge than identifying those of the far-right, as analysts and Western governments lack the deep cultural familiarity and knowledge of foreign languages needed to understand Jihadist-Salafist extremism. However, the evasion tactics used by both Jihadist-Salafist and far-right extremists often mirror each other; monitoring both can provide valuable lessons for accurately identifying online extremists of any variety. With greater use of experts and layering up-to-date secondary indicators, we can speed up our response to these evasion tactics and swiftly identify extremist accounts both accurately and at scale.

The evasion tactics discussed above are not exhaustive, but they highlight how online extremists are constantly adapting and evolving to circumvent online detection. This cat-and-mouse game will likely involve further adversarial shifts as tech platforms close one pathway and online extremists create another. We should be prepared for these constantly changing dynamics in the post-organisational extremist landscape where networks of unofficial extremist supporters proliferate and innovate rapidly.