The last four to five years have seen the “Make America Great Again” (MAGA) pro-Trump movement grow more visible and become increasingly associated with far-right extremism. This became particularly evident as the 6 January insurrection at the US Capitol unfolded. MAGA imagery, displayed amongst Violent Far-Right Extremist (VFRE) groups such as the Proud Boys, Oath Keepers, and Three Percenters (among others), remained front and centre as the crowd stormed the Capitol building. Prior to 6 January, these groups and individuals maintained an active presence on multiple online platforms, planning and chatting about how they hoped the event would unfold. Although they were active on mainstream platforms such as Twitter and Facebook, some of the busier platforms were more fringe spaces such as Telegram, Parler, Gab, and MeWe. During the insurrection, Thomas Caldwell, a member of the Oath Keepers, received Facebook messages updating him on the whereabouts of lawmakers and, after he posted that he was inside the building, he began receiving specific directions. Many questions remain regarding the exact degree of planning and direction that took place on social media before and during the storming of the Capitol, but as investigations continue, the available information seems to indicate the existence of concrete coordination efforts alongside the already widely known general calls for violence leading up to 6 January.

This article will explore chatter about and responses to the insurrection on the platforms of MeWe and Telegram. It will also consider the implications of current deplatforming efforts and provide policy recommendations followed by insights from one of the authors, Brad Galloway, who is a former far-right extremist.

MeWe

Telegram has received much attention in the news following Twitter’s permanent suspension of President Donald Trump’s account and the deplatforming of Parler accounts. However, another social media platform, MeWe, moved from not even placing in the top 1,000 downloaded apps to number 12 in US rankings. Prior to the insurrection and the current increase in popularity, MeWe had already gained a diverse following across numerous communities, including Trump supporters, QAnon proponents, militia groups, and white supremacists. A further influx of new users arrived after Facebook began removing “Stop the Steal” groups from its platform in November of 2020, and the company recently announced that it is completely banning the phrase “stop the steal.” In response, deplatformed Facebook users searched for an alternative platform and have found a more stable home on MeWe.

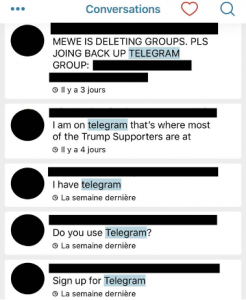

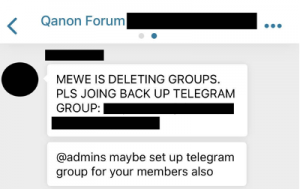

Leading up to the election and the 6 January insurrection, users continued sharing disinformation, misinformation, conspiracy theories, and prepper tips, and also discussed coordinating in-person gatherings. Although MeWe stated on Twitter that it is not removing “Stop The Steal” content unless it violates its terms of service (TOS), MeWe users posted complaints about the disappearance of Stop the Steal and militia channels and groups. In MeWe chats, users are arranging Telegram contingency plans. Per the authors’ observations, numerous local militia groups (where the individuals know each other in real life) and QAnon chat admins shared Telegram links to private, invite-only back-up groups to continue communications in case of a MeWe ban.

MeWe users who are unfamiliar with Telegram are asking about the app, and these new users are now funneling into a space where more extreme rhetoric remains on display and largely unchecked. As Amarnath Amarasingam explains, “It’s (Telegram) not simply pro-Trump content…it’s openly antisemitic, violent, bomb making materials…people coming to Telegram may be in for a surprise in that sense.” In addition, extremist Telegram communities are providing guides for novice Telegram users on how to secure their accounts by implementing operational security (OPSEC) measures to avoid tracking by journalists, researchers, and law enforcement.

Telegram

Although the presence of the extreme right and white supremacists on Telegram is not new, the app saw a 146% increase in Apple Store downloads during the week of the insurrection. The platform’s user base has undoubtedly ballooned and, in response, the more hardcore white supremacist elements are developing strategies to radicalise deplatformed Trump supporters. Telegram recently banned a handful of neo-Nazi channels, but many of these channels have created back-ups and the majority of harmful content remains undisturbed. It would be wildly inaccurate to suppose that these bans have had any real impact on the intricate extreme right/white supremacist ecosystem. Unless a comprehensive campaign is employed, similar to the November 2019 Europol campaign to remove Islamic State content and pro-IS users from the platform, these efforts will barely make a dent. This is not to suggest that a campaign of that nature would be a fix-all solution or that it could effectively be employed, given important differences between deplatforming far-right/white supremacist content and IS content (as will be discussed in the following section). Instead, the authors wish to emphasise the futile nature of a half-hearted strategy that aims to remove a handful of channels every so often, as these channels are quickly re-establishing their subscriber numbers and planning ahead by creating back-ups.

In terms of content, the following are some of many major themes that emerged on Telegram during the insurrection and the following day:

- Expressing anti-Trump and anti-GOP sentiments

- Espousing anti-police narratives and declaring that the police are not on the side of the people

- Hailing Ashli Babbitt as a martyr of the white race

- Claiming that Black Lives Matter protests and Antifa were met with much less force on the part of the police than the insurrectionists were; this was often accompanied by racist rhetoric (specifically anti-Black and anti-Semitic)

- Advocating for a “no political solution” position in the wake of the insurrection

- Criticising the insurrectionists for not being organised enough to do anything significant upon entering the Capitol

- Viewing the insurrection as a bold historical moment when white people stood up against tyranny despite a certain level of disorganisation

Mainstream Platforms

In addition to increased levels of activity on fringe platforms, mainstream social media have also dealt with the sharing of extremist content, disinformation, and misinformation. According to the George Washington University Program on Extremism’s growing database of criminal complaints related to the insurrection, 82% of the records “contained some type of evidence from social media” (with Facebook being the most cited platform), and most of this evidence was shared on social media while the insurrection was underway. In terms of pushback from these platforms, Facebook started banning ‘Stop the Steal’ for “sowing violence” back in November of 2020. However, the task of keeping up with this nefarious content has been difficult due to the sheer volume of posts and the continued determination of some users to remain on these platforms. When it comes to deplatforming far-right communities and white supremacists, it may be tempting to draw parallels with deplatforming Islamic State from Twitter, but Maura Conway provides crucial insights into the differences:

- Far-right/white supremacist spaces are “fast-changing scenes”

- Most far-right/white supremacist groups do not carry a formal terrorist designation by the international community, unlike the Islamic State

- Far-right/white supremacist ideologies have a much wider global supporter base including politicians, political parties, media outlets, and voters

- Far-right/white supremacist groups have been able to rely on high-profile sites that have received high traffic over the years

- Mainstream and fringe far-right personalities have profited financially from virtual far-right spaces

These five primary points highlight why dealing with far-right and white supremacist presences across social media platforms poses such a difficult task. The discussion on deplatforming VFRE groups often expands to include questions about what else could be done to limit the online activity of these groups, such as regulation or the removal of users or content. A recent Vox-Pol article by Ofra Klein discusses the implications of users being censored or removed from online platforms and offers the reminder that “far-right users, as early adopters of the internet, have adopted creative strategies around censorship.” This is important because these individuals or groups do not just disappear; they often resurface elsewhere, including potentially offline. Nonetheless, deplatforming extremists in mainstream spaces still has an impact. As Cynthia Miller-Idriss and J.M. Berger respectively explain, “Deplatforming is an important strategy” and “it’s not a total solution by any stretch…but it’s better than doing nothing.”

Recommendations and Conclusion

The question “What can we do about this?” remains and it is becoming ever-more pressing by the day. There will not be a one-size-fits-all solution but here are possible policy suggestions for social media companies:

- Even if extremist content appears to be moving away from your specific platform, still pay attention to cross-platform pollination such as page or group links from platform ‘X’ being shared on platform ‘Y’. Deplatformed individuals may try to regroup elsewhere and/or strategise a return to the original platform under a less conspicuous name to avoid another ban.

- Rely on human researchers who are observing these spaces to report on strategies that extremists are sharing in their own networks to circumvent and avoid bans, moderators, detection, etc. This should be followed up by monthly reports from researchers and content moderators. Researchers and content moderators themselves should also follow extremists’ instructions on circumventing the system to see how effective such measures are in keeping extremist content under the radar.

- Take note of trending hashtags associated with extremist ideologies, hashtag hijacking by extremists, and new extremist terminologies entering into these spaces.

- Continue human content moderation alongside AI-based moderation.

- Remain flexible as new developments arise: this may include changes in government policy, new adaptations by extremists in the virtual space, a shift in platform migration patterns, etc.

- Incorporate insights from former VFRE members in online intervention work.

One of the authors, Brad Galloway, has significant experience with the online presence of VFRE groups, having spent 13 years within international far-right circles before leaving in 2011. He noticed the ways in which VFRE groups increasingly utilised the Internet to organise and communicate as it grew to prominence. Particularly noticeable was their use of Napster, Yahoo chat, and MSN Messenger in the earlier Internet days and the eventual shift to the primary mainstream social media platforms of the present: Facebook and Twitter. However, extremist activities were not and are not limited to those spaces and, as Galloway notes in an interview with Ryan Scrivens, forums such as Stormfront played a vital role as collection points where VFRE adherents could organise. As outlined in the final recommendation, former extremists have an important role in understanding what VFRE activity looks like online and how it operates. However, Galloway emphasises that ethical considerations must be kept in mind whenever formers engage in online intervention work. A key component would be an encompassing multi-sectoral approach to address these issues.

There is no ‘golden ticket’ solution to combat online activity by VFRE groups leading up to tragic events such as the 6 January insurrection. However, building on what we have learned to date, remaining adaptable, and working across sectors and areas of expertise are certainly good places to start.