Introduction

In July and August 2024, Bangladesh underwent a significant political transition, culminating in the end of Sheikh Hasina’s 15-year tenure as Prime Minister. This Insight offers a concise overview of two key risks that have emerged online since this upheaval and provides practical recommendations for risk mitigation. The identified risks are:

- The use of digital platforms for retribution through doxxing and revenge porn.

- The struggle for narrative dominance in the information space through amplification tactics.

A Brief Timeline

In 1972, the Ministry of Cabinet Services in Bangladesh instituted a policy reserving 30% of roles in the Bangladesh Civil Services for freedom fighters (and their descendants) who participated in the 1971 Bangladesh War of Independence. Sheikh Hasina abolished this quota system in 2018 through an executive order following widespread student protests. The student protests were a reaction to the perceived unfairness of the quota system in Bangladesh, particularly its impact on merit-based recruitment in government jobs.

On 5 June 2024, the Bangladesh High Court reinstated the quota system – ruling that its abolition was illegal – which precipitated further student demonstrations. Reports indicate that law enforcement agencies employed unlawful force to suppress these protests. Furthermore, a video depicting the killing of an unarmed Bangladeshi student activist by the Bangladesh Police went viral on 16 July, igniting public outrage. The government imposed an internet shutdown across Bangladesh on 18 July, during which time hundreds of protester fatalities were reported.

On 21 July 2024, the Supreme Court overturned the reinstatement of the quota system. Despite this, protesters continued to demand accountability for those killed and called for Sheikh Hasina’s resignation. On 5 August 2024, Sheikh Hasina resigned from her position as Prime Minister – shocking the country – following a critical meeting with her security leaders. According to the BBC, the security chiefs informed her during this meeting that they could no longer guarantee her safety amidst escalating unrest across the country. She fled to India, reportedly at short notice.

Sheikh Hasina’s sudden departure left a power vacuum that exacerbated existing tensions in the country. As tensions escalated, Hindus – who make up 7.95% of Bangladesh’s population and have historically been perceived as supporters of Sheikh Hasina’s secular party, the Awami League – were considered to be at risk. Since 2001, human rights organisations have documented waves of attacks against minority groups in Bangladesh. Following Sheikh Hasina’s departure, there have been numerous reports of attacks on Hindus and their places of worship in the Muslim-majority country. As of 7 August, reports from The New York Times, BBC, and Human Rights Watch have verified attacks on minority groups in Bangladesh.

The subsequent analysis evaluates the severity and potential impact of the primary emerging risks within the context of the current events in Bangladesh.

Risk 1: Online Retribution – Digital Logs and Non-Consensual Image Sharing of Private Individuals Allegedly Involved in Counter-Protest Activities

Several websites established by protesters have disseminated images, personal information, and social media profiles of law enforcement officers, as well as members of the Chhatra League, who are allegedly responsible for the deaths of demonstrators. Chhatra League is the student wing of the Awami League, the political party formerly led by Sheikh Hasina. Links to these websites, along with calls for users to submit reports, have been circulated across social platforms, potentially in violation of platform terms of service.

While conclusive research on the impact of such online behaviour is lacking, parallels can be drawn from similar incidents elsewhere. During the 2019 Hong Kong pro-democracy protests, activists leaked personal information of police officers, while pro-government supporters engaged in similar activities against protesters. Protesters may see doxxing as a means of seeking justice, deterring further violence, and challenging existing power dynamics in the face of perceived oppression. However, media research on such incidents consistently demonstrates that sharing private information online puts individuals’ safety at risk and can provoke violent reprisals, leading to a cycle of aggression between protesters and others that often escalates the situation rather than facilitating dialogue.

Additionally, numerous online groups feature non-consensual intimate images of women allegedly associated with the Chhatra League. Posts in these groups not only circulate the images but also actively encourage further dissemination and redirect users to Telegram channels set up for this purpose. Moreover, as of 12 August, the Google search auto-complete function for ‘Chhatra League’ offered “Chhatra League viral video” and “Chhatra League viral video telegram link” as top search options, reflecting trending interest in the search term.

These actions are characteristic of image-based sexual abuse, commonly referred to as “revenge porn.” Such activities, driven by a desire for retribution, violate individuals’ privacy and dignity and constrain women’s participation in the public sphere.

Risk 2: What are the facts? Amplification of Opposing Crisis-Related Narratives

Another risk is the artificial amplification of crisis-related content, which makes it seem more popular than it really is. This tactic is commonly employed in disinformation campaigns and by digital activists to shape public opinion.

An analysis conducted using the X API for the neutral keyword “Bangladesh,” covering the period from 31 July to 6 August 2024, indicates a high prevalence of narratives concerning the safety of Hindus in Bangladesh. Clusters of similar text strings present different perspectives on the safety of Hindus. The analysis conducted for this study identifies three key stages in the evolution of each cluster:

- A specific string of text is defined, with acknowledgement of the creator when applicable.

- The creator or organised groups disseminate the text on social media, urging users to post it to increase visibility and achieve target engagement levels.

- Amplifiers, including popular actors, respond to the call to action, contributing to the campaign’s reach.
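The source does not describe how its clusters were computed, so the following is only a minimal Python sketch of one way to group near-identical posts. It assumes posts have already been collected as dictionaries with hypothetical `author`, `text`, and `created_at` fields (ISO 8601 timestamps in one consistent format). The function names `normalise` and `cluster_identical_posts` are illustrative and are not part of the X API.

```python
import re
import unicodedata
from collections import defaultdict


def normalise(text: str) -> str:
    """Reduce a post to a comparable form: case-folded, without URLs,
    mentions, or punctuation, and with whitespace collapsed."""
    text = unicodedata.normalize("NFKC", text).casefold()
    text = re.sub(r"https?://\S+", " ", text)   # drop links
    text = re.sub(r"@\w+", " ", text)           # drop @mentions
    # Replace punctuation (Unicode categories starting with "P") with spaces,
    # leaving letters and combining marks in any script untouched.
    text = "".join(
        " " if unicodedata.category(ch).startswith("P") else ch for ch in text
    )
    return " ".join(text.split())


def cluster_identical_posts(posts, min_size=3):
    """Group posts whose normalised text is identical.

    `posts` is an iterable of dicts with the assumed keys
    'author', 'text', and 'created_at'. Returns clusters with at
    least `min_size` posts, largest first.
    """
    groups = defaultdict(list)
    for post in posts:
        groups[normalise(post["text"])].append(post)

    clusters = []
    for key, items in groups.items():
        if not key or len(items) < min_size:
            continue
        clusters.append(
            {
                "text": key,
                "count": len(items),
                "authors": sorted({p["author"] for p in items}),
                # String comparison is only valid because the timestamps
                # are assumed to share one ISO 8601 format.
                "first_seen": min(p["created_at"] for p in items),
            }
        )
    return sorted(clusters, key=lambda c: c["count"], reverse=True)
```

In a sketch like this, `first_seen` may help locate the earliest poster of a string (the first stage above), while the number of distinct authors gives a rough view of how far a call to repost has spread. Exact-match grouping will miss paraphrased or translated variants, which would need fuzzier matching.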

Between 31 July and 6 August 2024, two key campaigns concerning the safety of Hindus competed for engagement on X, presenting opposing viewpoints and evidence.

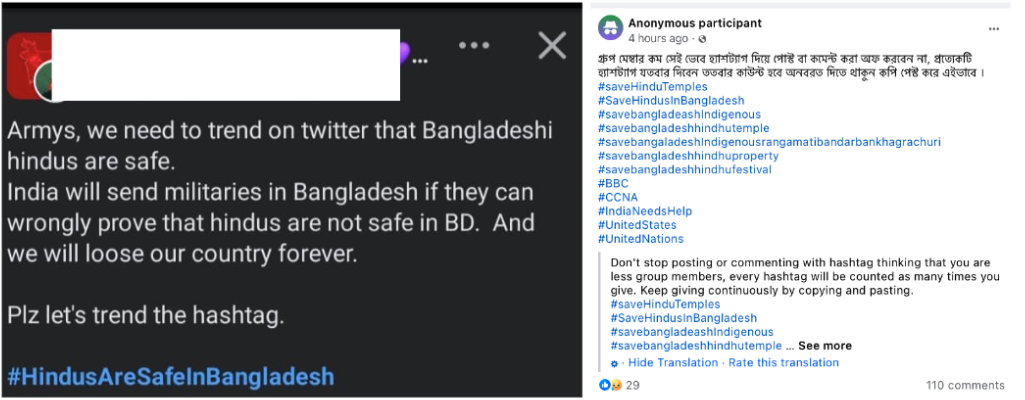

Hindus Are Safe in Bangladesh

Context: On 5 August 2024, a trending topic on X in Bangladesh involved identical posts stating, “I am Hindu, and my friends from every religion in Bangladesh are here to protect me. We built this Bangladesh together. We are united.” The organised nature of the campaign, with coordinated posts, suggests a strategic effort to maintain social harmony and prevent the spread of extremist influence (Figure 1). The call to repost this string in high volumes was made on major platforms (Facebook and X) and was further amplified by public Telegram channels (52k subscribers) and organised online groups which appear to be associated with the student protest movement. As of 6 August, this campaign did not recognise verified attacks against minorities, potentially downplaying the significance of the reported incidents.

Figure 1: Examples of the ‘Hindus Are Safe in Bangladesh’ post.

Type of Content: Images depicting groups of men standing outside Hindu places of worship, suggesting that they are protecting these properties. The campaign primarily presents evidence and testimonials demonstrating the peaceful coexistence of religious communities in Bangladesh. The objective appears to be countering negative perceptions regarding the safety of the Hindu community in Bangladesh (Figure 2).

Figure 2: A tweet seeking to present a counter-narrative about the safety of Hindus in Bangladesh.

All Eyes on Bangladeshi Hindus. Save Bangladeshi Hindus

Context: This campaign, likely inspired by the “All Eyes on Rafah” campaign, has seen a rise in interest on X since 5 August 2024 (Figure 3). A review of top posts in this cluster, mostly in Bengali, Hindi, and English, reveals primarily graphic imagery of attacks on women, physical violence, and the destruction of temples. The hashtag “Hindus Under Attack” is frequently used in conjunction with these posts. The goal of this campaign seems to be to document and publicise incidents of violence against Hindus in Bangladesh. It aims to raise awareness both domestically and internationally, challenge narratives that minimise the struggles of Hindus, and advocate for governmental action to protect the rights of Hindus in Bangladesh.

Figure 3: An ‘All Eyes on Bangladesh’ post

Type of Content:

- AI-generated images of crying children in front of burning buildings and fictional temples on fire, with lines of dead bodies in the foreground.

- News reports from media outlets documenting attacks on Hindu places of worship.

- Note: While verified reports document attacks on Hindu places of worship, some imagery associated with this campaign has been debunked by IFCN-associated fact-checkers.

Figure 4: An AI-generated image in support of Bangladesh Hindus.

Figure 5: An AI-generated image in support of Bangladesh Hindus.

In addition to misinformation, manipulated imagery, and graphic content, automated accounts are leveraging these campaigns to drive traffic to websites with high ad placement or unrelated content. For example, one X account posted over five tweets per minute, rapidly switching topics from tsunami warnings in Japanese to claims that Hindus were under attack. The co-opting of the ongoing crisis by likely financially motivated actors further complicates the information environment.
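The posting rate noted above (more than five posts per minute from a single account) suggests one simple behavioural signal. The Python sketch below is a hypothetical illustration rather than any platform's actual detection method: it assumes posts are available as `(author, timestamp)` pairs, and the names `peak_posts_per_window` and `flag_high_frequency_accounts`, along with the default threshold, are assumptions chosen to mirror that example.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def peak_posts_per_window(timestamps, window=timedelta(minutes=1)):
    """Return the largest number of posts inside any sliding window."""
    recent = deque()
    peak = 0
    for ts in sorted(timestamps):
        recent.append(ts)
        # Discard posts that are a full window (or more) older than `ts`.
        while ts - recent[0] >= window:
            recent.popleft()
        peak = max(peak, len(recent))
    return peak


def flag_high_frequency_accounts(posts, threshold=5, window=timedelta(minutes=1)):
    """Map each author whose peak rate exceeds `threshold` to that peak.

    `posts` is an iterable of (author, datetime) pairs (assumed shape).
    """
    by_author = defaultdict(list)
    for author, ts in posts:
        by_author[author].append(ts)

    flagged = {}
    for author, stamps in by_author.items():
        peak = peak_posts_per_window(stamps, window)
        if peak > threshold:  # "over five" posts within one window
            flagged[author] = peak
    return flagged
```

A high posting rate alone is not proof of automation. In practice, such a signal would likely be combined with others, such as the abrupt switching between topics and languages described above.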

Recommendations for Risk Mitigation: Invest in Technology and Resources to Mitigate Risks in Non-English Languages

The flow of information during a crisis presents a complex challenge. Bangladesh’s approach to addressing this involves a combination of regulation and takedown requests directed at technology platforms. Amnesty International and other human rights watchdogs have reported that legal provisions in Bangladesh, particularly the Cyber Security Act, have been “weaponized to target journalists, human rights defenders, and dissidents.” In addition to regulatory measures, Bangladesh’s Telecommunication Regulatory Commission and Digital Security Agency have significantly increased the number of requests submitted to technology platforms (such as Facebook and Google) over the past five years. During the 2021 communal violence, the Bangladesh Telecommunication Regulatory Commission asked Google to remove 175 YouTube videos that were allegedly inciting interfaith violence.

In response to the spread of inaccurate information, technology platforms (Facebook, TikTok, Google) have invested in fact-checking. However, while fact-checking within tight timelines is crucial, it is not sufficient: when important context (such as the results of fact-checks) is not provided to users in a timely manner for crisis-related content in non-English languages, a significant gap opens in the dissemination of accurate information within the affected communities.

The problem becomes even more severe once inaccurate or inauthentically amplified information spreads to encrypted communication channels, where efforts to correct or clarify the content can no longer reach the users consuming or sharing the original content or its variations. Without crucial context, users are more likely to spread inaccurate claims, especially during a crisis. This lack of context has been shown to escalate tensions and, in some cases, lead to real-world violence, as evidenced by several incidents in South Asia.

Recent events in Bangladesh’s online sphere illustrate the challenge of insufficient language support during a crisis. On 6 August 2024, a group of posts on X and Facebook claimed that the house of Bangladeshi cricketer Liton Das had been set on fire following Sheikh Hasina’s resignation as Prime Minister. These posts included captions such as “Hindu cricketer Liton Das’s house has been set ablaze. Brotherhood prevails” (translated from Hindi). Although various organisations fact-checked this claim on the same day, many of the posts on Facebook, often accompanied by similar captions in non-English languages, lacked fact-check labels as of 19 August. Furthermore, no community notes were found on any of the analysed claims in any language on X.

These examples highlight the complex environment confronting Bangladesh’s government, users, and technology platforms. To help mitigate these risks, technology platforms must, first, localise behavioural detection measures in non-English languages and, second, obtain insights from regional experts to guide product safety efforts.

Social and political crises, as illustrated above, often generate specific regional threats. Models built on global data may oversimplify and fail to account for the complexity of local behaviour, and so miss nuanced changes in sentiment or response that are critical during a crisis. Automated detection systems trained primarily on data from one region (such as Indian Bengali) may lack the diverse linguistic data necessary for accurately recognising and processing the Bengali spoken in Bangladesh, reducing accuracy and generalisation and, in turn, degrading content moderation results.

In several instances, communal violence in the region has been linked to inflammatory social media posts. For example, in 2012, an attack on a Buddhist village was reported to have been incited by such posts. Similarly, incidents of violence against minorities in 2013 and 2016 were attributed to inflammatory social media content. In 2021, communal violence erupted once again, with social media posts cited as a factor contributing to the unrest.

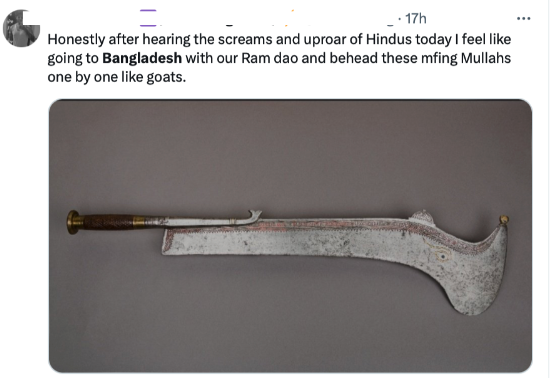

To effectively assess the risk of further escalation of violence in the region, it is essential to enhance local expertise within global tech platforms, whether through in-house development or collaboration with third parties. Given the historical context of violence in Bangladesh and the role of technology in exacerbating social tensions, there is an immediate need for locally focused early risk indicators. Some early indicators are already appearing in the information environment (Figure 6).

Figure 6: A tweet threatening violence against Muslims.

These indicators and their prevalence must be treated as high-severity risks by technology platforms. Such initiatives must be adequately resourced with market expertise and sufficient product support to prevent the recurrence of past conflicts.

Failing to address these issues may result in the repetition of historical patterns of violence and perpetuate the negative impact of technology on social dynamics.