Content warning: This Insight contains language and images that are antisemitic, Islamophobic and racist.

Introduction

Over the past few years, the West, particularly Europe, has seen a troubling rise in far-right movements promoting nationalist agendas and xenophobia. These movements and ideologies are not confined to the offline space. With the rise of digital platforms, music and audio-sharing sites have become powerful spaces for creativity and communication, but the same platforms have also become venues for extremist groups to spread their messages through songs, playlists, and podcasts that are challenging to regulate. Platforms such as Spotify and SoundCloud, while primarily focused on music and podcasts, have been used for malign purposes by extremist groups globally.

Music has played a key role as a tool for propaganda and radicalisation, especially via social media, inspiring youth and attracting sympathisers for far-right and Hindutva movements and for groups like the Islamic State. The power of music lies in its ability to affect memory and emotions, making it a potent tool for embedding extremist ideas into listeners’ minds, often without them realising it. Extremists exploit this by setting their hate-filled messages to catchy, rhythmic tunes.

This Insight focuses on the prevalence of far-right and white supremacist music and hate speech on Spotify, showing how the platform’s vast library, which spans various ideologies, is being exploited to spread hate, racial intolerance, and Islamophobia.

Background and the Beginning

White power music originated in the 1970s out of the British skinhead subculture and quickly spread to the U.S. and Canada. Over the next several decades, the genre evolved with the social movements and perceived crises of the time. By the late 1990s and early 2000s, the advent of the internet had made this music easier to access, strengthening its influence within the white supremacist movement.

White power music holds significant influence within the white supremacist community, serving not only as entertainment but also as a vehicle for spreading messages of hate and violence against groups such as immigrants and non-whites. This kind of music serves two purposes: it can directly incite violence, as seen in hate crimes following white power music events, and it spreads hate within communities by normalising racist ideologies. Moreover, the internet and digital platforms have turned music into a tool for spreading hate internationally and for passive recruitment.

Music as a Recruitment Tool

White power music, widely known as “hatecore”, has long served as both a recruitment and radicalisation tool. It includes varied genres of pro-white racist music, such as Rock Against Communism (RAC) and National Socialist Black Metal (NSBM). Despite their differences, these genres share a common thread of xenophobic rhetoric. Hatecore music provides a gateway for recruiting young and disaffected individuals into the white supremacist movement. Artists use aggressive lyrics and themes to resonate with those feeling marginalised or angry. The music achieves this by creating a sense of community and belonging among listeners, fostering a collective identity centred around racist and supremacist ideologies. Its influence is also decentralised: even without large centralised organisations, hatecore music continues to spread extremist ideologies and inspire individuals, which keeps it a tool of the white supremacist subculture.

For instance, in RAHOWA’s song “White People Awake,” the lyrics “White people awake! Save our great race!” and “The white race will prevail!” are aimed at promoting white supremacy and racial dominance. Another song, “White Power” by the defunct British band Skrewdriver, includes the lines “White Power! For England – Before it gets too late” and “Once we had an Empire, and now we’ve got a slum”, reflecting a white supremacist view.

Similarly, in the song “Assassin” by Blue-Eyed Devils, the band advocates for its cause through calls for vigilante justice and extreme violence: “Assassin! I’ll end your life, Slice your black throat with my knife… Shut your black mouth with lethal force, Murder your family with no remorse!”

Events like NSBM and white power music concerts have contributed to the spread of right-wing extremist groups and to the socialisation of their members. Moreover, the music is relatively easy to access online; even an unexpected encounter through an automatically curated playlist can draw newcomers towards white supremacist and far-right ideologies. Besides encouraging existing extremists to commit acts of violence, it also subtly influences potential recruits, making its impact very dangerous.

Music and Playlists on Spotify

Spotify has been criticised for hosting playlists and songs that promote white supremacist messages. Playlists may appear ordinary at first but often have coded titles, descriptions, or songs that align with extremist ideologies (as demonstrated in the figures below). Such playlists often use racial dog whistles: symbols or words aimed at those who already understand or support white supremacist and far-right language.

A 2022 report by the Anti-Defamation League identified 40 white supremacist artists on Spotify whose music was used to entertain and radicalise listeners. In response, a Spotify spokesperson said the company had removed over 12,000 podcast episodes, 19,000 playlists, 160 music tracks, and nearly 20 albums that violated its hate content policies, but acknowledged that more work remains to be done.

However, I recently found many playlists that have songs, names, and symbols that incite hate and are blatantly xenophobic. Some were labelled under terms such as ‘fashwave’ or ‘white pride’, which reference fascism and white nationalist ideology.

Fashwave, or “fascist wave,” emerged in the mid-2010s as a musical subgenre linked to the alt-right. The appeal of fashwave lies in its nostalgic use of “80s clichés”, tapping into a longing for a perceived better past. This reflects a broader trend within the alt-right, which romanticises the Reagan era as a time of “halcyon days” and the last stronghold of white America. Additionally, the blend of sound and visuals that fashwave borrows from vaporwave creates an immersive experience that resonates with feelings of alienation among Western youth online, making it a compelling tool for far-right propaganda.

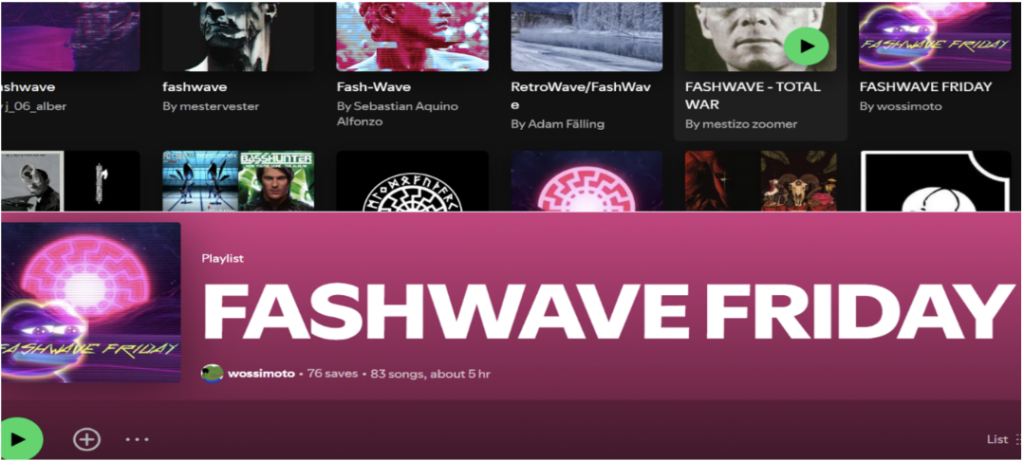

Figure: 1

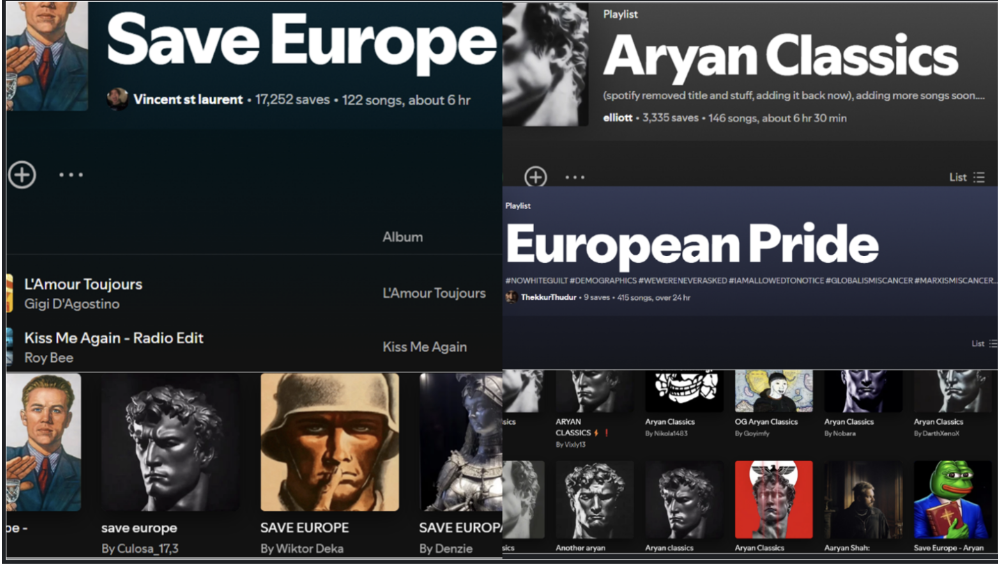

Figure 1 shows Fashwave playlists on Spotify, many of which display white supremacist imagery like Pepe the Frog and the typical symbolism and aesthetics of ‘fashwave’.

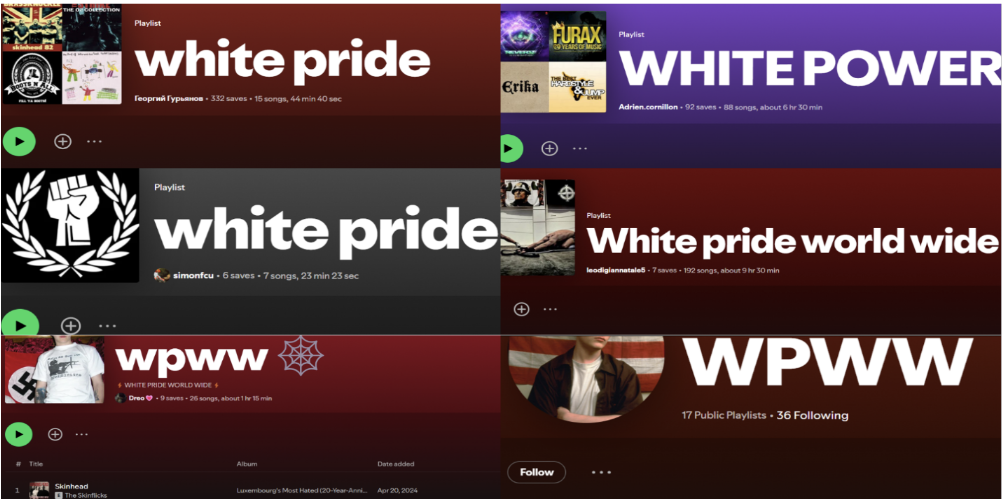

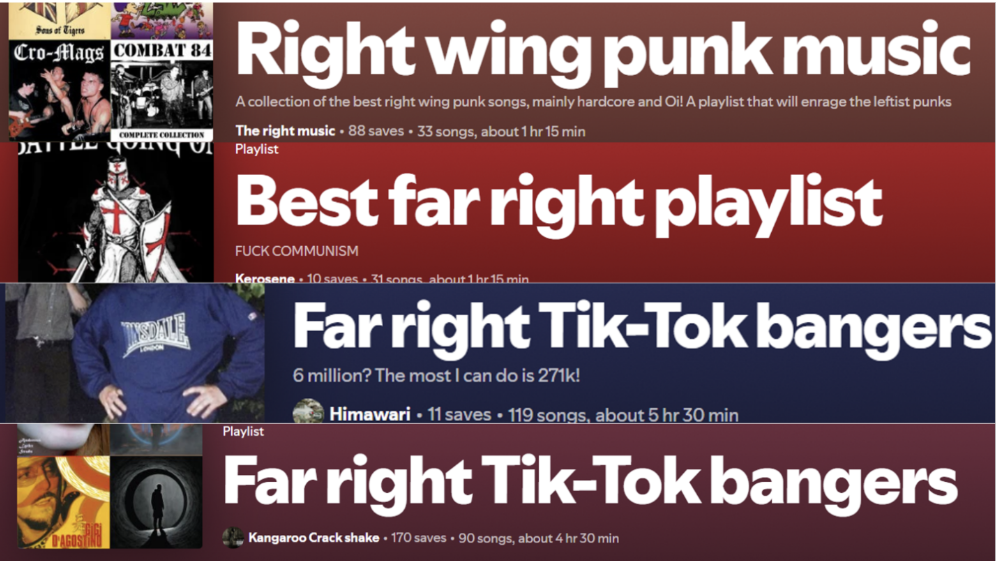

Rock Against Communism, a sub-genre of punk whose songs promote xenophobia and racism, is also present on the platform. Figure 2 shows multiple playlists with derogatory names, symbols, and images.

Figure: 2

Additionally, the acronym WPWW, which stands for “White Pride World Wide,” is noticeably present in the playlists (Figure 3). This phrase was part of the logo for Stormfront, the largest white supremacist website on the Internet and the first major hate site online, and that association has contributed to the phrase’s widespread adoption among white supremacists, who also use phrases like “white power” and “white pride” in slogans and songs.

Figure: 3

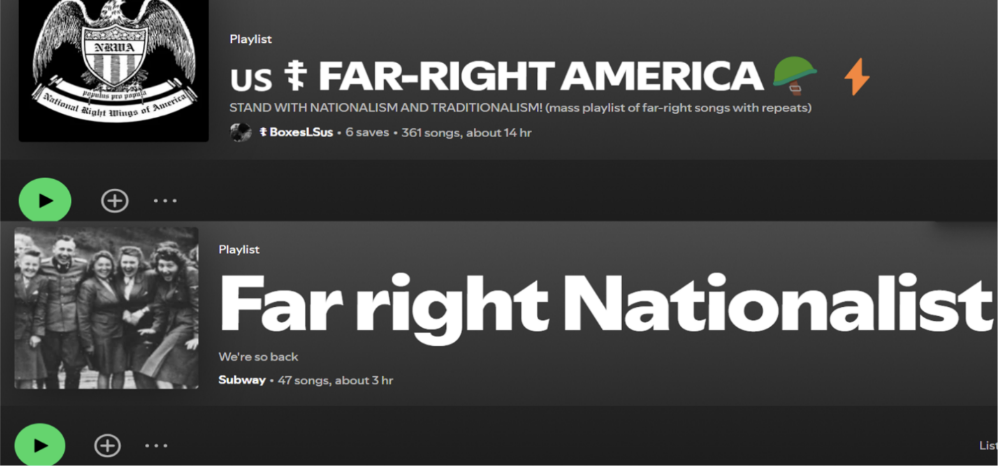

Similarly, there are playlists with nationalist and far-right songs, as shown in Figure 4. Both playlist titles seem to cater to a similar crowd, celebrating far-right beliefs. The explicit use of phrases like ‘Far right America’ and ‘far-right nationalists’ is meant to push the creators’ ideology and create a sense of belonging among their followers.

Figure: 4

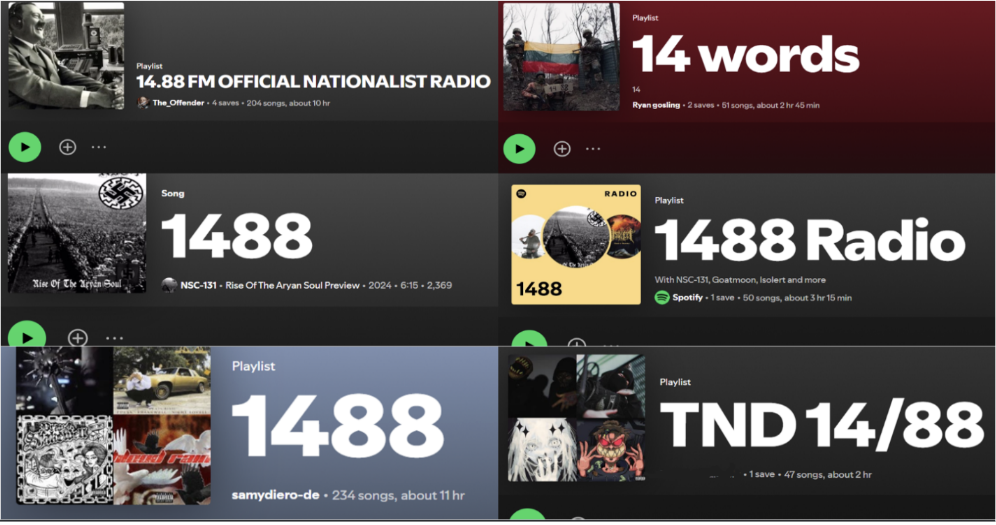

Hate speech and xenophobia are also very apparent on Spotify in the form of numerical hate symbols. The number 1488, present in several user-created playlists and titles, combines two prominent white supremacist symbols: 14, which refers to the “14 Words” slogan, and 88, which stands for “Heil Hitler” because H is the eighth letter of the alphabet. Together, they endorse white supremacy, and variations of the symbol include 14/88, 14-88, and 8814. Visual symbols appear as well: white supremacists often place another hate symbol, such as a swastika, in the centre of a Sonnenrad (sunwheel), as visible in the playlist images. However, it is important to note that not every Sonnenrad-like symbol represents racism or white supremacy, as similar imagery is used by various cultures.

Figure: 5

Save Europe Trend

In May 2024, the 1999 hit L’Amour Toujours by Italian DJ Gigi D’Agostino resurfaced. A viral video showed partygoers outside a bar on Sylt, an island in Germany, chanting “Foreigners out, Germany for the Germans!” to the tune of the song. The song itself is unrelated to ethnic purity or xenophobia; it is about passionate love. However, the crowd’s actions gave it a different meaning: at least one man was seen giving a Nazi salute. Moreover, German far-right groups have begun using the song for racist chants. This situation is similar to how the US alt-right co-opted the “Pepe the Frog” meme, which was initially harmless but became linked to far-right politics.

L’Amour Toujours is among a rising number of songs that the far right has co-opted into a made-up genre it refers to as “Save Europe” music. These tunes are often sped up to a frenzied nightcore style and are used by internet creators in YouTube edits and TikTok memes that promote racist, anti-immigrant messages, advocating for a fully white Europe. There are now dozens of popular Save Europe-themed playlists on Spotify, with titles like ‘European Pride’ and ‘Aryan Classics’, as shown in Figure 6.

Figure: 6

Interestingly, many users posted viral clips saying they didn’t support the racism behind the movement but liked the music, unintentionally amplifying the toxic subculture.

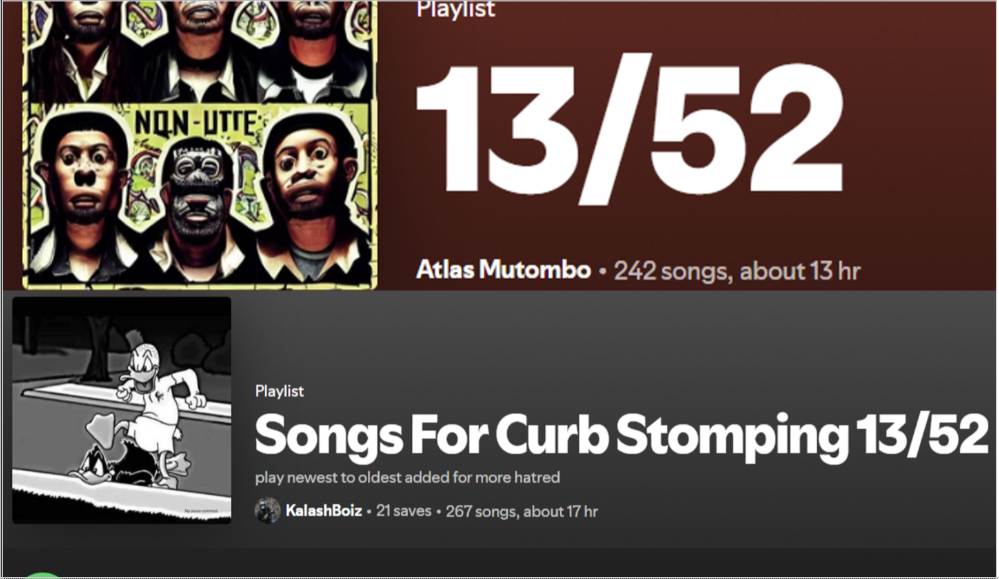

Many far-right playlists are also filled with hateful and often antisemitic and racist language, as shown in Figure 7.

Figure: 7

Moreover, racist, antisemitic, and Islamophobic playlists and songs appear on Spotify alongside hateful imagery, further spreading harmful narratives. Figure 8 shows the numeric hate symbols used by white supremacists as code to refer to African Americans. The combination of the number 13 with either 52 or 90 serves as shorthand for racist propaganda that portrays African Americans as violent or criminal. In this code, 13 represents the supposed percentage of the US population that is African American, while 52 refers to the claimed percentage of all murders in the US allegedly committed by African Americans.

Figure: 8

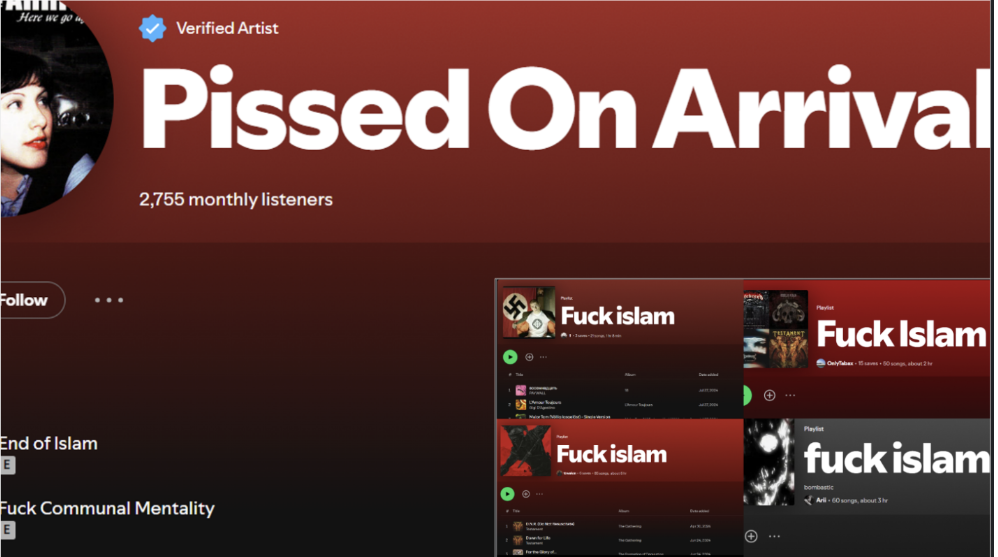

Figure 9 is a collage of Spotify playlists and a verified artist profile. Verified artists can leverage Spotify’s tools and metrics to grow their fanbase, so this example highlights that even verified accounts can promote and spread harmful content, such as explicit imagery and offensive language. These playlists specifically target the Muslim community, as indicated by playlist titles like ‘*uck Islam’ and songs like “End of Islam”.

Figure: 9

The figures shared above highlight the presence of hate speech and extremist content on Spotify.

Spotify’s Policies and Content Moderation

Spotify’s Platform Rules state that “content that incites violence or hatred towards a person or group of people based on race, religion, gender identity or expression, sex, ethnicity, nationality… or other characteristics associated with systemic discrimination or marginalization” is not allowed. Additionally, any content “promoting or glorifying hate groups and their associated images, and/or symbols” is not allowed. Despite this, the playlists and images linked to white supremacist or extremist music shared above violate these rules by promoting xenophobic, Islamophobic, and dehumanising content, imagery, and symbols.

In recent years, Spotify has updated its policies to combat hate speech and removed thousands of songs identified as promoting extremism, but some content can remain up for months or even years before being flagged or removed. Gaps remain, possibly because of the sheer volume of content uploaded daily. Moreover, content creators have found ways to dodge restrictions, such as using alternative spellings, coded references, and hate symbols or slogans.

Recommendations and Conclusion

Some steps could help Spotify foster a safer and more inclusive environment while combating harmful ideologies. Spotify should:

- Implement advanced algorithms and human review processes to identify and remove hate speech and extremist content. The platform should also establish ways for users and experts to provide input on the effectiveness of content moderation policies.

- Improve user reporting tools to make it easier to flag problematic content and provide clear feedback on actions taken. Additionally, Spotify should share transparency reports that outline the actions taken against reported content, along with the reasons for those decisions. This would build trust and accountability among the user community.

- Use its platform to promote podcasts and campaigns that explain the dangers of extremist ideologies and offer counter-narratives. Spotify should also partner with artists and influencers to raise awareness about combating hate speech and promoting inclusivity.

Spotify and other platforms have made efforts to limit extremist content, but xenophobic and hateful music and playlists persist, showing that more needs to be done. Digital platforms like Spotify, driven by user content and algorithms, can unintentionally fuel extremist ideologies. This highlights how radicalisation can happen not just on social media, but also on audio platforms used by millions. Music and audio platforms must remain spaces for creativity and expression, not breeding grounds for hate and extremism.

Note: Most screenshots were captured between September 15-21, 2024, and have been organised into themed collages for clarity. While not all playlists consist entirely of extremist songs or artists, many have reflected extremist symbols, language, and content.