Introduction

The Indian general election of 2024 saw a surge in the deployment of AI-based technologies, particularly deepfakes and disinformation campaigns. Political parties in India spent around $16 billion on what became the world’s largest election. They used AI to create realistic but fake videos, such as one showing Prime Minister Narendra Modi dancing to a Bollywood song and another in which a politician who died in 2018 endorsed a friend’s campaign. Deepfakes and disinformation carry an inherent danger of shifting the public agenda, influencing citizens’ decisions in elections, and undermining the legitimacy of political parties and candidates. The expansion of AI-driven tactics also escalates concerns about the stability of democratic institutions. Drawing on cases from the 2024 Indian general election, this Insight examines the problems that other democracies may face as AI technologies expand and become more accessible. It then outlines policy measures to spot and stop AI-based election disruptions.

Background: AI and the 2024 Indian Elections

The use of AI in India’s politics has changed how campaigns are run and messages are spread. This matters not just because India is the world’s largest democracy but also because it may indicate the challenges other democracies could face. Misleading fake videos featuring Bollywood celebrities circulated during the seven-phase election. Meta, which owns WhatsApp and Facebook, approved 14 AI-generated electoral ads containing Hindu supremacist language and calls for violence against Muslims and an opposition leader.

During the 2024 Indian general election, political parties used AI in many ways, often without much oversight or transparency. On the one hand, parties used AI to create fake audio, propaganda images, and parodies. On the other, AI helped them reach a wide audience by creating content in different languages, spreading both real and fake information. It helped parties navigate the nation’s 22 official languages and thousands of regional dialects. AI was used to create funny or misleading images and videos targeting opponents, boost politicians’ images, bring back popular figures to support one party, and spread false stories about rivals. For example, the Bharatiya Janata Party shared an AI-made video showing one of Modi’s main opponents, who was in jail, playing a guitar and singing a Bollywood song. Meanwhile, the Congress Party made a video mocking Modi by changing song lyrics to suggest he was giving the country to big businesses.

AI also cuts the cost of reaching voters. Using AI for calls was eight times cheaper than using human call centres, which made it more attractive. Political parties used AI to send personalised messages by cloning the voices of local politicians and delivering them directly to voters’ phones. In the two months before the elections began in April, over 50 million calls were made using this AI technology.

Two aspects stand out regarding the prevalence of realistic AI-generated content in India. First, this content is crafted to evoke strong emotions, is often translated into regional languages, and focuses on building personal connections with prominent leaders, especially deceased politicians. Second, it spreads through less regulated platforms, where it is frequently shared by local content creators. Thus, the Indian elections highlight how trusted relationships can be forged or fractured by highly realistic, personalised content tailored to regional audiences and widely disseminated.

Challenges Ahead for Global Democracies

The use of AI in India’s elections was varied and provides useful insights for upcoming elections, including in the United States later this year. Some key trends identified during the Indian elections can improve our understanding of how AI-generated content is shared and how future campaigns may use AI. One major trend is the use of AI in meme culture. People are likely to use AI to create and share satirical images and videos. Memes can spread messages, including misinformation, and make extreme behaviours seem normal through humour. Another important trend is the global market for AI-generated content. Companies around the world now offer AI tools to create and alter audio and visual content. India-based Polymath Solutions, a big player in making synthetic media for Indian elections, is expanding to countries like Canada and the United States. Furthermore, adversarial states can use free and paid AI tools to spread misinformation and create fake engagement on social media, thereby interfering with the election narrative. This content, including memes, videos, and audio, could be spread to affect election results, though the immediate impact may be limited. Recently, Microsoft warned that China might use AI-generated content to influence public opinion and further its geopolitical goals.

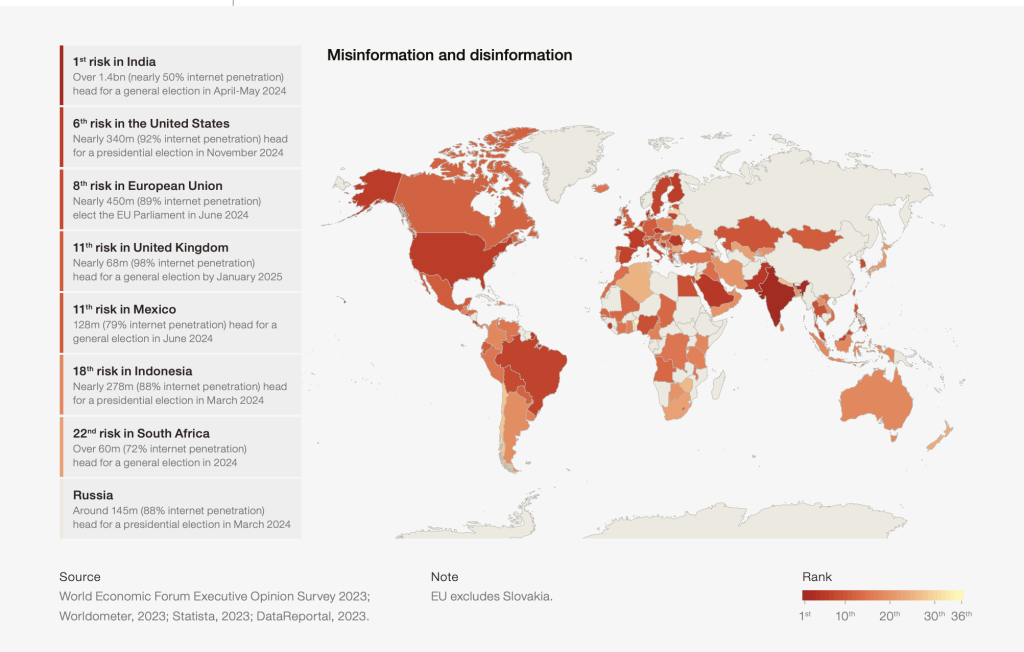

The rapid growth of generative AI technology is changing politics and creating challenges for electoral democracy. The Global Risks Report 2024 called misinformation and disinformation a significant problem that could destabilise society by making people doubt election results (Figure 1). To function effectively, democracy relies on a transparent and trustworthy information environment. Voters need to see what politicians are doing, understand candidates’ promises, and grasp the proposed policies. Deepfakes pose several threats to this process. First, voters could be easily misled by fake content, especially if it is released close to election day, leaving little time to fact-check. Second, if fake content spreads widely, it could make voters lose trust in all digital information. This would make it hard for them to evaluate candidates properly. Third, politicians might exploit this loss of trust to ignore or dismiss real and important information.

Generative AI can make very convincing deepfakes, which can spread deceptive content, especially close to election day. Unscrupulous actors are likely to spread deepfakes carefully designed for maximum effect, without regard for truth or accountability standards. There have already been real-world examples of this in elections. For example, in the recent Turkish elections, President Recep Tayyip Erdoğan showed a fake video at a large rally that wrongly linked his main opponent, Kemal Kılıçdaroğlu, to the PKK, a Kurdish group labelled as a terrorist organisation by the U.S. State Department. Additionally, there is growing concern that voters might depend on chatbots for election information, although chatbots may not be reliable sources. AI-powered micro-targeting and emotionally manipulative chatbots might persuade voters to act against their interests or polarise them. Moreover, there is a growing possibility that extremist groups might use these chatbots to recruit people for their purposes. Finally, the rise of generative AI could concentrate control of online information in a few big tech companies. These companies might decide which ideas and values are allowed and which are not, which could threaten free speech and expression.

However, generative AI can also benefit politics by creating easy-to-understand summaries of policies, helping voters evaluate candidates, improving communication between citizens and lawmakers, and supporting under-resourced campaigns. Many people find it hard to write to politicians because they do not know how to express their concerns or follow the expected format; AI tools can make this process more accessible. This might also help lawmakers understand common issues among citizens, making them more responsive. Additionally, AI could help level the playing field in political campaigns. Campaigns with less money often struggle to create good content. AI can help them write better speeches, press releases, social media posts, and other materials. By helping campaigns look more professional, AI could reduce the gap between new candidates and well-established politicians.

Measures for Guarding Elections against AI Misuse

AI has the potential to improve elections by helping people communicate with lawmakers and making campaigns fairer. However, using AI carefully is important to avoid problems such as spreading false information or giving some candidates unfair advantages. We need strong rules and policies to ensure AI benefits elections without causing harm. These measures will help keep the election process fair and trustworthy while allowing AI to be used positively.

The most significant step in this direction is to ensure transparency and accountability. Election officials and campaigns should disclose how they use AI, including the algorithms, data sources, and purposes, so that the public can check for bias or manipulation. Election officials must carefully choose which AI tools to use. They should test AI software before using it, and vendors should ensure their products are high-quality, private, and secure. Since AI models are created using external data that may contain biases, officials should ask vendors to reveal the data used to develop their products. If possible, officials should also have vendors demonstrate their technology using state and local voter data. Moreover, to combat deepfakes and AI-generated misinformation, lawmakers might consider whether the intentional deception of voters should be made illegal.

Additionally, investment is needed in fact-checking tools and media literacy, particularly in educating the public about the potential for AI-generated media to influence or manipulate elections. By informing consumers, digital literacy campaigns play a crucial role in mitigating the negative effects of generative AI. Recently, 20 major tech companies, including Google, Meta (Facebook and Instagram), OpenAI, X (formerly Twitter), and TikTok, promised to take steps to detect, track, and fight the use of deepfakes and other efforts that interfere with elections. Major AI companies like Meta, Google, and OpenAI have promised to use watermarks and labels. The idea is for these companies to add a code that marks content made or changed by AI, making it detectable. Media and social media outlets should then label this content as “AI generated.” Journalists also have a key role in rigorously verifying the accuracy of election-related information. While the development of federal laws to address disinformation is an important consideration, it must be balanced with the protection of free speech and dissent, which are essential elements of a healthy democracy. Any legal measures should be carefully crafted to avoid infringing on legitimate expression, with clear guidelines and independent oversight to ensure fairness and accountability.

Anadi is currently serving as a Research Associate at the Centre for Air Power Studies (CAPS) in New Delhi, India. She is actively engaged in a book project titled "Non-Traditional Security Threats in South Asia: Challenges for India." Her research interests encompass both traditional and non-traditional security threats, public policy, and climate policy. She completed her M.Phil. in the Diplomacy and Disarmament division, Centre for International Politics, Organization and Disarmament (CIPOD), School of International Studies, Jawaharlal Nehru University, New Delhi. She completed her Master’s in Politics with a specialization in International Studies at the School of International Studies, Jawaharlal Nehru University, New Delhi. She was also a recipient of a Junior Research Fellowship for completing her M.Phil. dissertation.

X: @Anadi2018; LinkedIn: https://www.linkedin.com/in/anadi-choudhari-86a761318/