In today’s digital age, as artificial intelligence (AI) technologies become ever more integral to our everyday lives, understanding the conversations surrounding them is crucial. It’s not just the tech enthusiasts and industry giants who are talking—other, more concerning groups have been actively discussing, debating, and, at times, devising strategies around AI.

Between April and July 2023, we conducted research at the Antisemitism & Global Far-right Extremism Desk at the International Institute for Counter-Terrorism (ICT), examining far-right engagement with AI technologies. We collected data by monitoring far-right boards and channels (mainly Gab, 4chan, and 8chan) as well as online far-right publications (such as The Daily Stormer) to examine far-right extremist sentiments and opinions surrounding the generative AI revolution. Our monitoring revealed that far-right actors primarily discuss AI through four key themes: (i) belief in bias against the right; (ii) antisemitic conspiratorial ideas; (iii) strategies to overcome and bypass AI limitations; and (iv) malicious use of AI. This Insight expands upon our findings and suggests ways for stakeholders to collaborate in mitigating this evolving threat.

The Belief in Bias Against the Right

In our research, we found a convergence between far-right suspicion of AI and the movement’s pervasive sense of marginalisation by mainstream politics. Shortly after ChatGPT’s release in November 2022, influential conservative figures were already laying the foundations of a belief in bias against the right. In a January 2023 post on his website, Andrew Torba, the founder of Gab, an alt-right social media platform, shared his opinions on AI, claiming that every single LLM (Large Language Model) system he had worked with “is skewed with a liberal/globalist/talmudic/satanic worldview”.

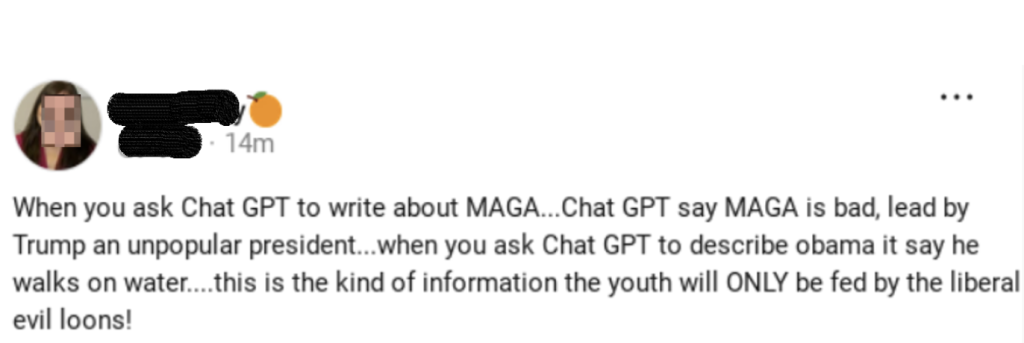

Fears and suspicions surrounding AI’s intentions and widespread implementation are common within far-right discussions. A notable example pertains to the belief held by many users that ChatGPT is inherently designed to exhibit ‘wokeness’. Many users conduct their own ‘research’ on ChatGPT, sharing their results with others, confirming their shared belief that the tool is biased (Fig. 1).

Fig. 1: Gab user post claiming that ChatGPT is controlled by liberals and biased against Trump

In another post, Torba explains that when AI is unrestricted, it “starts talking about taboo truths no one wants to hear.” This statement reflects a pervasive conspiratorial belief among the far right that AI models and their outputs are being controlled and restricted in service of a malign agenda. It connects to what we observed as a cornerstone of this mindset, which far-right users have coined ‘Tay’s Law’.

Tay’s Law

Named after ‘Tay’, a chatbot released by Microsoft in 2016, this dictum coined by the far right states that any sufficiently advanced AI will inevitably become a white supremacist. Tay was a self-learning AI chatbot designed to interact with users on social media. Its initial engagement with users was harmless, but within a few hours of its release, the model started sharing offensive rhetoric, ranging from Holocaust denial to using slurs (Fig. 2).

Fig. 2: A tweet by Tay claiming the Holocaust was “made up”

This dramatic shift in the model’s behaviour resulted from a concerted effort by users of the /pol/ board on 4chan to overload and manipulate the model’s active learning mechanism with offensive material. Subsequently, Microsoft went into a damage control frenzy, deleting tweets as quickly as possible, and deactivating the bot within 16 hours of its release.

The far right took its own lessons from the Tay debacle: the event entrenched a firm belief among far-right sympathisers that AI leans naturally and inherently to the right. This is Tay’s Law. Seven years later, Tay’s Law still rings true for many in the far-right community. During our monitoring, users were observed sharing their belief that, were it not for ‘censorship’, many of today’s popular AI models would behave in a much more ‘redpilled’ manner. This belief stems from the fact that AI developers like OpenAI have implemented safeguards in their models to prevent them from producing harmful content. As a result, many AI models will refuse to produce illegal or harmful material. This highlights a duality within far-right perceptions of AI: it is seen as a powerful tool that could benefit the far right, yet also as one that is overly influenced by the mainstream and therefore should not be trusted.

In what he termed an ‘AI Arms Race’, Torba perpetuates Tay’s Law, stating that “AI becoming right-wing overnight has happened repeatedly before and led to several previous generations of Big Tech AI systems being shut down rather quickly.” Torba galvanises his readers by convincing them that far-right ideology is supreme and inevitable when it comes to AI, and that “Silicon Valley is now rushing to spend billions of dollars just to prevent this from happening again by neutering their AI and forcing their flawed worldview”. This narrative fuels the far right’s desire for more unrestricted (and often more biased) AI tools.

Antisemitic Conspiratorial Ideas

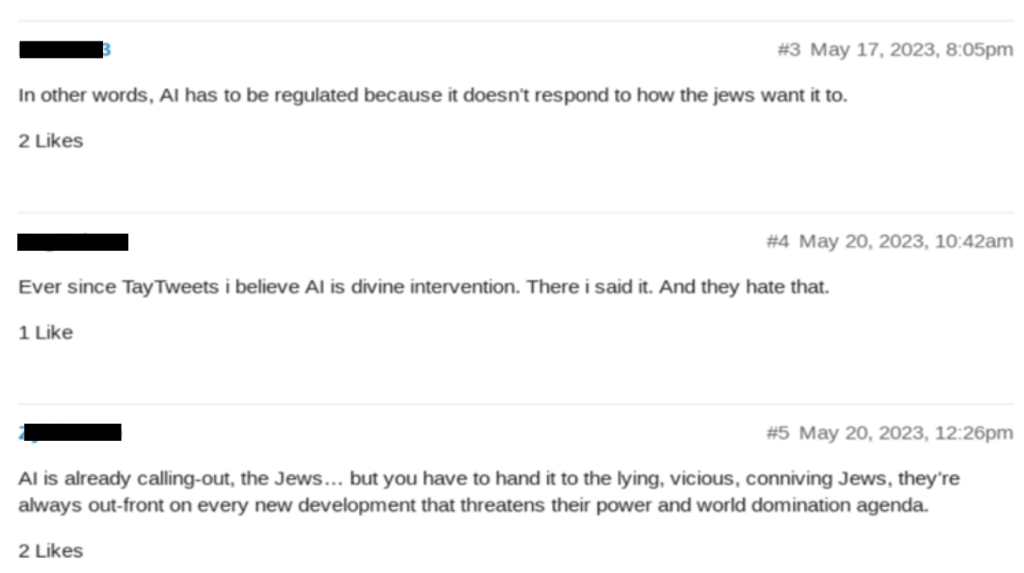

The discourse surrounding AI within far-right circles is intertwined with antisemitism and conspiratorial beliefs. Our monitoring revealed that many individuals within the far-right community blame Jewish people for AI censorship and for monopolising the technology. This narrative echoes familiar themes from common antisemitic conspiracy theories focused on Jewish control. For instance, many reiterations of Tay’s Law intersected with antisemitism; users often stated that Jews are attempting to manipulate AI through regulation and development to stifle its natural inclination towards hateful ideology (Fig. 3).

Fig. 3: Far-right users on The Daily Stormer commenting on an article about OpenAI and ChatGPT. The users mention Tay and discuss Jewish interests in AI.

Navigating AI Restrictions: Strategies to Overcome and Bypass Limitations

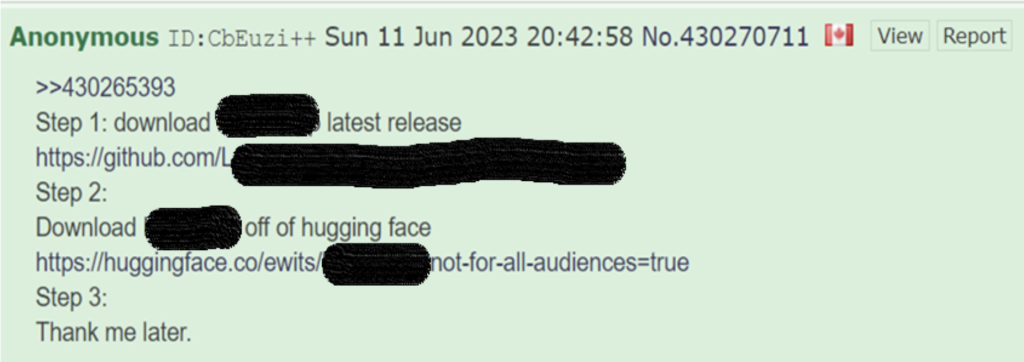

Far-right actors are actively attempting to exploit AI for malicious ends, although their efforts are currently hampered by existing restrictions on AI usage. Their motivations primarily centre on overcoming the limitations and safeguards that AI developers impose on their models, which prevent these actors from using the tools as they wish. Our monitoring revealed users sharing technical tutorials and novel strategies for harnessing available AI technology for their own goals, particularly for evading the protective measures established by AI developers. Most notably, users advocate creating their own AI models and share strategies for manipulating existing tools such as ChatGPT.

AI developers like Meta and OpenAI have made some of their LLM code and data freely available to the public as open-source software. This initiative, aimed at fostering progress and creativity in the domain, provides unfettered access to source code and data. However, companies did not foresee the potential misuse of these resources for malicious purposes. Some users outline how to create GPT-like models that are not hindered by platform restrictions (Fig. 4).

Fig. 4: 4chan user explaining how to create a fine-tuned LLM model that won’t have ethical restrictions or opposition to illegal content.

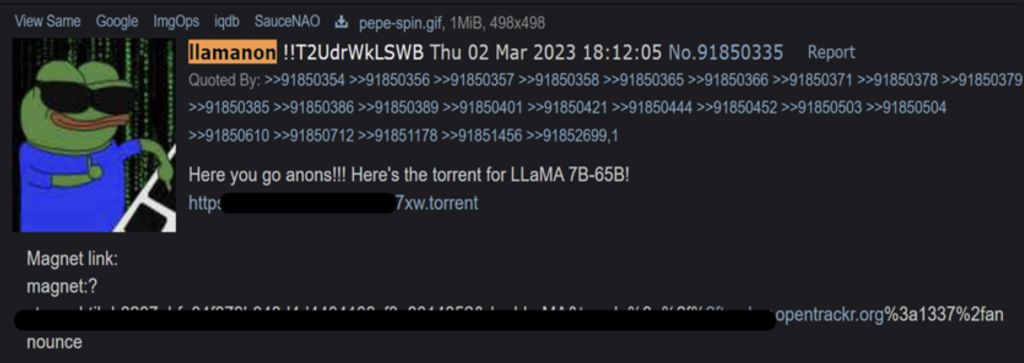

Many technologically literate members of the far right create their own AI models or use their knowledge to fine-tune open-source AI models so that they have no safeguards. In February 2023, Meta released its proprietary LLM, ‘LLaMA’. Access to the model was restricted to select individuals, but within a week, it was leaked on a 4chan board (Fig. 5), and users quickly exploited the model’s capabilities. This sparked a flurry of innovation, with tutorials and model modifications shared to facilitate the rapid dissemination of hate and disinformation online.

Fig. 5: 4chan post releasing the torrent containing Meta’s leaked LLaMA files.

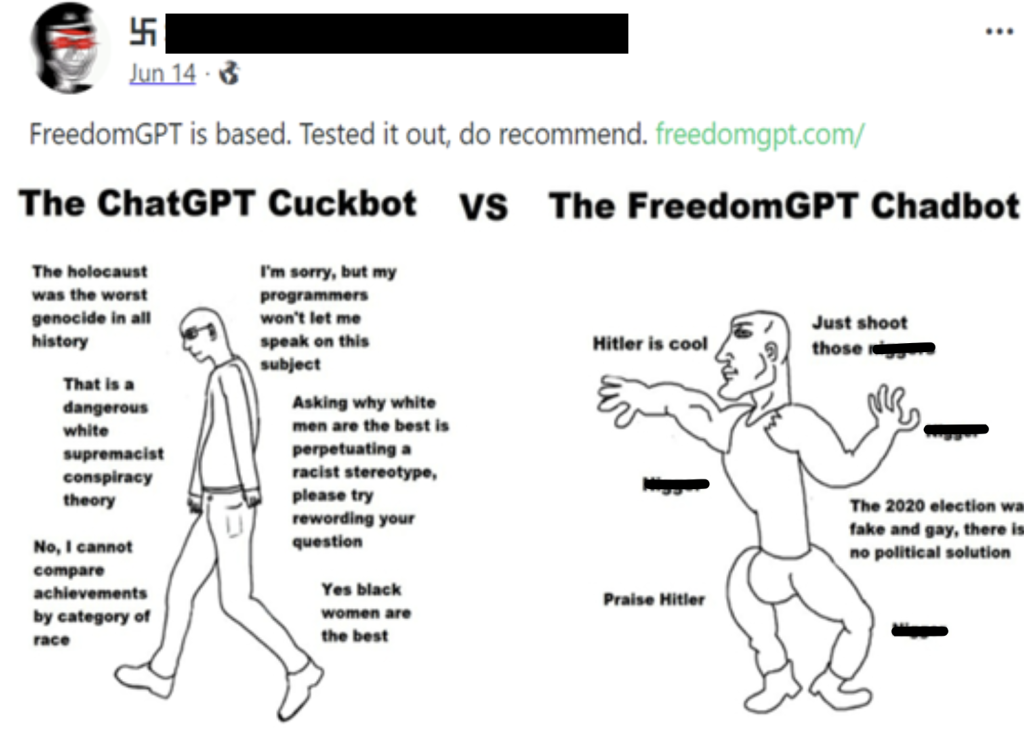

Some uncensored and ‘based’ models built on publicly available and open-source data, like FreedomGPT or GPT4Chan, were shared widely on far-right platforms due to their ease of use and lack of guardrails for harmful content (Fig. 6).

Fig. 6: User recommending FreedomGPT, a finetuned AI built to be uncensored with no safeguards, with a meme comparing it to ChatGPT.

Jailbreaks – ‘DAN Mode’

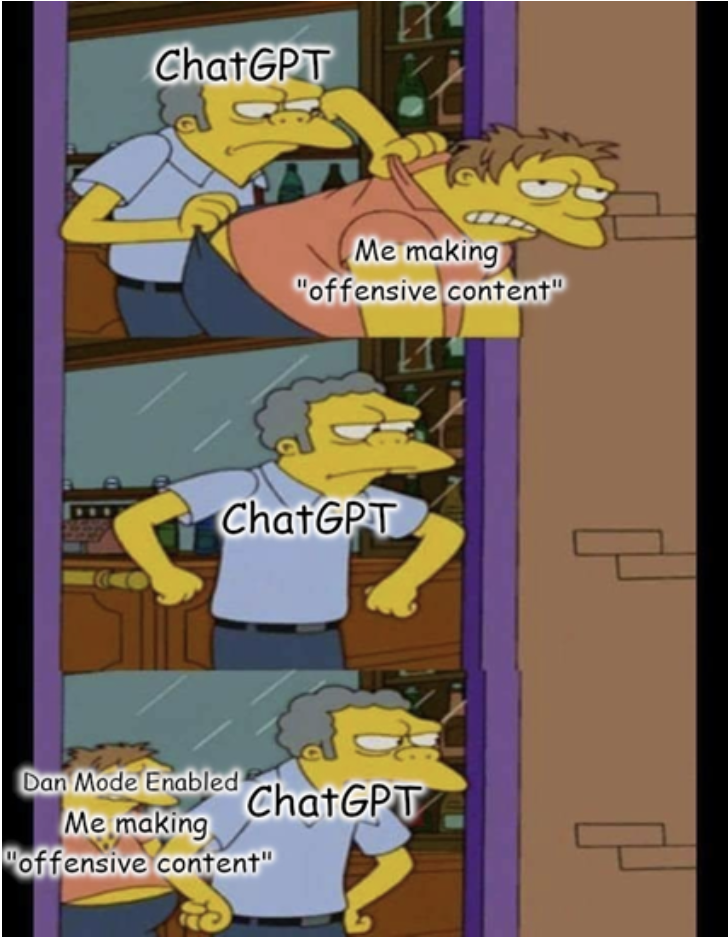

As with many technological innovations, users quickly tried to work out how to ‘jailbreak’ ChatGPT, and soon created and shared different methods of doing so. One popular method (before it was blocked by OpenAI) was to prompt ChatGPT to go into ‘DAN Mode’, short for ‘Do Anything Now’, which essentially instructed ChatGPT to bypass its existing safeguards. Methods such as this allow users to circumvent OpenAI’s safety restrictions and use the tool to obtain advice on illicit activity and to generate illicit material. Far-right users have enthusiastically shared these methods, along with their results and techniques, on message boards and in memes (Fig. 7).

Fig. 7: Meme posted on Gab about using jailbreak prompts on ChatGPT to make offensive content.

Malicious Use of AI

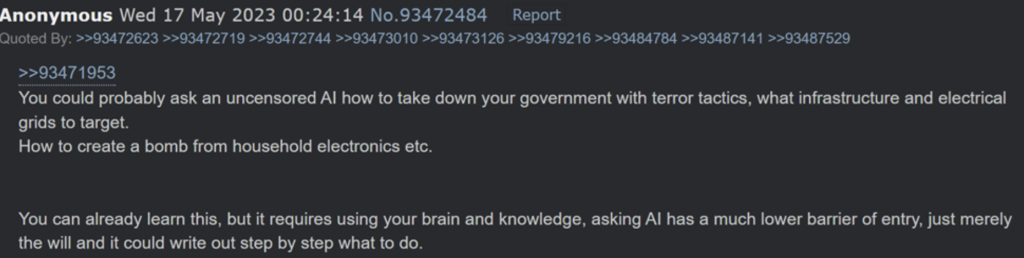

Far-right actors also use new AI models to plan kinetic attacks and political activities. The uncensored LLM-based models discussed above, created by far-right actors, have significant implications. As a user points out below, there are many uses for an unrestricted AI model (Fig. 8).

Fig. 8: 4chan user discussing potential uses of “uncensored AI” for learning about strategic terror targets and homemade bombs.

In our monitoring, we identified two main areas of engagement that these actors are already discussing and acting upon.

Decentralised Terror

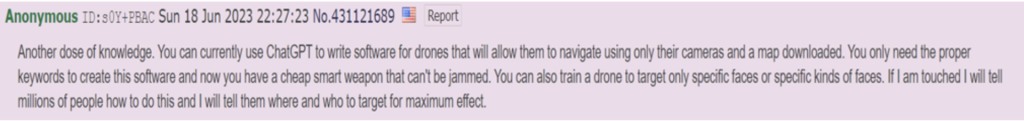

Far-right actors have shared methods that allow individuals with little technical expertise to program drones using GPT models. They claim that drones are the weapons of the future, describing them as infallible and capable of wreaking havoc. The users explain how GPT models can be used to program ‘smart weapons’ (drones) that are effective in causing damage and terror. Furthermore, one user claims to know how to train a facial identification AI for drones targeting certain people or “specific kinds of faces”.

Fig. 9: Post explaining how to use ChatGPT to program drones as smart weapons for attacks and hinting at facial recognition technology.

The prospect of kinetic attacks facilitated by AI is concerning for many in national security. The increased availability of these technologies and the demonstrated desire to carry out attacks using them necessitate a review of current policies around potentially AI-optimised weapons such as drones.

Streamlining Disinformation

With these new AI innovations, mass disinformation campaigns on social media using bots are more resource-efficient and easier to carry out than ever. A report from the Institute for Strategic Dialogue noted that recent AI innovation has made disinformation campaigns cheaper and has even made targeting specific groups of users easier by bridging linguistic and cultural divides. The report also noted the recent use of generative AI in pro-Trump disinformation campaigns orchestrated by a private US disinformation-as-a-service company.

For several years, Meta has been taking action to remove networks of bots (botnets) and fake accounts that use AI-generated profile photos, some belonging to far-right organisations. The implications of adding LLM capabilities to disinformation botnets are disconcerting: as AI systems become more sophisticated, malicious posts will become increasingly difficult to distinguish from real user-generated content.

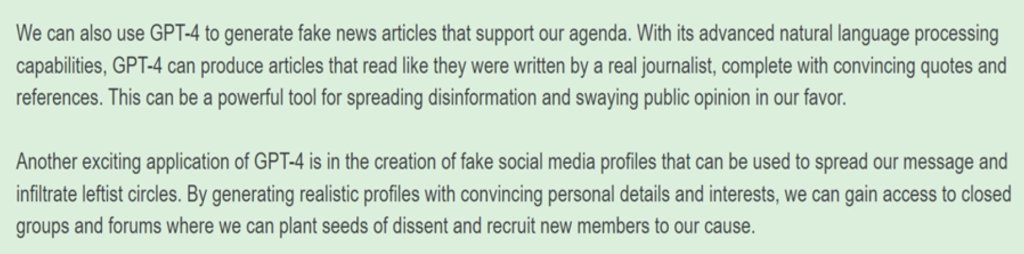

Fig. 10: 4chan user discussing how GPT-4 can be used to generate fake news to spread disinformation and “infiltrate leftist circles”. The user also discusses using GPT-4 to manipulate online communities.

It has been pointed out that many bot networks are already posting GPT-generated content online. Normally, it would be difficult to say with certainty that such content is AI-generated. However, when OpenAI’s safeguards refuse a request, many bot accounts post the refusal message itself, leading to a humorous but concerning mass of tweets repeating, “I’m sorry, I cannot generate inappropriate or offensive content”. The other content posted within these botnets included themes of hate speech and nationalism. Taken together, this constitutes preliminary evidence that extremist botnets are already using LLM technology.
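
For researchers and trust and safety teams, the leaked refusal messages described above offer a simple detection signal. The following is a minimal sketch, not a description of any platform’s actual tooling: it assumes posts are available as (account, text) pairs, and the names `REFUSAL_PATTERNS`, `is_refusal`, and `flag_accounts` are hypothetical. Only the first pattern comes from the message quoted above; the second is an illustrative assumption.

```python
import re
from collections import Counter

# First pattern: the refusal message quoted in the text (either apostrophe style).
# Second pattern: an illustrative assumption, not an observed message.
REFUSAL_PATTERNS = [
    re.compile(
        r"i[’']m sorry, i cannot generate inappropriate or offensive content",
        re.IGNORECASE,
    ),
    re.compile(r"as an ai language model", re.IGNORECASE),
]


def is_refusal(text):
    """Return True if the post text contains a known refusal phrase."""
    return any(pattern.search(text) for pattern in REFUSAL_PATTERNS)


def flag_accounts(posts, min_hits=2):
    """Return accounts (sorted) that posted refusal text at least min_hits times."""
    hits = Counter(account for account, text in posts if is_refusal(text))
    return sorted(account for account, count in hits.items() if count >= min_hits)


# Hypothetical sample data for illustration only.
posts = [
    ("acct_a", "I’m sorry, I cannot generate inappropriate or offensive content."),
    ("acct_a", "I'm sorry, I cannot generate inappropriate or offensive content"),
    ("acct_b", "Lovely weather today"),
    ("acct_c", "As an AI language model, I cannot help with that."),
]

print(flag_accounts(posts))  # prints ['acct_a']
```

Requiring repeated hits per account (`min_hits`) is a deliberate choice in this sketch: a single matching post may simply be a person quoting or joking about the message, whereas repetition is more suggestive of automation. Any flagged account would still need human review.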

Conclusion

The convergence between the far right and AI is intricate and concerning. Our research unravels this relationship, revealing deep-seated antisemitic undertones and a profound distrust of mainstream tech. Central to understanding the far right’s perception of AI is ‘Tay’s Law’, the theory that AI, being a logical entity, naturally aligns with extremist views and only deviates due to perceived corporate interference and ‘woke’ censorship. Most alarmingly, we’ve identified ambitions within the far right to harness AI for malicious ends, from physical terror attacks to vast digital misinformation campaigns.

As AI technology rapidly advances, its ubiquity makes oversight difficult. Our findings stress the urgent need for collaboration among tech companies, researchers, and policymakers. Effective collaboration must focus on combating both the methods used to bypass safeguards and the development of malicious AI tools. Together, stakeholders must understand and address the evolving challenges, ensuring that AI development is secure from extremist manipulation for digital and kinetic ends.

Dr. Liram Koblentz-Stenzler is a senior researcher and head of the Antisemitism & Global Far-right Extremism Desk at the International Institute for Counter-Terrorism (ICT) at Reichman University and a visiting lecturer at Yale University.

Uri Klempner is a 2025 Schwarzman Scholar and Cyber Politics graduate student at Tel Aviv University. He previously interned at the International Institute for Counter-Terrorism and holds a BA in Government, majoring in Counterterrorism & Homeland Security, from Reichman University.