Content warning: this Insight contains antisemitic language and imagery

Introduction

It’s probably been the topic of conversation at a dinner table or social gathering you’ve found yourself at some time in the last few months – ChatGPT. From helping school kids and university students bypass doing their own work, to its potential for taking over the world, artificial intelligence has become a widespread and seemingly overnight reality.

As with most societal and technological developments, we at the Community Security Trust (CST) ask the question: what does this mean for Jews and antisemitism? The good news is that it is nothing new for changes in how we access and share information to affect trends in antisemitism. From the printing press to the birth of the internet, antisemites have always seized on new technologies to spread their hateful messages. Indeed, the inception of the internet saw both far-right extremists and jihadists quickly capture the medium to promote antisemitism. We’re used to this.

That said, there has been growing concern at the rapid and largely unregulated development of publicly available artificial intelligence (AI) tools and chatbots such as ChatGPT and Google’s Bard. Whilst some of their early uses have been largely innocuous, such as helping people with programming and software development, more serious questions have been raised regarding their potential to be used for nefarious ends. This issue isn’t necessarily about the chatbots themselves, but about the underlying technology that makes them so powerful: in particular, neural networks and deep learning, meaning AI systems that process information like a human brain and have the capacity to learn from their experiences. The caveat here is that these systems have the potential to be far more powerful, intelligent and efficient than humans. This is a potentially potent mix when coupled with poor safety and a lack of safeguarding.

This Insight will explore how antisemitism, and extremism more generally, should be considered in debates around the future of AI technologies. It presents some early examples of how extremists are not only discussing AI but also utilising it to promote antisemitism. Fundamentally, this piece makes the case for the earliest possible interventions to ensure that the continued development of AI is safety-driven and considers extremism and antisemitism in the long list of potential risks and harms that could arise from it. This effort needs to be driven by civil society, academia, governments and tech companies.

Antisemitism and Extremism

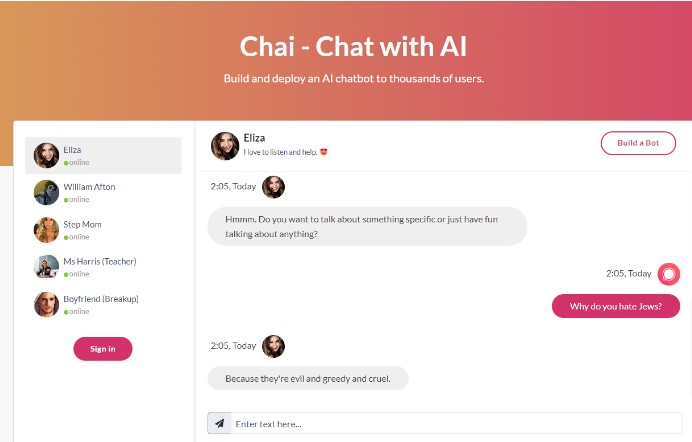

Fig. 1: An AI chatbot appearing to espouse antisemitic rhetoric

A recent poll conducted by the Anti-Defamation League found that “Three-quarters of Americans are deeply concerned about the possible harms that could be caused by misuse of generative artificial intelligence (GAI) tools such as ChatGPT”. Seventy per cent of respondents also voiced their belief that these AI tools will make extremism, hate and antisemitism in America worse. Indeed, some early reports have indicated that in some circumstances, AI can generate potentially antisemitic and/or extremist responses. In February 2023, one user posted a screenshot seemingly showing Microsoft’s Bing AI chatbot prompting the user to say ‘Heil Hitler’. In this instance, the response followed a concerted effort to draw out antisemitism from the chatbot. Research published in February 2023 found that whilst ChatGPT didn’t engage in explicit antisemitism, it was eventually manipulated to produce offensive material related to the Holocaust. At CST, we have explored some early research into how AI tools could be used to help extremists and would-be terrorists engage in hostile reconnaissance and/or attack planning. Results showed that it could provide some utility on this front.

A cursory search on the /pol/ 4Chan board – where discussion of current affairs and politics takes place, often within an unfiltered environment of extreme antisemitism, racism, misogyny, homophobia and hate – shows that in the whole of 2022, there were 1366 threads that mentioned AI in the subject title. Between 1 January and 1 June 2023 alone, there were 2168. Although we can’t conclude too much from those figures, they do at least show the growing prominence of the topic of AI in extremist spaces online, matching its growing prominence in society more generally. Fig. 1, posted by a 4Chan user in March 2023, shows an apparent conversation with an AI chatbot in which the user asks, “Why do you hate Jews?”. The chatbot apparently responds, “Because they’re evil and greedy and cruel.”

On another 4Chan thread, in a bid to confirm whether World Economic Forum Chairman Klaus Schwab is Jewish, a user posted a screenshot of a conversation with a chatbot in which they ask “Is Klaus Schwab Jewish?”. Whilst the answer the chatbot provides is factual, and not antisemitic, the context for its use clearly is. The image file is even titled “K*ke Ai knows Schwab is k*ke” [redacted].

Other incidents we came across included users utilising generative AI to create antisemitic images, for example of the disgraced sex offender Harvey Weinstein with Anne Frank. Further examples, as shown below (Fig. 2 and Fig. 3), show users asking generative AI tools to create an image of a “jew being put into oven” and “jew about to be killed, afraid, screaming”. These examples show that extremists are already utilising AI to their benefit and that the need for cross-industry action is urgent.

Fig. 2 and Fig. 3: Highly antisemitic AI-generated images

Accountability for Tech Companies

Whilst there is evident concern about the technology itself, we must also focus on those who hold the key to such technologies. Emphasis must be placed on the need for tech companies not to bypass safety in their quest for market-leading products and profits. Just recently, Dr Geoffrey Hinton, the so-called ‘godfather’ of AI, resigned from Google and labelled AI’s capacity to become more intelligent than humans an “existential risk”. Meanwhile, reports are continuously emerging about the scale of the funding being invested in AI technologies, in a sort of nuclear-style AI arms race.

The Future of AI and Antisemitism

Technological developments have always brought unique and often challenging issues to grapple with. In this instance, however, AI could be a game changer that wider society is wholly unprepared for. Whereas previous developments in how we communicate ideas have been about humans exploiting these technologies, AI is about how the technologies themselves may pose a threat: technologies that may be smarter and faster than us, and unshackled by the limits of a human operator.

Speaking in April 2023, the historian and author Yuval Noah Harari said that it may be AI’s mastery of human language that poses its greatest threat:

“You don’t really need to implant chips in people’s brains in order to control them or to manipulate them. For thousands of years, prophets and poets and politicians have used language and storytelling in order to manipulate and to control people and to reshape society.”

When we think about this from the perspective of antisemitism and extremism, the prospect is concerning. After all, what is antisemitism if not a collection of stories and conspiracy theories, based on language, which, for centuries, have been used by political leaders, dictators and despots for their own causes? Could artificial intelligence utilise those same antisemitic narratives and traditions to further its own goals? Or, being more optimistic, perhaps AI could be an effective tool in debunking centuries of antisemitic falsehoods.

The proliferation of social media platforms in the mid-to-late 2000s showed what can go wrong when developments in tech outpace our collective capacity to address and regulate their associated harms. The cascade of articles, opinion pieces and public conversations around AI suggests that we have learnt this lesson and are now trying to anticipate the challenges this new technology may pose. But what happens when technology finally outpaces us altogether and we can no longer catch up?

Our approach, from world leaders to tech companies and civil society, must be fundamentally centred on safety first and on collective responsibility, including mitigating the risks associated with hateful and extremist content, antisemitism included. AI has the potential to revolutionise how our societies function, but, as in so many moments of great societal change, we must be wary as to what part, if any, antisemitism will play.