Following the role that QAnon adherents, and other actors, played in the insurrection on 6 January 2021, large social media platforms cleaned house and deplatformed a plethora of groups and movements of diverse ideologies. In this shakeup, QAnon adherents migrated to various alt-tech platforms, and over the past seven months two of these (Telegram and Gab) have turned into central hubs where adherents and influencers have built new communities. In May 2021, Jared Holt and Max Rizzuto analyzed data from Gab, Parler, Dot-Win forums, 4chan and 8kun. Though their analysis was extremely insightful, the authors did highlight that “Our analysis did not include data from Telegram, which is a popular venue for QAnon followers to distribute information among themselves, due to a lack of comprehensive data available for the platform.”

Since the electoral loss of former president Donald Trump, as well as the silence of ‘Q’, the QAnon movement has had to deal with the failure of its prophecies. In October 2020, Amarasingam and Argentino analysed the impact of failed QAnon prophecies and highlighted ways in which QAnon would potentially carry on after an electoral loss. The authors highlighted three responses:

- “There could be instances of violence, as followers undertake an urgent campaign to bring about the arrest of supposed corrupt elites, celebrities, and the deep state as a whole.”

- “We may see factionalism in the movement, with some followers being siphoned off into other movements and groups and continuing their activism in ways that become only loosely tied to QAnon.”

- “We may see the movement carry on as if nothing has changed […] that the movement is older and bigger than Trump himself, and it’s now up to them to carry the torch.”

6 January exemplifies point one; however, relevant to this piece are points two and three. Over the past seven months, QAnon has seen some members siphoned off into other movements, or movements adjacent to QAnon, while other members simply continue to carry the torch and fight against the supposed deep state. Telegram is an important ecosystem in which to analyse the factionalism and evolution of QAnon in 2021. This piece seeks to analyse the QAnon community on Telegram by examining a curated list of 30 Telegram groups of varying sizes, both to determine a minimum baseline figure for how many potentially active members presently operate on Telegram and to assess the health of these communities.

Methodology

Currently in our database there are more than 3,500 Telegram groups and channels that refer directly to QAnon and a further 10,000+ groups and channels linked to these, demonstrating a network of QAnon-adjacent chats in a multitude of languages and representing conspiracy movements internationally.

Channels represent most of the QAnon digital assets on Telegram. Some of these are simple and are used only for broadcasting messages, whereas others offer the capacity to comment on posts, a feature released in October 2020. While some channels are controlled by a single user, others are controlled by a group of admins (in one QAnon channel, 38 of the influencers that have been central to the movement administer the channel together). As channels are not consistent in how they operate and many do not have the capacity to comment on posts, it is difficult to assess the level of user activity and engagement in these spaces, as well as how many users participate or lurk. Additionally, channel data available through the Telegram API does not offer a comprehensive way of measuring engagement with posts at an individual user level. Groups on Telegram, though fewer in number, do offer the capacity to examine unique user activity, with which we can determine which accounts have been active and engaged with content and which accounts simply lurk.

To gather the data, complete member lists and chat archives for each group were collected through Telegram’s API. For each group, the message archives were filtered based on the groups’ member lists to identify currently active members. For the selected sample, all except six of these groups were created after August 2020.
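The filtering step described above can be illustrated with a minimal sketch. It assumes the member list is a collection of account IDs and that each archived message is a dictionary with a `sender_id` key; both are hypothetical shapes chosen for illustration, not the format returned by Telegram's API or the one used in this research.

```python
from collections import Counter


def count_posts_by_current_members(member_ids, messages):
    """Count archived posts per account, keeping only current group members.

    `member_ids` and the `sender_id` field are illustrative assumptions.
    """
    members = set(member_ids)
    return Counter(
        msg["sender_id"]
        for msg in messages
        if msg.get("sender_id") in members
    )


# Toy data: account 9 posted in the past but is no longer a member.
members = [1, 2, 3, 4]
archive = [
    {"sender_id": 1},
    {"sender_id": 1},
    {"sender_id": 3},
    {"sender_id": 9},
]
post_counts = count_posts_by_current_members(members, archive)
```

In this toy example only accounts 1 and 3 count as currently active; account 9 would appear only in a lifetime tally that ignores the member list.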

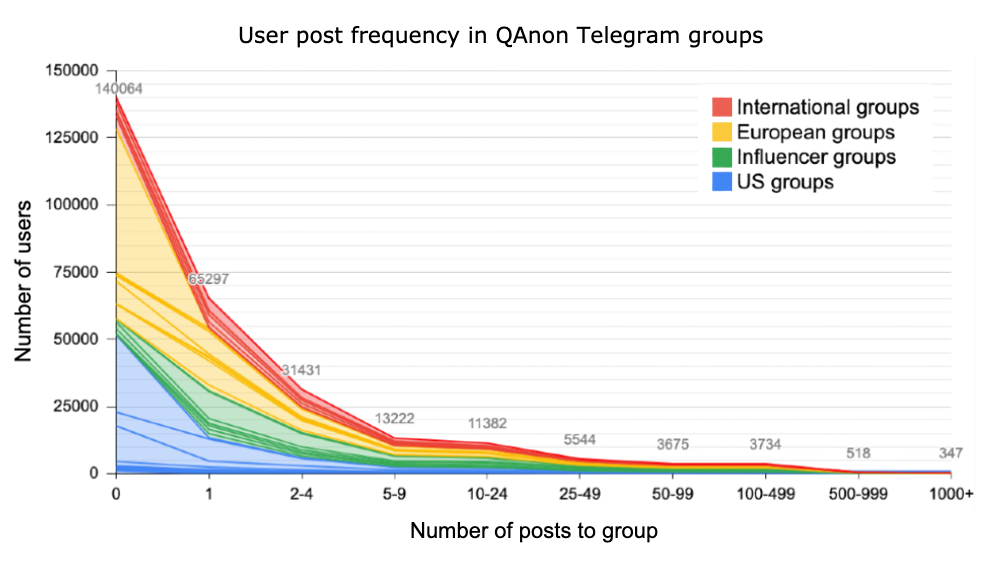

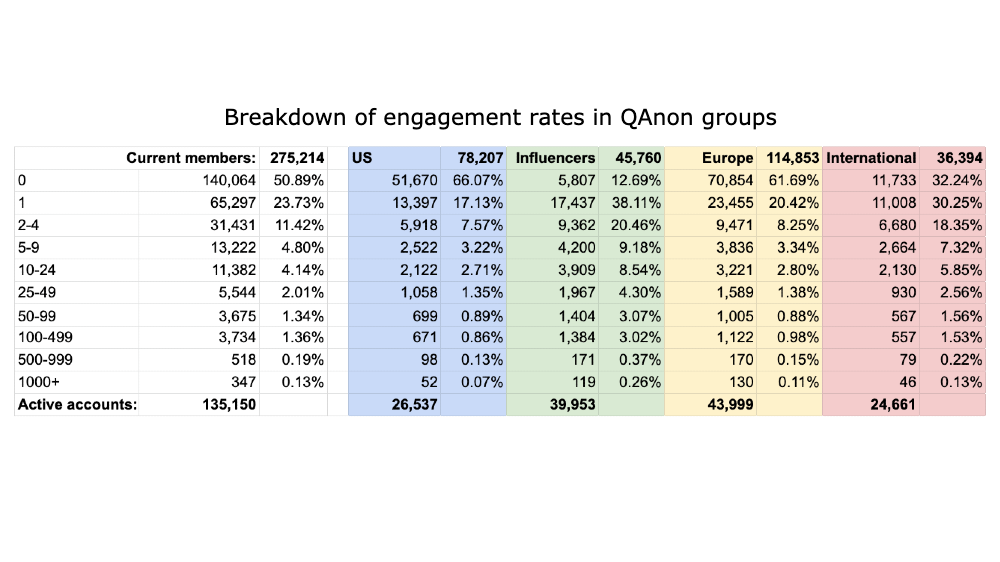

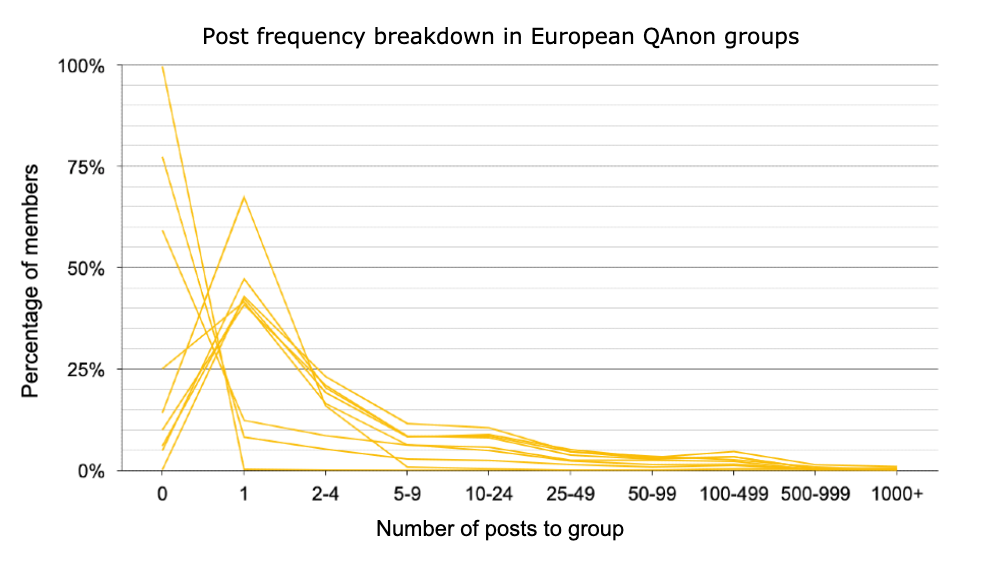

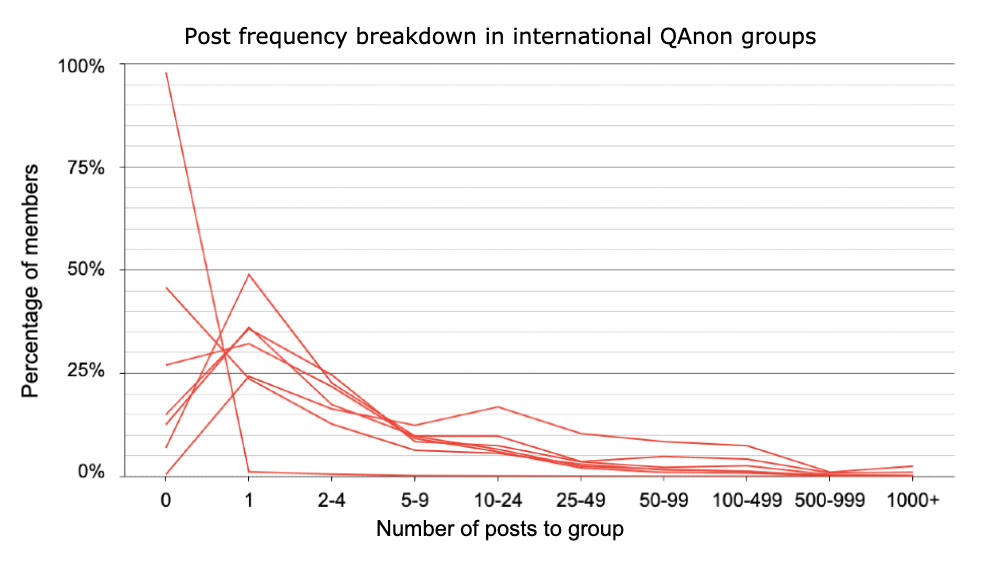

The post frequency of members with active accounts was calculated based on the number of posts each account made in the lifetime of the associated chat. To establish the number of users who had not posted in the group, the total number of active accounts was subtracted from the total group members figure. The data was then broken up into four categories: US (6 groups), key QAnon influencers (7 groups, all of which are US-based), European groups (10 groups, including those for the UK, Germany, France, Italy and Poland) and International (7 groups, including those which target an international audience or are for regions outside the US and Europe).
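As a rough illustration of the subtraction described above, the sketch below derives the number of members who never posted from a group's total membership and its per-account post counts. The function name and the input shapes are assumptions made for this example only.

```python
def posting_summary(total_members, post_counts):
    """Summarise a group: active accounts, silent accounts, active share.

    `post_counts` maps an account ID to its lifetime post count in the chat
    (a hypothetical structure used for illustration).
    """
    active = sum(1 for posts in post_counts.values() if posts > 0)
    never_posted = total_members - active  # members with no posts at all
    return {
        "active": active,
        "never_posted": never_posted,
        "active_share": active / total_members,
    }


# Toy group of 10 members, two of whom have posted.
summary = posting_summary(10, {1: 2, 3: 1})
```

Summing these per-group figures within each category would give category-level totals, provided accounts that sit in several groups are deduplicated first.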

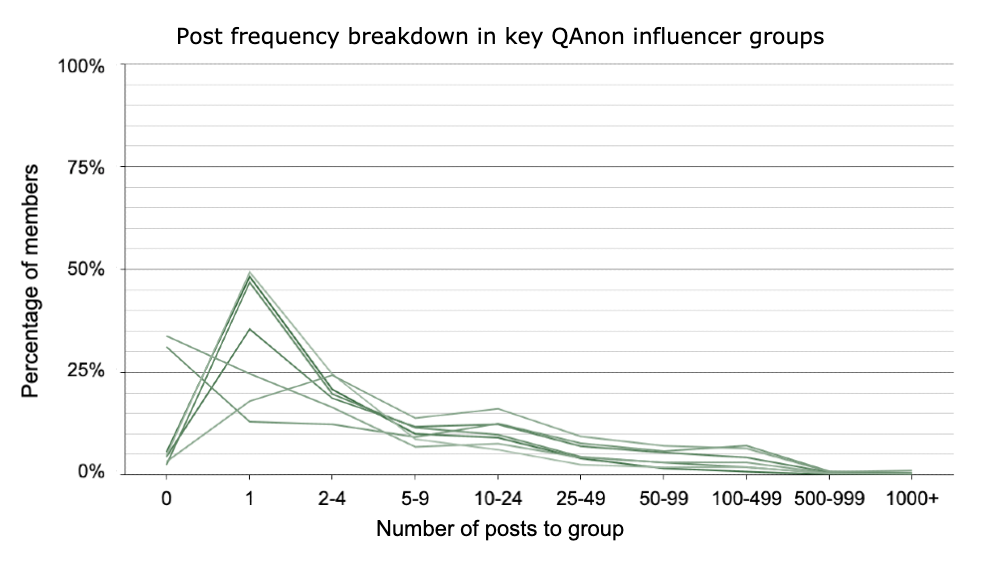

In some groups, welcome messages or initial user verification (such as CAPTCHA-like tests) resulted in users engaging once by default, to confirm they’re human. In at least one group, inactive users are removed if they do not complete this verification; in another, users are prevented from posting if they fail to complete the test. In general, this results in such groups having a high percentage of users who post only once.

Findings

In the assessed sample there are currently 229,797 unique accounts that are members of the surveyed 30 groups. Of those, 227,792 are members of three or fewer groups and 135,150 have posted at least once. We were also able to collect data from accounts that have been active since the creation of these groups but have since left, revealing a total of 639,909 accounts which have posted at all in the lifetime of these chats (including current members).
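Counting unique accounts across groups, and how many groups each account belongs to, can be sketched as follows. The group names and account IDs are toy values, and the mapping structure is an assumption for illustration rather than the format used in this research.

```python
from collections import Counter


def memberships_per_account(groups):
    """Map each account ID to the number of surveyed groups it belongs to.

    `groups` maps a group name to an iterable of member account IDs.
    """
    per_account = Counter()
    for members in groups.values():
        per_account.update(set(members))  # count each account once per group
    return per_account


groups = {
    "group_a": [1, 2, 3],
    "group_b": [2, 3, 4],
    "group_c": [3, 5],
    "group_d": [3],
}
per_account = memberships_per_account(groups)
unique_accounts = len(per_account)
in_three_or_fewer = sum(1 for n in per_account.values() if n <= 3)
```

Here five unique accounts exist across four groups, and all but account 3 (a member of all four) belong to three or fewer.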

The ability to determine if an account is active is important, as it indicates the number of people who have engaged with QAnon content in these spaces. Most users likely joined Telegram as a response to the mass deplatforming of QAnon and other ideological movements in January 2021. However, if the new users found Telegram difficult to use or didn’t enjoy the content, most would have deleted the app. Deleting the app neither deletes a user’s account data nor removes them from groups, although a basic security feature of Telegram is that, by default, accounts and their data (including messages, media and contacts) are deleted after six months of inactivity.

Similar to the findings of Holt and Rizzuto, we can point to a decrease of 78.19% in active membership in QAnon groups; however, 135,150 currently active accounts in these 30 groups alone still make QAnon the largest active extremist community on Telegram.

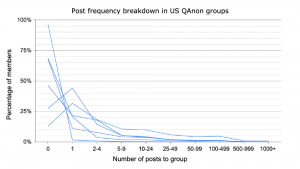

As the number of active accounts alone is not enough to examine levels of engagement with the content, we broke down user activity into several brackets to scale the levels of activity based on the number of posts an account made (see Table 1). We found that 74.62% of users either had never posted or had posted only once (likely to complete the verification to join the chat). While a single post is enough to confirm that an account entered a group intentionally, these users cannot be considered regularly active participants. Of the remaining users, which we categorised as regularly active, 1.68% (4,599) are responsible for the vast majority of posts made in these groups, with an additional 3.35% (9,219) being moderately active (25 to 100 posts), and a larger portion, 20.36% (56,035), being only a little active (2 to 24 posts).
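The bracketing can be sketched as below. The 2 to 24 and 25 to 100 ranges come from the text; treating never-posted and single-post accounts as separate brackets, and placing the most active accounts in an open-ended "101+" bracket, are assumptions made for this illustration.

```python
from collections import Counter


def activity_bracket(posts):
    """Assign a lifetime post count to an activity bracket."""
    if posts == 0:
        return "never posted"
    if posts == 1:
        return "single post"
    if posts <= 24:
        return "2-24"
    if posts <= 100:
        return "25-100"
    return "101+"  # assumed upper bracket; the exact cut-off is not stated


def bracket_shares(post_counts):
    """Return the percentage of accounts falling into each bracket."""
    tally = Counter(activity_bracket(n) for n in post_counts)
    total = len(post_counts)
    return {label: 100 * count / total for label, count in tally.items()}


# Toy sample of eight accounts and their lifetime post counts.
shares = bracket_shares([0, 0, 1, 1, 5, 30, 250, 0])
```

On real data, the "never posted" count would come from the member list rather than the message archive, since silent accounts leave no messages to count.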

When we broke down the chats by category, we found that the chats associated with channels controlled by QAnon influencers and US-based chats had fewer superusers compared to the European and international categories. Though influencers rarely post in their own chats, users in these chats regularly share posts from the associated influencer’s channel to discuss. The influencers thus inadvertently act as superusers that the community engages with and responds to. Furthermore, there is likely a transference of users who were already actively following these influencers on Twitter, Facebook, YouTube and Instagram, and who were therefore already committed to consuming the content and messaging created by these influencers.

Of the European chats, those that are not dominated by English speakers had the highest number of superusers (500+ posts). This is not abnormal, as most of the content is translated and contextualised from a US-centric outlook to an international or localised context by influencers in these communities, and not necessarily by US-based QAnon influencers. Nevertheless, former US President Trump’s statements had a significant influence in these communities, especially as they recontextualised (and sometimes mistranslated) his statements to apply to their own contexts. Additionally, international communities reacted to their own geo-political news, which is shared and driven by their own subset of influencers, further developing and sometimes fragmenting QAnon canon in these localised contexts.

We see a similar pattern of superusers in the international chats, where content is often translated and contextualised in a similar way. The original major QAnon influencers are mostly US-based; however, international communities have their own subset of influencers and conspiracy theories unique to them and associated with QAnon. Because QAnon is a ‘big-tent’ conspiracy ideology, some theories that predate QAnon narratives, such as those of sovereign citizen movements, become part of the localised canon. So do more recent additions, such as ‘big-pharma’ conspiracies alleging that an elite group is trying to control the population through advances in medicine, and a growing number of conspiracy theories around climate change.

Conclusion

Though there has been a decline in QAnon activity on Telegram from its peak in early 2021, a healthy and active community still exists on the platform. The QAnon ecosystem follows the rule of participation inequality (the phenomenon that a very small percentage of participants contribute the most significant proportion of information to the total output), but what is interesting about the chats is that QAnon influencers aren’t always highly active in the groups themselves, resulting in user-generated content created by rank-and-file QAnon adherents gaining prominence. It is in these chats that we see the formation of factionalism. This occurs when QAnon adherents are introduced to more extreme content by individuals seeking to siphon them off into other movements; as a result of infighting between QAnon influencers which trickles down to the rank-and-file follower; through the introduction of new theories by group members based on local or international current events; or through adherents doing their own decodes or reinterpretations of Q-drops or Trump statements and crafting their own new conspiracy theories.

Compared with other ecosystems on Telegram, QAnon chats dwarf those found on Terrorgram and in violent extremist ecosystems combined, yet there is cross-pollination between all three ecosystems. QAnon content often ends up in violent extremist ecosystems, but more concerning is the violent extremist and terrorist content which ends up in QAnon chats. Although most QAnon groups and channels will likely remain part of their own ecosystems, some have moved towards violent extremism (and others may do so). An example of this would be the group linked to GhostEzra’s Telegram channel. GhostEzra is a QAnon influencer who runs the largest QAnon channel on Telegram at more than 332,000 subscribers, and the associated Telegram group chat had, at the time of analysis, 6,162 members (by publication, this had risen to more than 7,300). GhostEzra is also the QAnon influencer who has moved from coded antisemitism to explicit Nazi propaganda and narratives, with Arieh Kovler, writer and former head of policy and research for Britain’s Jewish Leadership Council, tweeting in May that it “might now be the largest antisemitic online channel or forum in the world.”

Therefore, though we are far from the numbers that were seen when QAnon was on mainstream social media platforms, the community that remains is still active and cross-pollinating with more extreme movements on Telegram. Factionalism will continue to form, and new factions are increasingly influenced by the Telegram extremist ecosystem. While it is most likely that QAnon will remain behind the screen, even with a more violent extremist slant, it does mean that a large pool of individuals present themselves as targets for recruitment by violent extremists. Furthermore, this ecosystem has established a captive audience for QAnon as it establishes itself as a political force, with a number of QAnon-supporting candidates intending to run for US Congress in 2022.

Another possibility is that, with the creation of a MAGA ecosystem on Telegram, as well as the presence of conservative media outlets and personalities, some QAnon adherents will carry on as if nothing has changed and continue to thrive on the statements and narratives of former President Trump, Marjorie Taylor Greene, Lauren Boebert, Lin Wood, Sidney Powell, Mike Lindell, and any other voice aligned with the Big Lie and/or QAnon conspiracy theories.

With an established baseline, it is now possible to periodically repeat this research with a larger sample size and adjustment for fixed timeframes to continually monitor the size and engagement rate of these communities, as well as the threat level of these ecosystems.

These findings were also published by Logically.