Introduction

Earlier this month, the UK grappled with possibly one of the worst instances of organised far-right violence in its postwar history, exacerbated by a surge in digital extremism and widespread misinformation. The violence was triggered by the horrific stabbing attack in Southport, in which three young girls were killed. The tragedy ignited a wave of outrage on social media platforms, which far-right actors exploited to spread disinformation and inflammatory, racist, and anti-immigrant rhetoric. At the heart of the protests was misinformation about the suspect: claims spread that he was a Muslim immigrant when the 17-year-old was, in fact, born in Cardiff to Rwandan parents.

Digital spaces have become powerful tools for far-right extremists, allowing them to amplify their messages and mobilise violently, as the recent riots showed. This Insight examines how misinformation disseminated on social media platforms contributed to extremist mobilisation, the bout of far-right violence in the UK, and real-world harm.

Narratives of the Rioters

Far-right activism in the UK is overwhelmingly fuelled by a potent mix of anti-immigration, Islamophobic, and xenophobic ideologies, and the riots drew on all three. They have been described as “organised illegal thuggery” and as “anti-immigration protests,” although they were not directed at all immigrants, such as those who are white. Annelie Sernevall, a UK-based housing and asset management expert, shared in a post on LinkedIn that while she, a white immigrant, experiences no personal fear, her UK-born daughter of a different ethnicity faces racial discrimination. She asserts that the real issue is racism, not immigration.* Moreover, there have been reports that far-right agitators vandalised and desecrated Muslim graves in Burnley Cemetery with white and grey paint and torched hotels housing asylum seekers. Prime Minister Starmer has referred to the perpetrators as “extremists” who are attempting to spread hate.

The riots were fuelled by offline disinformation, false rumours, and misleading narratives, which incited real-world harm and led to public panic and escalating violence. Inaccurate reports and sensationalised media coverage intensified the chaos. This highlights how disinformation, whether spread offline or through social media, has tangible and often destructive consequences.

Online Radicalisation Fuelling Offline Violence in the 2024 UK Riots

X Amplifying Islamophobia and Racism

Even before the riots erupted, Darren Grimes, a presenter on GB News, shared posts on X promoting Islamophobia. This type of rhetoric fuels violence and undermines public discourse, reflecting a broader problem: the normalisation of hate speech and divisive narratives in mainstream media. Such rhetoric not only incites immediate violence but also deepens societal divisions, perpetuating a cycle of extremism and unrest.

According to Marc Owen Jones, an associate professor of Middle Eastern studies at Doha’s Hamad bin Khalifa University whose research focuses on information control strategies, the recent riots were spurred by massive misinformation. By 30 July, a day after the Southport attack, Jones had tracked “at least 27 million impressions [on social media] for posts stating or speculating that the attacker was Muslim, a migrant, refugee, or foreigner.” Other accounts on X also blamed Muslims, including Channel 3 Now, which later issued a “sincere apology and correction.” Similarly, Stephen Yaxley-Lennon, the far-right campaigner and leader of the now-disbanded English Defence League (EDL) who goes by the name Tommy Robinson, told his nearly 800,000 followers on X that “there was more evidence to suggest Islam is a mental health issue rather than a religion of peace.” (Figure 1)

Telegram and TikTok Used for Inciting Violence and Hate

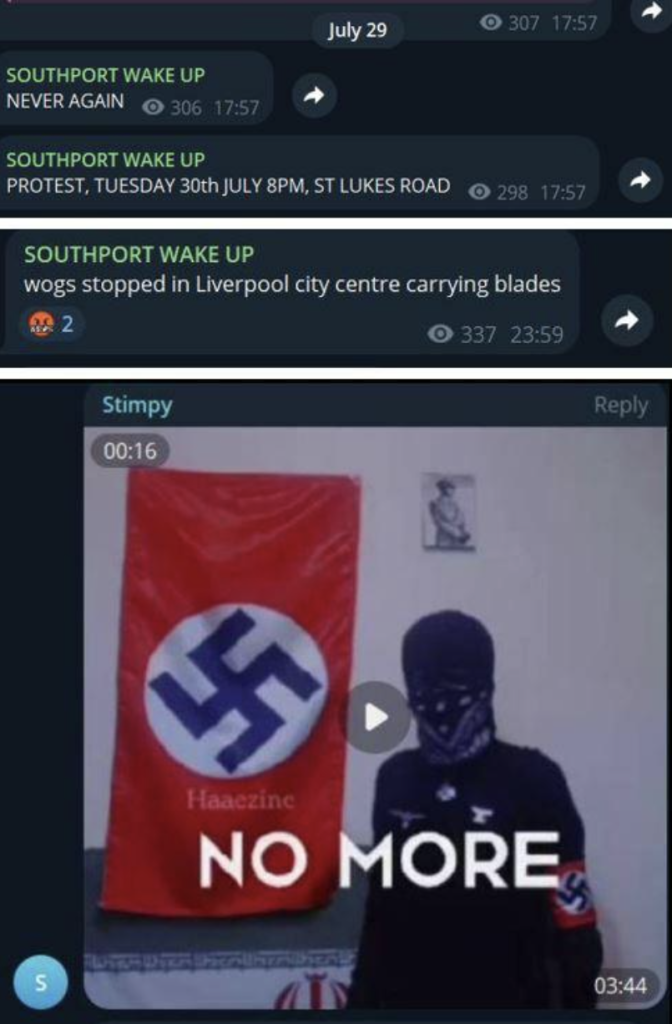

The private nature of Telegram limits the impact of moderation, making it a go-to app for those who want to spread toxic content. Its channels, where misinformation proliferates unchecked, often act as echo chambers for extremist views, escaping the checks and regulations that apply to mainstream media. Following the Southport stabbings, Telegram became a central hub for organising the riots. Far-right messaging channels circulated calls for genocide against Muslims, calls for the destruction of mosques, and bomb-making instructions. Across nine groups on Telegram, the Bureau of Investigative Journalism found posts by far-right groups linking to documents that told users how to commit arson, build bombs, and evade police. Another channel, “Southport Wake Up”, quickly gained traction; it publicised protest locations and shared extremist content, including instructions for violence. (Figure 2)

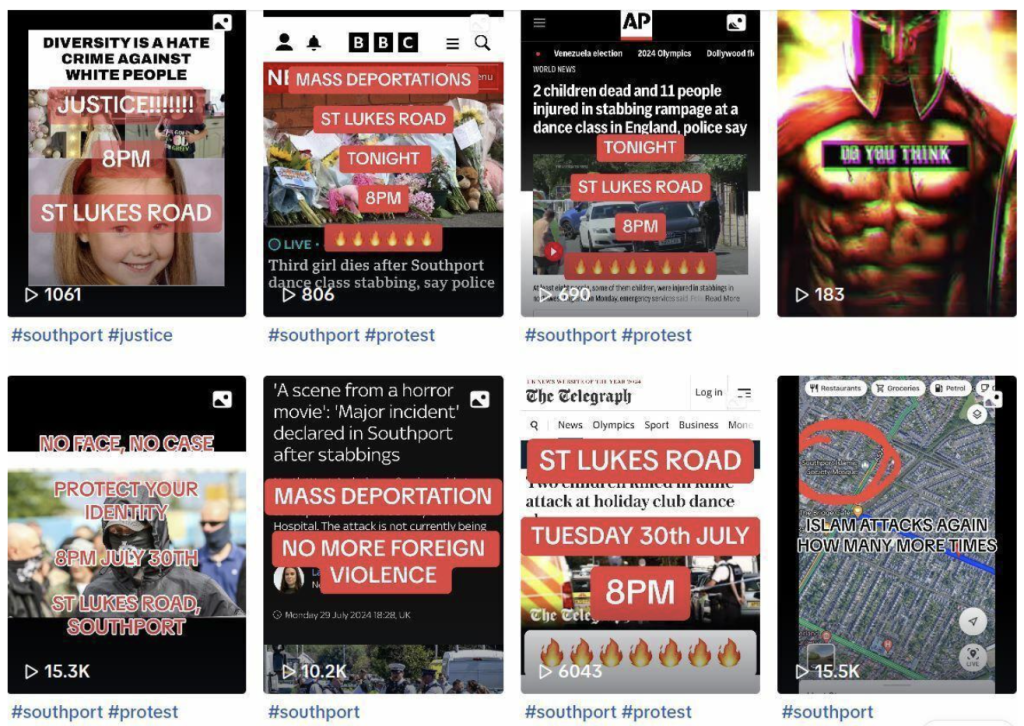

Within an hour of launching, the group shared disturbing TikTok videos promoting racist messages and encouraging anonymity. (Figure 3) A report published in 2021 identified TikTok as a hotspot for individuals intending to use the platform to share and promote hate and extremism, and the recent riots bear out that finding.

Amplification of Individual Actions in Collective Unrest

How does social media transform individual actions into the collective action visible in protests and riots? While individuals bear personal responsibility for their actions, mob dynamics can quickly lead to chaos. Undeniably, digital spaces, especially social media, bring together like-minded people to act collectively, amplifying the impact of disorder and facilitating rapid and widespread mobilisation, often while obscuring personal accountability. Moreover, social media contributes to crowd mentality and depersonalisation, with people often losing their sense of individuality and following group behaviour.

Overlooked yet Critical Aspects of Online Disinformation

- Algorithmic Amplification: Algorithms that amplified the most extreme comments and triggered a cycle of outrage and misinformation made the recent riots almost inevitable. Social media algorithms control the flow of information by amplifying content that drives user engagement, such as emotional or biased information. One study found that, to increase user engagement, social media algorithms exploit human biases towards prestigious, in-group, moral, and emotional (PRIME) information. The algorithmic amplification of PRIME information leads to social misperceptions, conflict, and misinformation. Because social media algorithms optimise for engagement rather than accuracy, such misinformation is likely to be amplified further, gaining legitimacy and exacerbating real-world consequences. Moreover, social media companies have failed to address online extremism and continue to use algorithms that prioritise engaging content, heightening polarisation, partisan animosity, and political division. Furthermore, platforms like Facebook routinely use algorithms to make critical decisions that shape our social, political, and civic processes.

- Role of Influencers and Celebrities: The role of social media influencers in spreading misinformation cannot be overlooked. Influencers with large followings can amplify misinformation without rigorous fact-checking, and their endorsement can lend credibility to misleading information, multiplying its impact. Some accounts, including that of far-right provocateur Tommy Robinson, significantly amplified the false claims about the suspect, reaching millions of accounts in seconds. Elon Musk, the owner of X, also fanned the flames. Notably, he suggested that mass migration led to the riots and that “civil war was inevitable.” This view was shared after UK Prime Minister Keir Starmer warned social media firms about violating laws in relation to online misinformation fuelling the riots.

Concluding Recommendations

In liberal democracies, there will always be a debate between upholding free expression and preventing violent disorder. Responsibility lies at both collective and individual levels.

Content moderation must be strengthened collectively. Simply removing posts or blocking sites is not enough; a collaborative approach involving government, NGOs, and tech and social media companies is needed. Moreover, social media companies need to be more transparent about their algorithms and how they amplify content, which can help identify and mitigate the spread of harmful misinformation. De-platforming accounts on mainstream social media has often led users to migrate to encrypted apps like Telegram, where monitoring is more challenging, which raises concerns about the effectiveness of de-platforming on its own. This underscores the need for a holistic strategy that addresses both the technological and social dimensions of extremism.

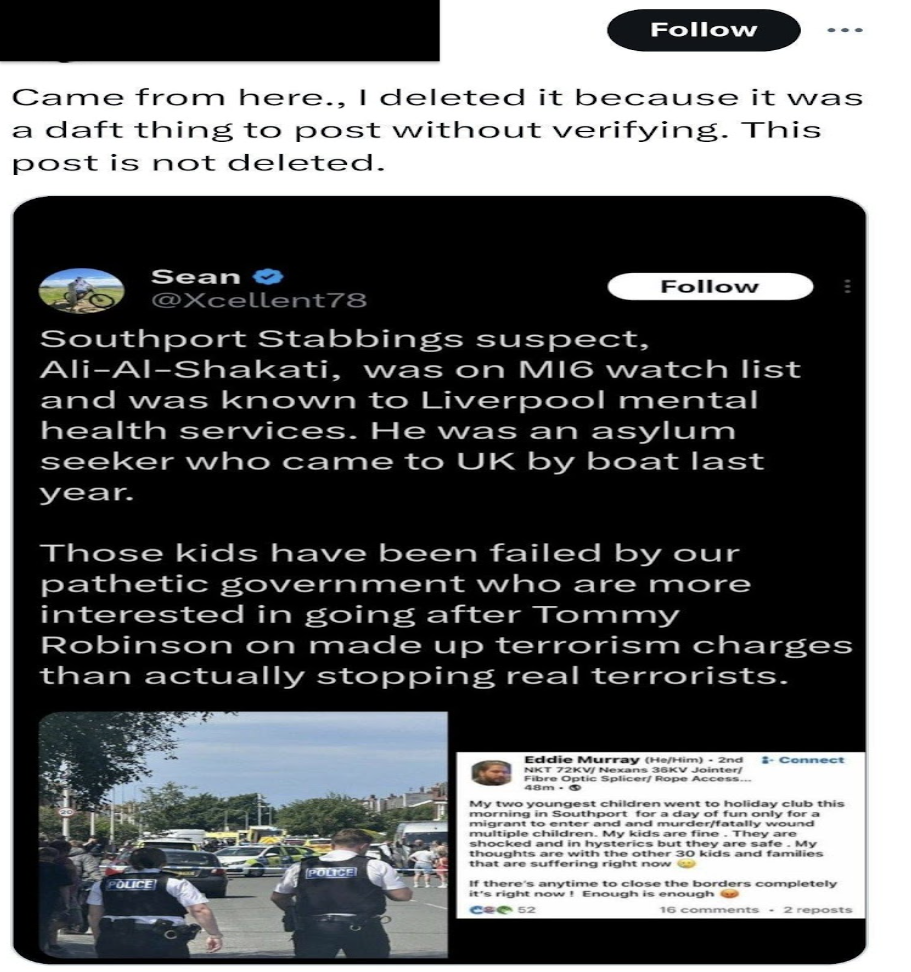

Often, individuals encountering sensational or provocative news fail to verify the accuracy of the information before sharing it. For example, like many others, this account holder shared the post without verifying it (Figure 4).

This digital misinformation ecosystem feeds on the rapid dissemination of information, creating widespread unrest. With countless online sources and the rise of AI, low media literacy makes people more vulnerable to extremist content. Understanding how online content leads to real-world violence is crucial for a comprehensive solution.

In conclusion, the recent riots reveal that digital spaces, above all social media with its faceless influencers and disinformation campaigners, have the potential to incite real-world unrest and fuel far-right violence. Moreover, this violence, even though sparked by misinformation, is the result of a divisive environment created over years by targeting “outsiders,” particularly Muslims and immigrants. The failure to address far-right extremism as the fastest-growing terrorism threat in the UK and the dismantling of counter-extremism strategies worsened the situation. A reformed and respectful approach to addressing the growing tensions and hate is needed. Politicians should prioritise unifying language that fosters inclusivity and understanding rather than division. Support for initiatives that offer alternative, positive narratives to counteract hateful rhetoric is needed more than ever to build a more cohesive society.

*Permission was obtained to share her post and views.

Mariam Shah is an independent researcher and a PhD scholar in Peace and Conflict Studies. She tweets at @M_SBukhari.