Introduction

Recent reports indicate that, as of April 2023, 60% of the world’s population uses social media for an average of just under two and a half hours a day, with social media sites being used by two-thirds of all internet users. Social media is now a major influence on the formation of public opinion and on how we perceive certain issues.

One issue raised when examining the growing usage and influence of social media is the potential for hateful and extreme ideologies to be promoted among vulnerable individuals. Research has indicated that social media has become a fundamental tool for promoters of extremist ideologies to not only share their ideological propaganda but also seek out potential new recruits for their group or cause.

Research has documented that the more an individual is exposed to hateful and extreme narratives, the more likely they are to start identifying with these ideologies and become radicalised. These advancements in the arsenal of extremist groups, together with the rising fear of vulnerable individuals becoming radicalised online, only further exacerbate the criticisms of and concerns about social media and the safety of the users who regularly use these sites.

In this Insight, we provide an overview of our study into social media users’ exposure to hateful or extremist content. We compared the experiences of social media users with the safeguarding policies stated by prominent social media sites, in the context of extremist communication and hate speech. We find that the average social media user is exposed to extremist material and hate speech 48.44% of the time they spend online. This Insight also examines the community guidelines and policies of prominent social media sites regarding extremist material, comparing the platforms’ policies with user experiences.

Social Media’s Commitment to Preventing Hate Speech

To counter these concerns and criticisms, social media sites openly state in their publicly available terms of service and community guidelines that they do not tolerate topics or discussions that involve or imply hateful or extremist content.

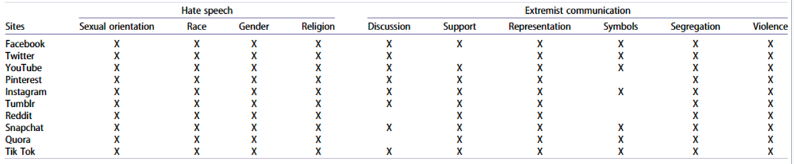

Table 1: The topics each social media site openly states it does not support

Social media platforms have also adamantly promoted their efforts to moderate and remove any material that breaches their terms of service, often promising to constantly improve their safeguarding measures. This closely scrutinised content moderation and safeguarding is carried out through several methods, ranging from the use of artificial intelligence to detect content that breaches policy guidelines to human content moderators who review content that users of the site have reported as inappropriate.

The Study

To examine social media users’ exposure to hate speech and extremist communication, a scale was created (Online Extreme Content Exposure Scale: OECE) drawing on both research findings on radicalisation language online and the policies that social media sites state are in place to prevent radicalisation and user harm (Table 1). The scale comprised 20 questions measured on a 6-point Likert scale (Anchor range: 1 = Never, 6 = Always). Participants answered each question by indicating how frequently they are exposed to the specific content described.

The OECE scale measures participants’ exposure to hate speech and to extremist communication separately, with the hate speech segment of the questionnaire containing 12 questions and the extremist communication segment containing 8 questions. The hate speech questions focused on the participants’ experience with content that they identify as hateful, with each question exploring a different target of the hateful content, such as specific races, genders and religions, e.g. ‘I see individuals online discuss particular races in a disrespectful and/or hateful manner’. The extremist communication section of the questionnaire focused on the participants’ experience with content that praises, supports and/or represents an extreme ideology or group, including the advocacy of violence and the use of symbols affiliated with an extremist organisation (such as swastikas or ISIS flags), e.g. ‘I see users post content online that encourages violence or extreme action’.
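The two-segment structure described above can be illustrated with a short scoring sketch. This is only an illustration under stated assumptions: the article does not say how responses were scored, so the item order (hate speech items first), the use of simple means and the function name `score_oece` are all hypothetical.

```python
from statistics import mean

# Taken from the article: 12 hate speech items, 8 extremist communication
# items, each answered on a 6-point scale (1 = Never, 6 = Always).
HATE_ITEMS = 12
EXTREMIST_ITEMS = 8
SCALE_MIN, SCALE_MAX = 1, 6


def score_oece(responses):
    """Return mean scores for one participant's 20 responses.

    Assumption (not from the article): the first 12 responses are the
    hate speech items and the last 8 are the extremist communication
    items, and each segment is summarised by its mean.
    """
    if len(responses) != HATE_ITEMS + EXTREMIST_ITEMS:
        raise ValueError("expected 20 responses")
    if any(not SCALE_MIN <= r <= SCALE_MAX for r in responses):
        raise ValueError("responses must be between 1 and 6")

    hate = responses[:HATE_ITEMS]
    extremist = responses[HATE_ITEMS:]
    return {
        "hate_speech": mean(hate),
        "extremist_communication": mean(extremist),
        "overall": mean(responses),
    }
```

Keeping the two segments separate in the output mirrors the article's distinction between hate speech and extremist communication, which matters later when the two kinds of exposure are compared.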

Findings

This exploratory study found that the average social media user was exposed to extremist material and hate speech 48.44% of the time they spent online. This is a worrying finding, especially given the average daily time social media users spend online, which this study found to be two hours and 39 minutes. If the average user is on social media for just over two and a half hours a day, they could be exposed to hateful or extremist content for more than one hour each day (48.44% of 159 minutes is roughly 77 minutes). These findings align with existing research that has showcased the volume of extremist content present on prominent social media sites. However, our research offers insight into not only the volume of extremist content social media users are exposed to, but also the discrepancy between the safeguarding claims of social media sites and the actual experiences of social media users. So, why are there such noticeable differences between safeguarding policies and user experience? Our research explored three possible explanations.

Free Speech

For prominent sites such as Facebook, Twitter and Reddit, a major hurdle in combating hate and extremism is balancing adherence to safeguarding regulations with their commitment to free speech. US-based companies are protected by Section 230 of the 1996 Communications Decency Act, which not only shields social media sites from liability for user-generated content but also places responsibility for that content on the users who post it. These regulations are consistent with the US constitutional tradition of protecting an individual’s right to free speech. This, in theory, protects the individuals posting and promoting hateful and extreme narratives online.

Problems with Volume

The research we conducted also highlighted the quantity of extremist content and hate speech social media users are exposed to relative to their time on social media sites, which might indicate another explanation for the discrepancy between policy and user experience. According to Facebook’s data transparency report covering the period of January to March 2023, the platform identified and flagged 82% of hate speech and 99.1% of extremist-related content on its website before users could be exposed to it and report it to the site administrator. Similar values were highlighted in the same report for Instagram, where 95.3% of hate speech and 95.1% of extremist-related content were taken down before users had a chance to report them.

However, our research suggests that users are still regularly being exposed to extreme content, despite platforms removing large quantities of it. What is more worrying is that the small amount of content that is not immediately detected makes up almost half of the content that users report being exposed to.

Tactical Content

Another issue to consider is the type of content extremist actors choose to promote their ideology or group. A noticeable feature in most social media data reports is that extremist communication and material are detected much more effectively than content that is deemed hate speech. Our research also found that social media users are exposed to hate speech more than they are to extremist communication.

This suggests that a preferred tactic of online extremist promotion is to promote hate narratives towards the perceived enemies and oppressors of their groups or ideologies, as opposed to openly discussing the ideology and its propaganda. This also showcases how social media content moderation is more effective for extremist communication and propaganda than for hate speech.

Conclusion

The research we conducted highlighted a large discrepancy between the claims made by social media sites and the actual experience of their users. Although these were only preliminary findings, the results not only highlighted potential shortcomings in the safeguarding measures of prominent social media sites but also shed light on the volume of hate and extreme content that the average social media user is exposed to on a daily basis. As found in the present study, participants experience more exposure to hate speech than to outright discussion of extremist ideology, potentially highlighting a preferred tactic of extremist online promotion and potentially showing that content moderation is more effective for extremist communication than for hate speech.

Going forward, the OECE could be used to explore social media sites individually, to discover potential commonalities and differences in the volumes and types of exposure users experience on those sites. This could then enhance the findings of the present study by highlighting the social media sites that expose their users to the highest volumes of extremist content, potentially calling attention to the gaps in their content moderation and contrasting these with their public community guidelines and policies. The findings of these extended studies could help social media companies enhance the safety of their platforms and identify where the gaps in their safeguarding efforts are. This in turn could help them improve the consistency between the policies they state regarding extremist communication and hate speech and the actual experiences of the users of their products.

Thomas James Vaughan Williams is a part-time lecturer for the MSc Investigative Psychology at the University of Huddersfield and is working towards gaining his PhD in Psychology with a focus on online radicalisation. Twitter: @tomjvw23

Dr Calli Tzani is a senior lecturer for the MSc Investigative Psychology at the University of Huddersfield. Dr Tzani has been conducting research on online fraud, harassment, terrorism prevention, bullying and sextortion.

Prof Maria Ioannou is a Chartered Forensic Psychologist (British Psychological Society), HCPC Registered Practitioner Psychologist (Forensic), Chartered Scientist (BPS), European Registered Psychologist (Europsy), Associate Fellow of the British Psychological Society, Chartered Manager (Chartered Management Institute) and a Fellow of the Higher Education Academy. She is the Course Director of the MSc Investigative Psychology and Course Director of the MSc Security Science.