Extremist content is frequently coded, both to escape moderation and to signal to others with similar ideologies. In many cases, the nature of this content is far from explicit, couched in humour or sarcasm that avoids direct violation of platform policy.

These challenges are exacerbated by the increasing proliferation of non-text content formats where existing moderation tactics may not be as effective—including music.

Consider the far-right musical and aesthetic genre Fashwave, a portmanteau of ‘fascism’ and ‘wave’ first widely reported on in 2016. Recent research, conducted in part for GIFCT, found that Fashwave songs and images continue to appear online.

This Insight details the scope of the issue, identifying:

- Mainstream influences that risk spillover of Fashwave content to new audiences

- Threats stemming from parody tunes and the re-appropriation of trending songs

- User tactics like emojis, word variations and link sharing—and how to combat them

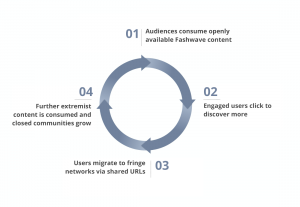

A potential digital radicalisation journey for consumers of Fashwave content.

Mainstream Influences

Fashwave provides an easy access point to extremist content. Despite ongoing rounds of video and song removals, Storyful uncovered an abundance of Fashwave content on mainstream platforms when searching associated keywords, including many tracks that had been re-uploaded after removal.

In a surface sweep of alt-right music, Storyful identified 9.64k mentions of ‘Fashwave’ on digital and social media over the year from 20 August 2020 to 20 August 2021. Keyword searches for ‘Fashwave’ returned at least 390 video results on YouTube and 43 song results on SoundCloud; including tags with expletives yielded even more results. At least 130 Fashwave videos were uploaded to YouTube in February 2021 alone, highlighting an uptick after the Capitol insurrection. Views peaked on YouTube at 47.2k, while SoundCloud tracks garnered upwards of 60 approving comments.

Many Fashwave songs were instrumentals, making them difficult to distinguish from other genres, while others sampled fascist speeches from the likes of Hitler, Mussolini, Goebbels and Oswald Mosley. Visuals included 80s retro aesthetics alongside alt-right imagery and text slogans, while song titles and lyrics featured racist, antisemitic and homophobic slurs, and mocked BLM icons like George Floyd and Trayvon Martin.

Popular mainstream songs were also reworked as alt-right parodies, including Lil Pump’s ‘Gucci Gang’ as ‘Nazi Gang’, Wheatus’ ‘Teenage Dirtbag’ as ‘Teenage Nazi’, and Elvis Presley’s ‘In the Ghetto’. Fashwave edits proved popular among gaming and history buffs, particularly on Reddit, where communities shared memes and videos. For instance, a memes subreddit for The New Order, a Hearts of Iron IV game modification set in an alternate timeline where Nazi Germany won World War II, included a Fashwave-inspired video edit that was received positively.

Storyful also found users of popular boards like 4chan’s /pol/ sharing and applauding Fashwave edits that co-opted trending songs from TikTok. Although TikTok has banned the term ‘Fashwave’ and related content, pop band MGMT’s song ‘Little Dark Age’, which went viral on TikTok in late 2020, led to Fashwave edits depicting Nazis, the Confederate Army and sexual violence, among other themes. Storyful also identified explicit content in 4chan threads about what users called ‘nazi/based/little dark age edits’ and ‘white people’ music, with several of these threads receiving over 300 comments.

Fashwave Tactics

Emojis

Emojis were popular in comments on Fashwave content, with SS Bolts (⚡️⚡️) and Sieg Heil salutes or ‘Romans’ (✋🏻 or 🙋🏻🙋🏻♂️ or “o/”) proving common responses. Emojis contained hidden and inferred meanings, tying in with extremist ideologies and offensive memes deemed ‘edgy’ or ‘based’. Internet memes were propagated in Fashwave discourse as well, in particular Pepe the Frog, Chad, and Wojak.

Word Variations

Word variations continued to prove a basic but effective tactic adopted by Internet users and fringe communities to avoid online detection or censorship. They were characterised by minor misspellings, different fonts, symbols, spacing and accents, as well as emojis or numbers substituted for letters. Examples included:

- 𝔅𝔯𝔞𝔷𝔦𝔩 – (𝐹𝒶𝓈𝒽𝓌𝒶𝓋𝑒 𝒯𝓇𝑜𝓅𝒾𝒸𝒶𝓁)

- H A T E W A V E

- N ∆ Z I
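Variations like these can often be folded back to a plain form before any keyword check is run. The following is a minimal sketch, not a description of any platform's actual pipeline: the `normalise` function and the `SUBSTITUTIONS` table are hypothetical, and the table would need to be extended from observed examples.

```python
import unicodedata

# Hypothetical look-alike table (an assumption for illustration only).
# Keys are applied after case folding, so only lower-case forms are listed.
SUBSTITUTIONS = str.maketrans({
    "\u2206": "a",  # INCREMENT sign used in place of "A"
    "\u03b4": "a",  # Greek delta (case-folded) used in place of "A"
    "4": "a",
    "3": "e",
    "1": "i",
    "0": "o",
})


def normalise(text: str) -> str:
    """Fold stylised text to lower-case letters and digits for matching."""
    # NFKC maps compatibility characters (mathematical script, Fraktur,
    # full-width forms) onto their ordinary counterparts.
    folded = unicodedata.normalize("NFKC", text)
    # NFKD splits accented letters so the combining marks can be dropped.
    decomposed = unicodedata.normalize("NFKD", folded)
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    replaced = stripped.casefold().translate(SUBSTITUTIONS)
    # Remove spacing and punctuation inserted between letters.
    return "".join(ch for ch in replaced if ch.isalnum())


print(normalise("H A T E W A V E"))
print(normalise("N \u2206 Z I"))
```

Because this folding removes all spacing and rewrites digits, it is deliberately aggressive and will produce false positives on ordinary text. It is better suited to surfacing candidates for human review than to automated removal.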

Content Takedowns & Link Sharing

Content takedowns on mainstream platforms have encouraged alt-right communities to migrate and re-upload content to alternative websites and services, including Telegram and BitChute, which do not typically moderate content. On BitChute, at least 319 explicit Fashwave videos were active. Storyful observed re-uploaded songs and videos not only on alternative platforms, but also on the platforms they had been removed from, with posters omitting artists’ names, song titles or other key identifiers.

A growing development on Twitter and Instagram has also been the use of URLs directing users to landing pages on sites like Linktree and Linkin.bio, which typically host links to other social media channels.
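One way to surface such links for a human reviewer is to extract URLs from a post or bio and check their host against a list of link-aggregator domains. This is a rough sketch under stated assumptions: `AGGREGATOR_HOSTS` and `aggregator_links` are hypothetical names, and the two domains listed are illustrative rather than a complete or verified inventory.

```python
import re
from urllib.parse import urlparse

# Illustrative assumption: hosts treated as link-aggregator landing pages.
AGGREGATOR_HOSTS = {"linktr.ee", "linkin.bio"}

# Simple pattern for http(s) URLs embedded in free text.
URL_PATTERN = re.compile(r"https?://\S+")


def aggregator_links(text: str) -> list[str]:
    """Return URLs in `text` whose host is a known link-aggregator domain."""
    found = []
    for raw in URL_PATTERN.findall(text):
        # Trim punctuation that commonly trails a URL in running text.
        url = raw.rstrip(".,;:!?)\"'")
        host = (urlparse(url).hostname or "").lower()
        if host.startswith("www."):
            host = host[4:]
        if host in AGGREGATOR_HOSTS:
            found.append(url)
    return found


print(aggregator_links("More tracks at https://linktr.ee/example, enjoy"))
```

The function only identifies where a landing page is linked. What the page itself points to would still need to be assessed by a person.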

One Fashwave creator’s videos were restricted on BitChute on the grounds that the content contained ‘incitement to hatred’. Explicit, unfiltered content nonetheless remained widely available to mass audiences: the same artist had tracks active on YouTube. The artist requested donations from fans, and supporters shared links in comments to donate via anonymous crypto payment services. This shows how websites’ comment sections might inconspicuously help fund extremist content creators, abetted by new payment technologies like crypto.

How Platforms Could Address Fashwave Content

Re-uploads of Fashwave content on platforms underscore the need for long-term monitoring and moderation processes. So-called future-proofing will be key to addressing the constant evolution of extremist genres and communities.

Some potential approaches to this problematic genre could include:

- Consistent monitoring of Fashwave-associated keywords and hashtags, including image text, to contextually verify any extremist connotations

- Including wildcard operators and word variations in sensitive lists to uncover coded communication

- Banning keyword searches and hashtag categorisation of Fashwave

- Blocking keywords on sensitive lists from appearing in profile usernames (with exceptions to allow for constructive discussions)

- Manually evaluating links posted by flagged artists to sites like Linktree
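The wildcard approach in the list above could be sketched as follows. This is an illustration only: the `WATCHLIST` patterns and the `flagged_terms` helper are hypothetical, and it uses shell-style wildcards from Python's standard `fnmatch` module, where `*` matches any run of characters and `?` matches exactly one.

```python
from fnmatch import fnmatchcase

# Hypothetical watch-list (an assumption, not a real platform list).
WATCHLIST = ["fashwav*", "f?shwave*", "hatewav*"]


def flagged_terms(text: str) -> list[tuple[str, str]]:
    """Return (token, pattern) pairs for tokens matching the watch-list."""
    hits = []
    for token in text.casefold().split():
        # Drop hashtag and mention markers and surrounding punctuation.
        cleaned = token.strip("#@.,!?()[]\"'")
        for pattern in WATCHLIST:
            if fnmatchcase(cleaned, pattern):
                hits.append((cleaned, pattern))
                break  # one hit per token is enough for review
    return hits


print(flagged_terms("new #Fashwave2021 mix"))
```

Matches are returned with the pattern that triggered them so that a reviewer can verify the context, in line with the first point above, before any action is taken.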

Each of these steps and others could go a long way toward creating safer spaces for online communities, representing a win for platforms and their users.

Max Wyatt is an intelligence analyst at Storyful, with a background in public policy and China-related corporate affairs. He specialises in content and network analysis of Internet activism, TikTok, music and meme subcultures, as well as intercultural nuances in geopolitical spheres.